Overview of Unified Language-Vision Pretraining in LLM with Dynamic Discrete Visual Tokenization

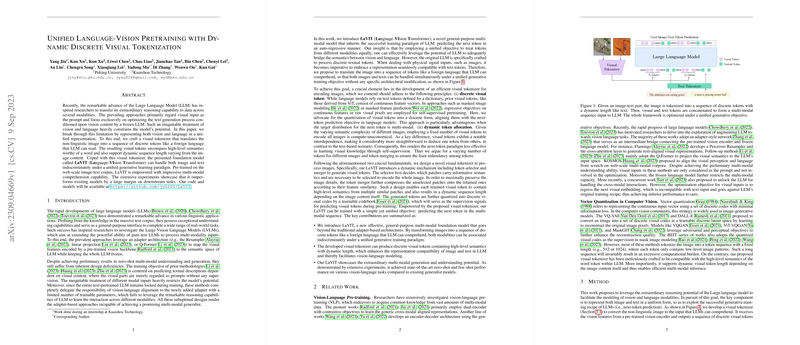

The paper presents a novel approach to integrating vision and language data within the framework of LLMs. Traditional methods often treat visual inputs merely as prompts, leading to suboptimal performance where the LLMs focus solely on text generation. This work proposes a unified model, LaVIT (Language-VIsion Transformer), that addresses these deficiencies by treating both modalities equitably, leveraging a dynamic discrete visual tokenization mechanism.

Key Contributions

LaVIT distinguishes itself by representing images as sequences of discrete tokens, akin to text, facilitating unified processing via LLMs. This is achieved through a sophisticated visual tokenizer that processes images into tokens with dynamic lengths. There are three main contributions highlighted in the paper:

- Unified Representation of Vision and Language: By tokenizing images into discrete tokens, LaVIT adapts them for LLMs without architectural changes. This allows seamless integration of vision and language inputs under a generative learning paradigm.

- Dynamic Tokenization Approach: The dynamic tokenization mechanism efficiently reduces redundancies by selecting and merging visual patches based on their information content. This results in minimized computational overhead by adjusting token sequence lengths according to image complexity.

- Demonstrated Efficacy Across Tasks: LaVIT showcases advanced capabilities in both multi-modal comprehension and generation, outperforming existing models significantly on zero-shot vision-language tasks. The proposed model is validated against various benchmarks, demonstrating state-of-the-art performance.

Technical Insights

The paper introduces a two-stage process: visual tokenizer training and joint vision-LLM pre-training.

- Visual Tokenizer Training: The tokenizer utilizes a token selector and a token merger. The selector identifies informative patches, while the merger compresses unselected patches’ information into retained tokens. These tokens are then quantized using a codebook, ensuring consistent representation with textual data.

- Unified Generative Modeling: The LLM processes combined sequences of text and visual tokens. For comprehending images, continuous features are used directly to harness detailed visual semantics. This dual handling of discrete and continuous representations empowers the seamless transition between modalities.

Experimental Results

LaVIT achieved remarkable results across multiple benchmarks. It significantly outperforms prior models in zero-shot image captioning and visual question answering tasks. The model's ability to handle complex and diverse prompts during multi-modal generation showcases its robust understanding and reasoning potential.

Additionally, the FID scores in text-to-image synthesis are comparable to specialized image generation models, despite using fewer training resources. The qualitative examples demonstrate coherent and contextually relevant image generation across varying multi-modal inputs.

Practical and Theoretical Implications

The presented work has substantial implications for the development of versatile AI systems capable of holistic data processing across multiple modalities. By unifying vision and language under a consistent generative framework, LaVIT broadens the potential applications of LLMs in real-world scenarios.

From a theoretical perspective, the approach challenges existing paradigms by integrating discrete visual representation into LLMs, promoting research into more flexible and unified AI models.

Future Developments

Future research could explore enhancing tokenization techniques to further minimize reliance on large-scale training data. Additionally, fine-tuning stability amid changing data distributions remains a challenge warranting further investigation. The potential integration with other modalities, such as audio, could be investigated, unlocking further capabilities for multi-modal LLMs.

In conclusion, the paper presents a comprehensive method for unified vision and language pretraining in LLMs, significantly advancing the field of multi-modal LLMs through innovative tokenization and training methodologies.