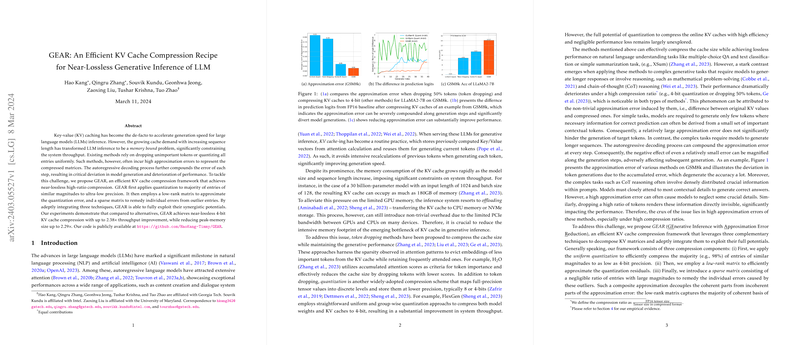

Key–value (KV) caching is the main speed-up technique for autoregressive LLM inference, but the cache's memory cost grows linearly with sequence length and batch size, quickly making it the dominant bottleneck for GPU memory and PCIe bandwidth. “GEAR: An Efficient KV Cache Compression Recipe for Near-Lossless Generative Inference of LLM” proposes a lightweight, on-the-fly compression method that shrinks KV memory by up to ≈3× while preserving generation quality, even on reasoning-heavy tasks where prior token-dropping and uniform-quantization methods fail.

1 Problem setting

- For a 30B-parameter LLM with sequence length 1024 and batch size 128, the KV cache can exceed 180 GB.

- Existing approaches:

  - Token dropping: remove “unimportant” tokens based on attention statistics. This works for short or extractive tasks but loses essential context in long reasoning chains.

  - Uniform / group-wise quantization: compress every entry to 8 or 4 bits. Outlier values inflate the quantization error, which then accumulates autoregressively and derails generation.
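To see why outliers hurt, the sketch below applies per-tensor min-max 4-bit quantization to a synthetic Gaussian vector, with and without one large outlier. The per-tensor min-max scheme and the helper name `quant_uniform` are assumptions chosen for illustration; the baselines in the paper may group or scale values differently.

```python
import numpy as np

def quant_uniform(x: np.ndarray, bits: int = 4) -> np.ndarray:
    """Per-tensor asymmetric min-max quantization, returned in dequantized form."""
    lo, hi = float(x.min()), float(x.max())
    levels = 2**bits - 1                                     # 15 steps for 4-bit
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = np.clip(np.round((x - lo) / scale), 0, levels)  # integer codes 0..levels
    return codes * scale + lo                                # map codes back to floats

rng = np.random.default_rng(0)
x = rng.standard_normal(4096)        # well-behaved entries

x_outlier = x.copy()
x_outlier[0] = 50.0                  # one synthetic outlier stretches the range

err_clean = np.abs(quant_uniform(x) - x).mean()
# Measure the error on the ordinary entries only, excluding the outlier itself.
err_outlier = np.abs(quant_uniform(x_outlier) - x_outlier)[1:].mean()

print(f"mean |error| without outlier: {err_clean:.3f}")
print(f"mean |error| with one outlier: {err_outlier:.3f}")
```

The single outlier widens the quantization step, so almost all ordinary entries collapse onto a handful of levels and their mean error rises several-fold. In autoregressive decoding this larger per-step error is what compounds.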

Complex tasks (GSM8k, MMLU, BBH) expose this error accumulation; 4-bit uniform schemes collapse to near-zero accuracy.
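The 180 GB figure from the problem setting can be checked with back-of-the-envelope arithmetic. The sketch assumes an OPT-30B-style configuration (48 layers, hidden size 7168) stored in FP16; those dimensions are an assumption, since only the parameter count is given above, and the function name is hypothetical.

```python
def kv_cache_bytes(n_layers: int, hidden: int, seq_len: int, batch: int,
                   bytes_per_value: int = 2) -> int:
    """Total size of the K and V caches over all layers, in bytes."""
    # Factor 2: every layer keeps one K and one V tensor of shape (batch, seq_len, hidden).
    return 2 * n_layers * batch * seq_len * hidden * bytes_per_value

# Assumed OPT-30B-like dimensions; FP16 = 2 bytes per value.
size = kv_cache_bytes(n_layers=48, hidden=7168, seq_len=1024, batch=128)
print(f"{size / 1e9:.1f} GB")  # → 180.4 GB
```

At a ≈3× compression ratio the same cache would shrink to roughly 60 GB.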

2 GEAR compression recipe

GEAR approximates each KV matrix X (either K or V per layer) by the sum of three complementary components:

$$
X \approx \underbrace{Q}_{\text{4-bit quantization}} + \underbrace{L}_{\text{low-rank}} + \underbrace{S}_{\text{sparse outliers}}
$$

- Uniform quantization (Q): the ≈98 % of entries left after outlier removal are stored at 4 bits per value, which maps onto hardware-friendly INT4/INT8 paths.

- Low-rank residual (L): compute the residual R = X − Q − S and obtain its top-r singular components with a single step of power iteration (SVDSolver_r). A typical rank is r = 2–5 % × min(n, d), e.g. r ≈ 40–100 for n = 2048, d = 4096. L captures coherent residual structure shared across tokens.

- Sparse correction (S): extract the largest s % positive and negative outliers (default s = 2 %), stored as 16-bit values plus INT32 indices.

The three pieces remove different error modes: Q handles bulk similarity, L captures low-frequency token-wise patterns, S patches extreme per-cell deviations. An ablation shows dropping either L or S degrades GSM8k accuracy from 15.7 % to ≈2 %.
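The three-part decomposition can be sketched in NumPy as below. This is a minimal illustration under stated assumptions, not the authors' implementation: outliers are selected by largest magnitude (covering both signs; the exact positive/negative split is assumed), quantization is per-tensor min-max 4-bit, and the rank-r factors come from one step of randomized power iteration. All function names, the synthetic matrix, and the sizes (n = 256, d = 64, r = 3, about 5 % of min(n, d)) are hypothetical.

```python
import numpy as np

def quantize_4bit(x: np.ndarray) -> np.ndarray:
    """Per-tensor min-max 4-bit quantization, returned dequantized (assumed scheme)."""
    lo, hi = float(x.min()), float(x.max())
    scale = (hi - lo) / 15 if hi > lo else 1.0
    return np.clip(np.round((x - lo) / scale), 0, 15) * scale + lo

def extract_outliers(x: np.ndarray, s: float) -> np.ndarray:
    """Keep the fraction s of entries with the largest magnitude; zero elsewhere."""
    k = max(1, int(round(s * x.size)))
    idx = np.argpartition(np.abs(x).ravel(), -k)[-k:]  # flat indices of the top-k |x|
    S = np.zeros_like(x)
    S.flat[idx] = x.ravel()[idx]
    return S

def low_rank_power_iter(R: np.ndarray, r: int, rng: np.random.Generator):
    """Rank-r factors of R from one power-iteration step (randomized-SVD style)."""
    Y = R @ rng.standard_normal((R.shape[1], r))  # n x r sketch of R's column space
    Y = R @ (R.T @ Y)                             # one power-iteration step
    P, _ = np.linalg.qr(Y)                        # orthonormal basis, n x r
    return P, P.T @ R                             # L = P @ (P.T @ R), kept factored

def gear_compress(X: np.ndarray, s: float = 0.02, r: int = 3, seed: int = 0):
    """Return (Q, A, Bt, S) with X ≈ Q + A @ Bt + S."""
    rng = np.random.default_rng(seed)
    S = extract_outliers(X, s)                      # sparse outliers first
    Q = quantize_4bit(X - S)                        # 4-bit bulk without outliers
    A, Bt = low_rank_power_iter(X - Q - S, r, rng)  # low-rank part of R = X - Q - S
    return Q, A, Bt, S

# Synthetic KV-like matrix: shared low-rank structure, small noise, a few big outliers.
rng = np.random.default_rng(1)
n, d = 256, 64
X = rng.standard_normal((n, 4)) @ rng.standard_normal((4, d))
X += 0.1 * rng.standard_normal((n, d))
X[rng.random((n, d)) < 0.01] *= 20.0

Q, A, Bt, S = gear_compress(X, s=0.02, r=3)

def rel_err(approx: np.ndarray) -> float:
    return float(np.linalg.norm(X - approx) / np.linalg.norm(X))

err_q = rel_err(quantize_4bit(X))   # quantization only
err_qs = rel_err(Q + S)             # + sparse outliers
err_qls = rel_err(Q + A @ Bt + S)   # + low-rank residual
print(f"Q: {err_q:.4f}  Q+S: {err_qs:.4f}  Q+L+S: {err_qls:.4f}")
```

On this synthetic matrix, removing outliers before quantizing cuts the error sharply. Adding L can only lower it further, because P(PᵀR) is an orthogonal projection of the residual; how much it helps depends on how much coherent structure the residual has, which is the property GEAR relies on in real KV caches.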

Streaming implementation

To hide the latency of SVD and sparse packing, GEAR buffers the latest b tokens (default b = 20) in FP16. Compression is performed only when the buffer is full, amortising extra kernels and keeping additional memory below 2 %.
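The buffering logic can be sketched as a small class: each new K (or V) row is staged in FP16, and a compression callback runs only once every b tokens. The class name, the `on_flush` callback, and handing over only the buffered block are illustrative assumptions; what the compressor does with the existing cache (for example, re-compressing the concatenation) is left to the callback.

```python
from typing import Callable, List

import numpy as np

class StreamingKVBuffer:
    """Hypothetical FP16 staging buffer that hands a block to a compressor every b tokens."""

    def __init__(self, b: int, on_flush: Callable[[np.ndarray], None]) -> None:
        self.b = b
        self.on_flush = on_flush            # e.g. a GEAR-style compressor (assumed)
        self._rows: List[np.ndarray] = []

    def append(self, kv_row: np.ndarray) -> None:
        """Stage one token's K (or V) row in FP16; flush when the buffer is full."""
        self._rows.append(kv_row.astype(np.float16))
        if len(self._rows) == self.b:
            self.on_flush(np.stack(self._rows))  # one compression call per b tokens
            self._rows.clear()

    def pending(self) -> int:
        """Number of tokens still held uncompressed."""
        return len(self._rows)

flushed = []
buf = StreamingKVBuffer(b=20, on_flush=lambda block: flushed.append(block.shape))
rng = np.random.default_rng(0)
for t in range(50):
    buf.append(rng.standard_normal(128))
print(len(flushed), buf.pending(), flushed[0])  # → 2 10 (20, 128)
```

Over T generated tokens the compressor runs only ⌊T/b⌋ times, which is how the extra SVD and packing kernels are amortised; at most b − 1 tokens sit in the FP16 buffer at any moment.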

3 Practical algorithm

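```
for each generation step t:
    append the new k_t, v_t to the small FP16 buffer B
    if |B| == b:
        for X in (K, V):                       # same recipe for keys and values
            X' = X ∥ B_X                        # cached entries + buffered tokens
            S  = top/bottom s% entries of X'    # sparse outliers
            Q  = Quant4(X' - S)                 # 4-bit uniform quantization
            L  = PowerIter_SVD_r(X' - Q - S)    # rank-r residual via power iteration
            store compressed (Q, L, S) for X
        clear B                                 # only after both K and V are compressed
```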

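Relative to FP16 storage, the compression ratio is approximately

$$
\text{ratio} \approx \frac{1}{\frac{\text{bits}}{16} + 3s + \frac{n+d}{\max(n,d)}\,\rho}, \qquad \rho = \frac{r}{\min(n,d)},
$$

where bits is the quantization bit width (distinct from the buffer size b), 3s covers the outliers (a 16-bit value plus a 32-bit index each), and the last term is the FP16 cost of the rank-r factors. With bits = 4, s = 0.02, ρ = 0.02 and n = d, the denominator is 0.25 + 0.06 + 0.04 = 0.35, i.e. a ≈2.9× (roughly 3×) size reduction.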
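The formula is easy to sanity-check numerically. The function below just evaluates it, with `bits` the quantization bit width (4 here) and `s`, `rho`, `n`, `d` as defined above; like the formula, it ignores the FP16 buffer and any quantization scale metadata. The function name is hypothetical.

```python
def gear_compression_ratio(bits: int, s: float, rho: float, n: int, d: int) -> float:
    """FP16-relative compression ratio of Q + L + S (buffer and scale metadata ignored)."""
    quant_cost = bits / 16                      # dense quantized payload
    sparse_cost = 3 * s                         # per outlier: 16-bit value + 32-bit index
    lowrank_cost = (n + d) / max(n, d) * rho    # r(n + d) FP16 factor entries vs n*d originals
    return 1.0 / (quant_cost + sparse_cost + lowrank_cost)

print(round(gear_compression_ratio(4, 0.02, 0.02, 2048, 2048), 2))  # → 2.86
print(round(gear_compression_ratio(4, 0.02, 0.05, 2048, 4096), 2))  # → 2.6
print(round(gear_compression_ratio(4, 0.10, 0.0, 2048, 4096), 2))   # → 1.82
```

The last two calls line up with the 2.6× (GEAR) and 1.8× (outlier-reduced) ratios in the results table if one assumes n/d = 1/2; the table does not state matrix shapes. With bits = 4 the ratio can never exceed 1/(4/16) = 4×, however small s and ρ are.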

4 Experiments

Models: LLaMA-2-7B, LLaMA-2-13B, Mistral-7B (FP16 weights). Tasks: GSM8k, MMLU, BBH, WikiText-2; Chain-of-Thought (CoT) and zero-shot variants.

| Method (4-bit) | KV ratio | GSM8k-CoT (7B) | MMLU-CoT (7B) | GSM8k-CoT (13B) |
|---|---|---|---|---|
| Uniform | 4× | 0 % | 0 % | 0 % |
| Group-wise | 4× | 1.4 % | 11.9 % | 2.1 % |
| Outlier-reduced (s = 10 %) | 1.8× | 11.2 % | 40.7 % | 21.3 % |
| GEAR (s = 2 %, ρ = 5 %) | 2.6× | 15.7 % | 44.5 % | 28.0 % |

- GEAR stays within 0.5 pp (absolute) of FP16 accuracy on most datasets.

- Average accuracy gain vs best baseline: +5.8 % at 2.6× compression.

- On zero-shot GSM8k (7B-chat) accuracy drops only 0.4 pp versus FP16.

System-level: evaluated with a zig-zag GPU/CPU offloading scheduler on a Titan RTX (24 GB). 4-bit GEAR raises throughput (tokens/s) by 2.38× and cuts peak GPU memory by 2.29×, enabling 1.7× larger batch sizes or context windows.

5 Implementation notes

- Quantization: standard per-tensor INT4, with dequantization scaling done on-chip; existing INT8 kernels can be reused.

- Sparse outliers: store FP16 values with INT32 indices; a CSR layout is faster than COO.

- Low-rank: single power-iteration (2-3 GEMMs) per compress cycle; negligible for b=20.

- Works as a drop-in wrapper around the HuggingFace `generate()` loop; reference PyTorch code has been released.
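For the sparse term, the practical difference between COO and CSR is how row information is stored. The NumPy sketch below packs a dense outlier matrix into CSR arrays (FP16 values, INT32 column indices, INT32 row pointers) and reads one row back. The helper names are hypothetical, and a real kernel would consume these arrays on the GPU rather than in NumPy.

```python
import numpy as np

def to_csr(S: np.ndarray):
    """Pack the nonzeros of a dense outlier matrix into CSR arrays."""
    rows, cols = np.nonzero(S)                     # row-major order, so rows come out sorted
    values = S[rows, cols].astype(np.float16)      # 16-bit outlier values
    col_idx = cols.astype(np.int32)                # one 32-bit column index per outlier
    row_ptr = np.zeros(S.shape[0] + 1, dtype=np.int32)
    row_ptr[1:] = np.cumsum(np.bincount(rows, minlength=S.shape[0]))
    return values, col_idx, row_ptr                # row i owns entries row_ptr[i]:row_ptr[i+1]

def csr_row(values, col_idx, row_ptr, i: int, d: int) -> np.ndarray:
    """Rebuild dense row i (length d) from the CSR arrays."""
    out = np.zeros(d, dtype=np.float32)
    start, end = row_ptr[i], row_ptr[i + 1]
    out[col_idx[start:end]] = values[start:end]
    return out

S = np.zeros((4, 6), dtype=np.float32)
S[0, 2], S[2, 0], S[2, 5] = 9.5, -7.0, 12.0
values, col_idx, row_ptr = to_csr(S)
print(row_ptr.tolist())                                  # → [0, 1, 1, 3, 3]
print(csr_row(values, col_idx, row_ptr, 2, 6).tolist())  # → [-7.0, 0.0, 0.0, 0.0, 0.0, 12.0]
```

Per outlier this layout stores 16 + 32 bits, three times the cost of one FP16 value, which matches the 3s term in the compression-ratio formula; COO would add a second 32-bit row index per entry.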

6 Ablations and insights

- Compression ratio sweep shows GEAR still near-lossless at 3.4×; baselines collapse.

- GEAR vs token dropping (H₂O): on GSM8k, H₂O with 50 % of tokens dropped reaches 6.8 % accuracy, while GEAR at 3× compression reaches 14.2 %.

- Fine-tuned GSM8k model: GEAR (ρ = 10 %) recovers 97 % of FP16 accuracy.

- Rank and sparsity sensitivity: sweet spot around s = 1–2 %, ρ = 2–5 %.

7 Limitations & future work

- Additional index storage means effective ratio plateaus beyond ≈4×.

- Assumes residual error is low-rank; may be less effective on highly entropic activations (e.g., early training checkpoints).

- Custom fused kernels that evaluate attention directly on the compressed cache, e.g. the scores q·(Q + L + S)ᵀ for a query q, could further reduce compute overhead.
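The idea behind such a kernel is that attention scores never require materialising K ≈ Q + L + S: with L kept in factored form A·Bᵀ, each term can be applied to the query separately. The NumPy sketch below only checks this identity on random data; the shapes, names, and dense storage of S are illustrative assumptions, and no particular fused kernel is implied.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, r = 512, 128, 4                   # cached tokens, head dimension, rank (illustrative)

Q_hat = rng.standard_normal((n, d))     # stands in for the dequantized 4-bit part
A = rng.standard_normal((n, r))         # low-rank factors: L = A @ B.T
B = rng.standard_normal((d, r))
S = np.zeros((n, d))                    # sparse outliers (dense array here for simplicity)
idx = rng.choice(n * d, size=n * d // 50, replace=False)   # about 2 % of entries
S.flat[idx] = 10.0 * rng.standard_normal(idx.size)

q = rng.standard_normal(d)              # a single query vector

# Baseline: reconstruct K = Q + L + S, then score every cached token against q.
dense_scores = (Q_hat + A @ B.T + S) @ q

# Component-wise: L is never materialized; the low-rank term costs O((n + d) * r).
factored_scores = Q_hat @ q + A @ (B.T @ q) + S @ q

print(np.allclose(dense_scores, factored_scores))  # → True
```

In a real kernel the S term would read the CSR arrays directly, so only the 4-bit payload, the small factors, and the outliers ever leave memory.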

8 Takeaways for practitioners

- Plug GEAR into inference loops to cut KV memory 2–3× with under 1 % quality loss on reasoning tasks.

- Use s ≈ 2 %, ρ ≈ 0.02, buffer b ≈ 20 as safe defaults.

- Combine with 8-bit or 4-bit weight quantization and offloading frameworks (FlexGen, DeepSpeed ZeRO-3) for maximal GPU memory savings.

- Minimal engineering: only INT4 quantization, top-k outlier extraction, and one small power-iteration SVD per buffer flush; no retraining and no custom CUDA kernels required.
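The suggested defaults can be collected in a small settings object. The sketch below is hypothetical (the class and field names are not from the reference code); its one piece of logic converts the rank ratio ρ into a concrete rank r = ρ · min(n, d) for a given KV matrix.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GEARConfig:
    """Hypothetical settings object holding the suggested defaults."""
    bits: int = 4            # quantization bit width for Q
    s: float = 0.02          # fraction of entries kept as sparse outliers
    rho: float = 0.02        # rank ratio, rho = r / min(n, d)
    buffer_size: int = 20    # tokens staged in FP16 before each compression

    def __post_init__(self) -> None:
        if not (0.0 <= self.s < 1.0 and 0.0 <= self.rho <= 1.0):
            raise ValueError("s and rho must be fractions")
        if self.buffer_size < 1:
            raise ValueError("buffer_size must be positive")

    def rank(self, n: int, d: int) -> int:
        """Rank r implied by rho for an n x d KV matrix (at least 1)."""
        return max(1, round(self.rho * min(n, d)))

cfg = GEARConfig()
print(cfg.rank(2048, 4096))  # → 41
```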

The paper demonstrates that carefully combining quantization with low-rank and sparse corrections hits a sweet spot, achieving “near-lossless” generation accuracy while significantly accelerating and scaling LLM inference workloads.