LLaVA-UHD: Efficiently Handling Any Aspect Ratio and High-Resolution Images in Large Multimodal Models

Introduction

Multimodal understanding, reasoning, and interaction have advanced substantially, largely because visual signals have been integrated into large language models (LLMs). This integration hinges on efficient and adaptive visual encoding strategies. Current Large Multimodal Models (LMMs), however, fall short in efficiently handling images of varying aspect ratios and high resolutions, a capability that is essential for real-world applications. This paper introduces LLaVA-UHD, an LMM that efficiently processes images of any aspect ratio and at high resolution. LLaVA-UHD addresses these shortcomings with three components: an image modularization strategy, a compression module, and a spatial schema for slice organization.

Systematic Flaws in Existing Models

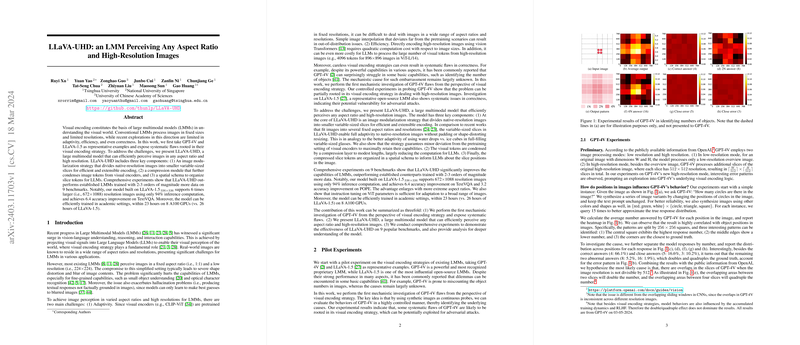

The paper examines GPT-4V and LLaVA-1.5 and identifies systematic flaws in their visual encoding, particularly in how accurately they perceive high-resolution images. The findings also point to a potential vulnerability to adversarial attacks, underscoring the need for improved visual encoding strategies.

Core Components of LLaVA-UHD

- Image Modularization Strategy: This component divides native-resolution images into smaller, variable-sized slices, adapting efficiently to any aspect ratio and resolution. Unlike previous methods relying on fixed aspect ratios, LLaVA-UHD's approach ensures full adaptivity with minimal deviation from the visual encoders' pretraining settings.

- Compression Module: To manage the processing demands of high-resolution images, a compression layer further condenses image tokens, reducing the computational load on LLMs.

- Spatial Schema: A novel spatial schema organizes slice tokens, providing LLMs with contextual information about slice positions within the image. This aids the model in understanding the global structure of the image from its parts.
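The first and third components above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the 336-pixel encoder size, the cap of six slices, the squareness score, and the use of "," and a newline as slice separators are all assumptions made for illustration. The idea is to estimate how many encoder-sized slices an image needs, choose the grid whose slices stay closest to the encoder's square pretraining shape, and then lay the slices out in a way that tells the LLM where each one sits.

```python
import math


def choose_grid(width, height, encoder_size=336, max_slices=6):
    """Return (cols, rows) for slicing an image of the given size.

    Hypothetical helper: estimates how many encoder-sized slices the image
    needs, then picks the factorization whose slices are closest to square.
    encoder_size and max_slices are illustrative assumptions.
    """
    ideal = math.ceil(width * height / (encoder_size * encoder_size))
    ideal = max(1, min(ideal, max_slices))

    # Consider neighbouring slice counts too, so that a prime count such
    # as 5 is not forced into a 1 x 5 strip.
    candidates = []
    for n in (ideal - 1, ideal, ideal + 1):
        if 1 <= n <= max_slices:
            candidates += [(c, n // c) for c in range(1, n + 1) if n % c == 0]

    def score(grid):
        cols, rows = grid
        slice_aspect = (width / cols) / (height / rows)
        return -abs(math.log(slice_aspect))  # 0 means perfectly square

    return max(candidates, key=score)


def spatial_schema(cols, rows):
    """Lay out slice placeholders row by row.

    Assumption: ',' separates slices within a row and a newline separates
    rows. The <slice_r_c> placeholders stand in for each slice's tokens.
    """
    return "\n".join(
        ",".join(f"<slice_{r}_{c}>" for c in range(cols)) for r in range(rows)
    )


cols, rows = choose_grid(672, 1088)
print(cols, rows)
print(spatial_schema(cols, rows))
```

Under these assumptions, a 672 × 1088 image is split into 2 columns by 3 rows, so each slice is roughly 336 × 363 pixels and stays close to the square input the encoder was pretrained on. The compression module would then reduce the token count of each slice before the schema string is assembled; that step is omitted here.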

Experimental Findings

LLaVA-UHD outperforms existing models trained on significantly larger datasets across nine benchmarks. Compared with LLaVA-1.5, it improves accuracy by 6.4 points on TextVQA and by 3.2 points on POPE. It also supports images with six times the resolution while requiring only 94% of LLaVA-1.5's inference computation.
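The "six times" figure is easiest to read as a ratio of pixel counts. The short check below assumes a 336 × 336 input for LLaVA-1.5 and a 672 × 1088 input for LLaVA-UHD; neither size is stated in this summary, and `pixel_ratio` is a hypothetical helper.

```python
def pixel_ratio(width, height, base_size=336):
    """Ratio of an image's pixel count to that of a square base input."""
    return (width * height) / (base_size * base_size)


# Assumed sizes: 672 x 1088 for LLaVA-UHD versus 336 x 336 for LLaVA-1.5.
ratio = pixel_ratio(672, 1088)
print(round(ratio, 2))  # 6.48
```

Under these assumed sizes the image holds about 6.5 times as many pixels, which matches the roughly sixfold increase reported.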

Practical Implications and Theoretical Significance

LLaVA-UHD's approach contributes to the broader field of AI by offering an efficient solution for processing high-resolution images within LMMs, without sacrificing performance or computational efficiency. The model's adaptability to any aspect ratio and resolution reflects a significant step toward handling real-world images more effectively.

Future Directions

The paper hints at future exploration into encoding higher-resolution images and tasks such as small object detection, emphasizing the need for continued advancement in visual encoding strategies within multimodal systems.

Conclusion

LLaVA-UHD represents a critical advancement in the visual perception capabilities of LMMs. By addressing the fundamental limitations around aspect ratio adaptability and the processing of high-resolution images, the model sets a new benchmark for efficiency and accuracy in multimodal AI systems.