Enhancing LLM Reasoning through Knowledge Graph-Integrated Collaboration

Introduction

Recent advances in large language models (LLMs) have set new benchmarks across a range of natural language processing (NLP) tasks. Despite these achievements, problems such as hallucination, outdated knowledge, and opaque reasoning persist and limit the practical application of LLMs. This paper addresses these issues by tightly integrating knowledge graphs (KGs) with LLMs, which improves reasoning and makes outputs traceable to the knowledge that supports them.

Methodology

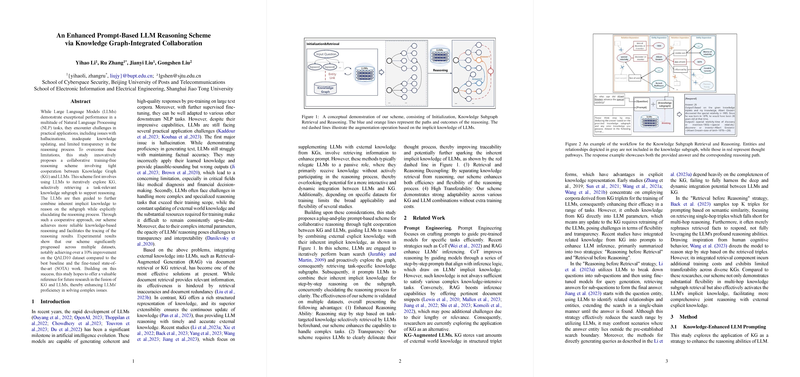

The approach is a cooperative, training-free scheme in which the LLM iteratively explores the KG and retrieves a task-relevant knowledge subgraph, which then serves as the basis for its reasoning. The method has three main phases:

- Initialization: Identifying key entities within the input question to anchor the subsequent search in the KG.

- Knowledge Subgraph Retrieval: Starting from these entities, a beam search expands across relations and entities within the KG, keeping only the most promising candidates at each step, and constructs a subgraph of contextually relevant knowledge.

- Reasoning: Using this subgraph, the LLM reasons step by step and states its reasoning path explicitly, combining its own inherent knowledge with the facts retrieved from the KG.

Because the answer is grounded in a retrieved subgraph and the reasoning path is stated explicitly, the LLM's output is both knowledge-based and transparent, addressing two significant limitations of current models.
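The three phases can be illustrated with a minimal sketch. It is not the paper's implementation: the toy KG, the function names (`find_topic_entities`, `retrieve_subgraph`, `build_reasoning_prompt`), and the parameters `beam_width` and `max_depth` are all hypothetical. In particular, `keyword_score` is a simple word-overlap heuristic standing in for whatever relevance judgment the LLM would make when pruning candidates, and the final LLM call is replaced by a function that only builds the prompt.

```python
import re
from typing import Callable

Triple = tuple[str, str, str]  # (head entity, relation, tail entity)

# Hypothetical toy KG: entity -> outgoing (relation, tail) edges.
TOY_KG: dict[str, list[tuple[str, str]]] = {
    "Marie Curie": [("born_in", "Warsaw"), ("field", "Physics"),
                    ("spouse", "Pierre Curie")],
    "Warsaw": [("capital_of", "Poland")],
    "Pierre Curie": [("born_in", "Paris")],
    "Paris": [("capital_of", "France")],
}


def find_topic_entities(question: str, kg: dict) -> list[str]:
    """Phase 1 (initialization): KG entities mentioned verbatim in the question."""
    q = question.lower()
    return [entity for entity in kg if entity.lower() in q]


def keyword_score(question: str, triple: Triple) -> float:
    """Stand-in for LLM-based pruning: count relation words found in the question."""
    question_words = set(re.findall(r"[a-z]+", question.lower()))
    relation_words = set(triple[1].lower().split("_"))
    return float(len(question_words & relation_words))


def retrieve_subgraph(question: str, kg: dict,
                      score: Callable[[str, Triple], float],
                      beam_width: int = 2, max_depth: int = 2) -> list[Triple]:
    """Phase 2 (retrieval): beam search over paths that start at topic entities."""
    frontier = [(entity, ()) for entity in find_topic_entities(question, kg)]
    subgraph: list[Triple] = []
    for _ in range(max_depth):
        candidates = []
        for entity, path in frontier:
            for relation, tail in kg.get(entity, []):
                triple = (entity, relation, tail)
                if triple not in path:  # do not reuse an edge within one path
                    candidates.append((tail, path + (triple,)))
        if not candidates:
            break
        # Rank each extended path by the summed score of its triples,
        # then keep only the top `beam_width` paths.
        candidates.sort(key=lambda c: sum(score(question, t) for t in c[1]),
                        reverse=True)
        frontier = candidates[:beam_width]
        for _, path in frontier:
            for triple in path:
                if triple not in subgraph:
                    subgraph.append(triple)
    return subgraph


def build_reasoning_prompt(question: str, subgraph: list[Triple]) -> str:
    """Phase 3 (reasoning): serialize the subgraph into a prompt for the LLM."""
    facts = "\n".join(f"({h}, {r}, {t})" for h, r, t in subgraph)
    return (f"Knowledge triples:\n{facts}\n\n"
            f"Question: {question}\n"
            "Answer step by step and cite the triples you rely on.")


if __name__ == "__main__":
    question = "In which country was Marie Curie born?"
    triples = retrieve_subgraph(question, TOY_KG, keyword_score)
    print(build_reasoning_prompt(question, triples))
```

In this sketch the beam keeps the `born_in` edge at the first step and follows it to `capital_of` at the second, while the `spouse` branch is pruned. Asking the model to cite triples is one simple way to make the reasoning path traceable; the scoring function is the natural place to plug in an LLM call.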

Experimental Setup and Results

The scheme was evaluated on diverse datasets covering tasks from question answering to fact-checking, and compared against baselines such as GPT-3.5-turbo with and without external knowledge integration. Notably, it achieved an improvement of more than 10% over both the best baseline and the fine-tuned state-of-the-art (SOTA) on the QALD10 dataset. These results indicate that incorporating an external KG can substantially improve LLM reasoning accuracy.

Implications and Future Directions

The research has clear practical implications. Integrating KGs with LLMs can reduce hallucinations and inaccuracies, making LLMs more reliable for critical applications in fields such as medicine and finance. Theoretically, the work advances our understanding of how external knowledge sources can be leveraged dynamically and efficiently to strengthen LLM reasoning, and it suggests that LLMs may reach deeper comprehension when supported by external data.

Looking ahead, the adaptability of this scheme to various LLM and KG combinations without extra training costs suggests a broad applicability across many domains and tasks. Future developments may focus on automating the identification and integration of the most relevant KGs based on the task at hand, further enhancing the efficiency and accuracy of LLM reasoning.

Conclusion

This paper points to a promising direction for research on combining KGs and LLMs. By addressing key challenges such as factual inaccuracy and limited transparency in reasoning, the introduced scheme enhances the practical utility of LLMs and contributes to the theoretical understanding of how structured external knowledge can be integrated with generative AI models.