Reasoning on Graphs: Faithful and Interpretable LLM Reasoning

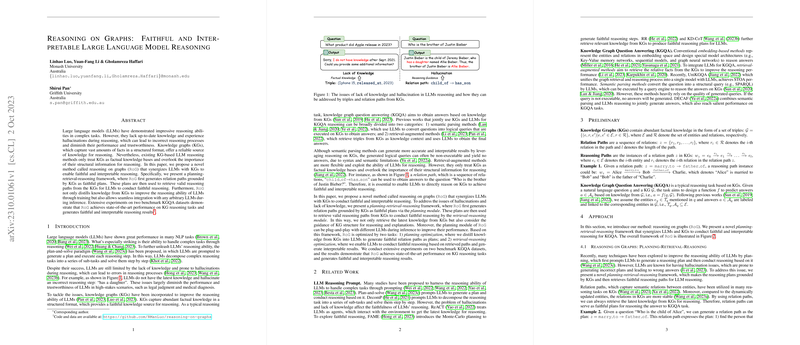

The paper "Reasoning on Graphs: Faithful and Interpretable LLM Reasoning" addresses the limitations of LLMs when applied to reasoning tasks in knowledge-intensive domains. Specifically, it explores a novel approach that combines LLMs with Knowledge Graphs (KGs) to enhance their reasoning capabilities while mitigating common issues such as hallucinations and outdated knowledge.

Key Contributions

The authors propose a method called Reasoning on Graphs (RoG) that integrates LLMs with KGs. The framework consists of three components: planning, retrieval, and reasoning. This design allows RoG to generate interpretable reasoning paths grounded in the structured data of KGs.
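
The planning-retrieval-reasoning loop can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not the authors' implementation: the KG, entity and relation names, and the hard-coded `plan` function are hypothetical stand-ins (RoG uses a fine-tuned LLM to generate plans and an LLM to reason over retrieved paths). Retrieval is written as a breadth-first expansion that follows only the planned relations, which is one straightforward way to ground a relation path in a KG.

```python
from collections import defaultdict, deque

# Hypothetical toy KG as (head, relation, tail) triples; all names are invented.
TRIPLES = [
    ("Alice", "born_in", "Springfield"),
    ("Springfield", "located_in", "USA"),
    ("Alice", "works_for", "Acme"),
    ("Acme", "located_in", "Canada"),
]


def build_index(triples):
    """Map (head, relation) -> tails so each hop only touches matching edges."""
    index = defaultdict(list)
    for head, relation, tail in triples:
        index[(head, relation)].append(tail)
    return index


def plan(question):
    """Hypothetical stand-in for the planning module. In RoG an LLM generates
    relation paths; here a single plan is hard-coded for the toy KG."""
    return [["born_in", "located_in"]]


def retrieve_paths(index, start, relation_path):
    """Breadth-first expansion from `start` that follows only the relations in
    `relation_path`, in order. Returns each complete path as a list of triples."""
    frontier = deque([(start, [])])
    for relation in relation_path:
        next_frontier = deque()
        while frontier:
            entity, path = frontier.popleft()
            for tail in index.get((entity, relation), []):
                next_frontier.append((tail, path + [(entity, relation, tail)]))
        frontier = next_frontier
    return [path for _, path in frontier]


def format_path(path):
    """Render a path as 'A -[r1]-> B -[r2]-> C' so it can serve as an explanation."""
    text = path[0][0]
    for _, relation, tail in path:
        text += f" -[{relation}]-> {tail}"
    return text


def reason(paths):
    """Toy reasoning step: answers are the path endpoints and the paths
    themselves are the explanations. RoG uses an LLM for this step."""
    answers = sorted({path[-1][2] for path in paths if path})
    explanations = [format_path(path) for path in paths if path]
    return answers, explanations


if __name__ == "__main__":
    index = build_index(TRIPLES)
    question = "Which country is Alice's birthplace located in?"
    paths = [p for rp in plan(question) for p in retrieve_paths(index, "Alice", rp)]
    answers, explanations = reason(paths)
    print(answers)       # ['USA']
    print(explanations)  # ['Alice -[born_in]-> Springfield -[located_in]-> USA']
```

The works_for edge shows why the plan matters: Acme's located_in edge is never reached, because the plan starts with born_in, so the answer "Canada" is never produced.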

- Planning Module: In this component, the LLM generates relation paths grounded by the KG, that is, sequences of KG relations (for example, born_in followed by located_in) that serve as faithful plans for reasoning. Anchoring the plan in the KG's structure curbs hallucinations and gives the LLM more reliable context.

- Retrieval-Reasoning Module: After planning, RoG retrieves the reasoning paths in the KG that instantiate the generated relation paths, and the LLM reasons over these retrieved paths to produce answers. This keeps the final reasoning step tied to facts that are actually present in the KG.

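- Optimization Framework: RoG is trained by maximizing the evidence lower bound (ELBO) on the likelihood of the correct answer. The bound decomposes into two instruction-tuning tasks, planning optimization and retrieval-reasoning optimization. The first distills KG knowledge into the LLM so that it generates faithful relation paths; the second teaches the LLM to reason over the retrieved paths, yielding accurate and interpretable answers.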
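
For reference, the standard evidence lower bound for a model that treats relation paths z as a latent plan takes the form below, where q is the question, a the answer, G the KG, and Q(z) a distribution over faithful relation paths. The notation is ours and may differ from the paper's; the bound follows from applying Jensen's inequality to P(a | q, G) = sum over z of P(a | q, z, G) P(z | q).

```latex
\log P_\theta(a \mid q, \mathcal{G})
  \;\ge\;
  \mathbb{E}_{z \sim Q(z)}\big[\log P_\theta(a \mid q, z, \mathcal{G})\big]
  \;-\;
  D_{\mathrm{KL}}\big(Q(z) \,\|\, P_\theta(z \mid q)\big)
```

Read term by term, the KL term is what planning optimization would minimize (pulling the planner toward faithful paths), and the expectation is what retrieval-reasoning optimization would maximize (answering correctly given the retrieved paths).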

Experimental Results

The paper reports extensive experiments on two Knowledge Graph Question Answering (KGQA) benchmarks, WebQSP and CWQ, where RoG achieves higher Hits@1 and F1 scores than prior state-of-the-art methods. The improvements over baselines are especially notable on multi-hop questions, which indicates that the approach handles complex queries well.
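
Hits@1 and F1 are commonly defined for KGQA as follows: Hits@1 checks whether the top-ranked answer is correct, and F1 compares the full predicted answer set with the gold answer set. The sketch below uses these common definitions; the paper's evaluation script may differ in details such as answer normalization, and the function names and toy data are hypothetical.

```python
def hits_at_1(ranked_predictions, gold_answers):
    """Return 1.0 if the top-ranked prediction is a gold answer, else 0.0."""
    if not ranked_predictions:
        return 0.0
    return 1.0 if ranked_predictions[0] in gold_answers else 0.0


def answer_f1(predictions, gold_answers):
    """Set-level F1 between predicted and gold answers."""
    pred, gold = set(predictions), set(gold_answers)
    overlap = len(pred & gold)
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def evaluate(examples):
    """Average both metrics over (ranked_predictions, gold_answers) pairs."""
    n = len(examples)
    hits = sum(hits_at_1(p, g) for p, g in examples) / n
    f1 = sum(answer_f1(p, g) for p, g in examples) / n
    return hits, f1


# Hypothetical toy predictions, not results from the paper.
examples = [
    (["USA", "Canada"], {"USA"}),  # top answer correct; precision 1/2, recall 1
    (["Paris"], {"Lyon"}),         # wrong answer
]
print(evaluate(examples))  # hits@1 = 0.5, mean F1 = (2/3 + 0) / 2, about 0.333
```

The first example shows why both metrics are reported: returning an extra wrong answer leaves Hits@1 untouched but lowers F1.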

Moreover, RoG is plug-and-play at inference time: its planning module can be paired with other LLMs, supplying them with relation paths that improve their reasoning performance across different architectures. This underscores its adaptability and potential for broad applicability in KG-based reasoning tasks.
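
Because the retrieved reasoning paths are plain text, handing them to a different LLM amounts to placing them in that model's prompt. A minimal sketch, assuming a hypothetical `build_reasoning_prompt` helper and template wording of our own (not the paper's prompt); the resulting string would be sent to whichever LLM is used at inference.

```python
def build_reasoning_prompt(question, reasoning_paths):
    """Hypothetical prompt template: retrieved paths are serialized as plain
    text so any instruction-following LLM can consume them unchanged."""
    lines = ["Based on the reasoning paths, answer the question.", "Reasoning paths:"]
    if reasoning_paths:
        lines.extend(f"- {path}" for path in reasoning_paths)
    else:
        lines.append("- (no paths retrieved)")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


prompt = build_reasoning_prompt(
    "Which country is Alice's birthplace located in?",
    ["Alice -[born_in]-> Springfield -[located_in]-> USA"],
)
print(prompt)
```

Keeping the interface as text is what makes this sketch model-agnostic: the downstream LLM is used as-is and only its input changes.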

Implications and Future Work

The integration of LLMs with KGs using RoG opens up new directions for research in AI reasoning. The framework's ability to perform structured reasoning highlights the importance of combining symbolic representations with machine learning models. Such capabilities are crucial in domains that require deep, contextual understanding, such as law and medicine.

Future research may explore scaling this approach to larger, more complex KGs or adapting it to forms of reasoning beyond KGQA. The authors also point to improving the framework's scalability and efficiency, which could lead to more robust real-world applications.

Overall, "Reasoning on Graphs" presents a well-founded approach to advance LLM reasoning through synergy with KGs, paving the way for more faithful and interpretable AI systems.