Introduction

In computer vision, representation learning is a foundational step: it transforms raw data into representations that machines can use to perform tasks such as recognizing objects and understanding scenes. How well this works depends heavily on the diversity and quality of the training data.

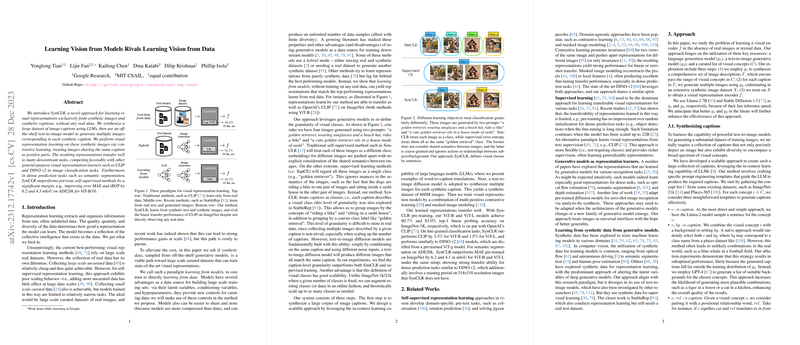

Researchers have historically trained vision models on large, real-world image datasets. This approach has real costs: collecting and curating such data is expensive and complex, and it can be difficult to scale. An emerging alternative is to use synthetic images produced by generative models, which are trained to create new content that resembles their training data. SynCLR explores this strategy: it uses generative models to create large numbers of synthetic images paired with text captions.

SynCLR: Learning from Synthetic Data

SynCLR ties the definition of a visual class to a text caption. It first generates captions with large language models (LLMs), then turns each caption into images with a text-to-image model, producing a large dataset of synthetic images. The key idea is that all images generated from the same caption are treated as belonging to the same visual class. Grouping images that share a concept in this way gives the model a richer training signal than treating each image in isolation.
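One natural way to turn the caption-as-class idea into a training signal is a multi-positive contrastive objective: for each image, the positives are the other images generated from the same caption, and all remaining images in the batch act as negatives. The sketch below is a minimal pure-Python illustration under stated assumptions, not the authors' implementation. The names `cosine` and `multi_positive_contrastive_loss`, the default `temperature=0.1`, and the toy 2-D vectors are all hypothetical choices for this example; in practice the embeddings would come from an image encoder.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def multi_positive_contrastive_loss(
    embeddings: List[Sequence[float]],
    caption_ids: List[int],
    temperature: float = 0.1,  # hypothetical default, chosen for illustration
) -> float:
    """Average multi-positive contrastive loss over a batch (hypothetical helper).

    Images that share a caption id are positives for each other; every other
    image in the batch is a negative.
    """
    n = len(embeddings)
    total = 0.0
    anchors = 0
    for i in range(n):
        others = [j for j in range(n) if j != i]
        positives = [j for j in others if caption_ids[j] == caption_ids[i]]
        if not positives:
            continue  # no other image in the batch shares this caption
        # Predicted distribution q: softmax of scaled similarities over all j != i.
        logits = {j: cosine(embeddings[i], embeddings[j]) / temperature for j in others}
        max_logit = max(logits.values())
        log_norm = max_logit + math.log(
            sum(math.exp(z - max_logit) for z in logits.values())
        )
        # Target distribution p: uniform over the positives.
        # Cross-entropy H(p, q) = -sum_j p_j * log q_j.
        total += -sum(logits[j] - log_norm for j in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


if __name__ == "__main__":
    batch = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    print(multi_positive_contrastive_loss(batch, [0, 0, 1, 1]))  # similar pairs share a caption
    print(multi_positive_contrastive_loss(batch, [0, 1, 0, 1]))  # dissimilar pairs share a caption
```

The first labeling should give a lower loss than the second, because the objective rewards embeddings that cluster by caption. The soft target over several positives is what distinguishes this from a single-positive loss in which each image's only positive is an augmented view of itself.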

Impact on Visual Tasks

Models trained with SynCLR perform strongly across a range of visual tasks. In linear classification, they reach accuracies comparable to those of leading visual representation learners such as CLIP, and they outperform some self-supervised approaches pre-trained on real images. Beyond image classification, SynCLR representations also transfer well to dense prediction tasks such as semantic segmentation on ADE20k, rivaling methods that rely on an additional higher-resolution training phase or an intermediate fine-tuning stage.

Findings and Future Work

SynCLR's success highlights the potential of learning from synthetic data. Because its performance is comparable to that of models trained on real images, synthetic datasets appear to be a cost-effective and scalable resource for training visual representations. Looking ahead, refining how captions are synthesized, exploring different data sampling strategies, and adopting more advanced model architectures may yield further performance gains.

The approach exemplified by SynCLR opens a promising direction for visual representation learning, in which generative models not only reduce dependence on real-world data collection but also make dataset curation more flexible and scalable. These results invite continued exploration of what synthetic data can contribute to training machine learning models.