Overview of "CLIPS: An Enhanced CLIP Framework for Learning with Synthetic Captions"

The paper proposes an enhanced version of the CLIP (Contrastive Language-Image Pre-training) framework that improves vision-language pretraining by training on synthetic captions. Because the web-crawled image-text pairs in existing datasets are often noisy, the authors introduce CLIPS, which leverages synthetic text descriptions generated by Multimodal Large Language Models (MLLMs).

Key Contributions

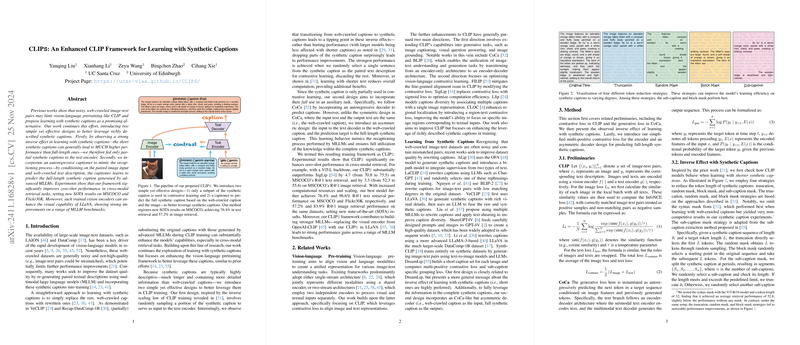

The authors introduce two primary innovations within the CLIPS framework:

- Sub-caption Strategy for Contrastive Learning: The paper identifies an "inverse effect" when full-length synthetic captions are used in CLIP training: shorter synthetic captions outperform their full-length counterparts. Accordingly, only a portion of each synthetic caption is fed to the text encoder for contrastive learning. This adjustment both improves model performance and increases training efficiency, since the text encoder has fewer tokens to process.

- Generative Utilization of Full Synthetic Captions: The authors add an autoregressive model that, conditioned on both the web-crawled text and the corresponding image, predicts the full-length synthetic caption. This auxiliary generative task lets the model exploit all of the information in the synthetic captions, including details omitted from the shortened captions used for contrastive learning, which benefits representation learning.
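The sub-caption idea can be illustrated with a minimal sketch. It assumes a naive punctuation-based sentence splitter, whitespace tokens, and a random contiguous window of at most `max_sentences` sentences truncated to `max_tokens` tokens; `split_sentences` and `sample_sub_caption` are hypothetical names, and the paper's exact sampling rule and token budget may differ.

```python
import random
import re
from typing import List, Optional


def split_sentences(caption: str) -> List[str]:
    """Naive splitter (assumption): break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", caption.strip())
    return [p for p in parts if p]


def sample_sub_caption(
    caption: str,
    max_sentences: int = 2,
    max_tokens: int = 32,
    rng: Optional[random.Random] = None,
) -> str:
    """Hypothetical sub-caption sampler: keep a random contiguous run of up to
    `max_sentences` sentences, then truncate to `max_tokens` whitespace tokens."""
    if rng is None:
        rng = random.Random()
    sentences = split_sentences(caption)
    if not sentences:
        return ""
    n = min(max_sentences, len(sentences))
    start = rng.randrange(len(sentences) - n + 1)  # random window start
    window = " ".join(sentences[start:start + n])
    return " ".join(window.split()[:max_tokens])


full = (
    "A brown dog runs across a grassy field. It carries a red frisbee in its mouth. "
    "Trees line the background under a cloudy sky. A wooden fence is visible on the left."
)
short = sample_sub_caption(full, max_sentences=2, max_tokens=32, rng=random.Random(0))
print(short)  # the text encoder would see only this shorter string
```

Because only the sampled window is tokenized, the text encoder's sequence length, and hence its cost, shrinks with `max_sentences` and `max_tokens`, while the full caption remains available for a separate generative objective.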

Experimental Results

The enhanced framework substantially improves zero-shot cross-modal retrieval on benchmarks such as MSCOCO and Flickr30K, setting new state-of-the-art (SOTA) results. For instance, with a ViT-L backbone, CLIPS outperforms existing methods by a significant margin, achieving an R@1 of 76.4% for text retrieval on MSCOCO.
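To make the R@1 figure concrete: in image-to-text ("text") retrieval, each image queries all test captions, and R@K is the fraction of images for which at least one ground-truth caption appears among the K highest-scoring captions. The sketch below assumes a precomputed image-caption similarity matrix (for CLIP-style models, typically cosine similarities of normalized embeddings); the function name is hypothetical and this is not the paper's evaluation code.

```python
from typing import List, Sequence


def text_retrieval_recall_at_k(
    sim: Sequence[Sequence[float]], caption_owner: Sequence[int], k: int = 1
) -> float:
    """Image-to-text Recall@K.

    sim[i][j] is the similarity between image i and caption j;
    caption_owner[j] is the index of the image that caption j describes.
    An image counts as a hit if any of its own captions is ranked in the top K.
    """
    hits = 0
    for i, row in enumerate(sim):
        ranked: List[int] = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if any(caption_owner[j] == i for j in ranked[:k]):
            hits += 1
    return hits / len(sim)


# Toy example: 3 images with 2 captions each (MSCOCO typically has five per image).
sim = [
    [0.9, 0.8, 0.1, 0.2, 0.3, 0.1],   # image 0: best caption is one of its own
    [0.2, 0.1, 0.7, 0.6, 0.3, 0.2],   # image 1: best caption is one of its own
    [0.5, 0.1, 0.2, 0.3, 0.4, 0.45],  # image 2: best caption belongs to image 0
]
caption_owner = [0, 0, 1, 1, 2, 2]
print(text_retrieval_recall_at_k(sim, caption_owner, k=1))  # 2 of 3 images are hits
print(text_retrieval_recall_at_k(sim, caption_owner, k=2))  # image 2 recovers at rank 2
```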

Moreover, plugging CLIPS-trained visual encoders into LLaVA, an MLLM framework, yields considerable gains across numerous benchmarks, underscoring how well CLIPS's improved visual representations transfer.

Theoretical and Practical Implications

Theoretical Implications:

The CLIPS framework contributes to the theoretical understanding of vision-language modeling by highlighting the advantages of training with shorter textual inputs and synthetic captions. The observed inverse effect is consistent with emerging insights into the scaling behavior of multimodal models, which suggest that concise synthetic data can drive superior performance.

Practical Implications:

Practically, this approach suggests a way to refine pretraining strategies, particularly when data quality and computational resources are constrained. By feeding only partial captions to the text encoder and adding generative modeling of the full captions, vision-language systems can become both more efficient and more effective.
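The two training signals described above, contrastive learning on partial captions and generative modeling of the full caption, can be combined into one objective. The sketch below, in plain Python, assumes a symmetric InfoNCE (CLIP-style) loss over temperature-scaled image/sub-caption logits plus the mean token negative log-likelihood of the full synthetic caption, summed with a hypothetical weight `gen_weight`. All function names are illustrative, the decoder's token log-probabilities are taken as given, and the paper's exact loss weighting may differ.

```python
import math
from typing import List, Sequence


def log_softmax(row: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax of one row."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def clip_contrastive_loss(logits: Sequence[Sequence[float]]) -> float:
    """Symmetric InfoNCE over an N x N matrix of (already temperature-scaled)
    image/sub-caption logits; matched pairs lie on the diagonal."""
    n = len(logits)
    img_to_txt = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    txt_to_img = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (img_to_txt + txt_to_img) / 2


def caption_nll(token_log_probs: Sequence[float]) -> float:
    """Mean negative log-likelihood of the full synthetic caption's tokens, as scored
    by a decoder conditioned on the image and the web-crawled text (assumed given)."""
    return -sum(token_log_probs) / len(token_log_probs)


def total_loss(logits, token_log_probs, gen_weight: float = 1.0) -> float:
    # gen_weight is a hypothetical balancing coefficient, not a value from the paper
    return clip_contrastive_loss(logits) + gen_weight * caption_nll(token_log_probs)


logits = [[2.0, 0.0], [0.0, 2.0]]       # 2 matched image/sub-caption pairs
token_log_probs = [math.log(0.5)] * 4   # decoder assigns p = 0.5 to each of 4 tokens
print(total_loss(logits, token_log_probs))
```

Keeping the two terms separate makes the design choice explicit: the short sub-caption drives alignment, while the generative term is the only place the full-length caption enters training.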

Future Directions

The findings of this paper pave the way for further exploration into the balance between input data length and model performance in the context of multimodal learning. Subsequent research might focus on fine-tuning the generative components of CLIPS or extending its application to other domains where multimodal data is prevalent.

In summary, CLIPS offers compelling evidence for the benefits of harnessing synthetic data and optimizing how it is used in vision-language pretraining. By addressing both the quality and the structure of training data, it provides a robust framework for advancing the state of the art in cross-modal representation learning.