Enhancing Visual-LLMs with Synthetic Data Generation

Introduction

The development of Visual-LLMs (VLMs) has been constrained by the limited availability and high cost of human-labeled image-caption datasets. In this research, we propose a workaround for this bottleneck that combines LLMs and image generation models to produce synthetic image-text pairs efficiently. We show that these pairs can be used for VLM training, yielding a pipeline for synthetic datasets that can potentially be customized for a wide range of applications.

Synthetic Data Creation

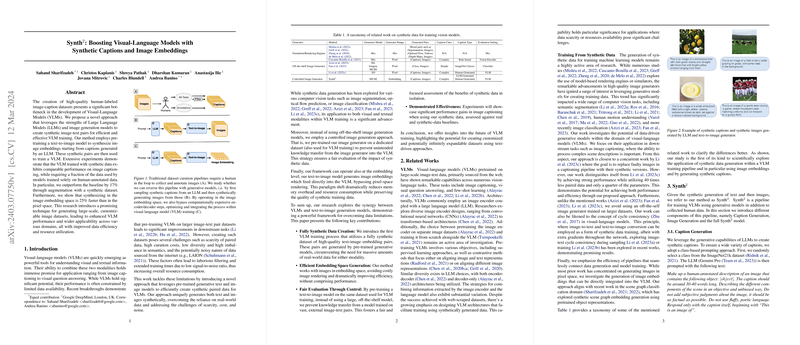

Our method generates both text and images synthetically, removing the dependency on exhaustive real-world data collection. An LLM produces captions from specified classes, and a pre-trained text-to-image model then generates the corresponding image embeddings from those captions. The image generator is deliberately trained only on a specific human-annotated image-caption dataset, so that the VLM is trained in a controlled setting without knowledge transfer from extensive external sources.
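The flow described above (classes, then captions, then image embeddings) can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: `toy_caption_llm` and `toy_text_to_image_embedding` are hypothetical stand-ins for the LLM and the pre-trained text-to-image model, and the caption templates, embedding dimension, and `SyntheticPair` container are assumptions made for the example.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SyntheticPair:
    class_name: str
    caption: str
    image_embedding: List[float]


def toy_caption_llm(class_name: str, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM prompted to caption a given class."""
    templates = [
        "a photo of a {} on a table",
        "a {} seen from above",
        "a close-up of a {} outdoors",
    ]
    return rng.choice(templates).format(class_name)


def toy_text_to_image_embedding(caption: str, dim: int = 8) -> List[float]:
    """Hypothetical stand-in for a text-to-image model that emits an image embedding."""
    rng = random.Random(caption)  # seed with the caption so the output is repeatable
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def generate_synthetic_pairs(
    class_names: List[str],
    captions_per_class: int,
    caption_fn: Callable[[str, random.Random], str],
    image_fn: Callable[[str], List[float]],
    seed: int = 0,
) -> List[SyntheticPair]:
    """For each class, sample captions, then generate one image embedding per caption."""
    rng = random.Random(seed)
    pairs = []
    for name in class_names:
        for _ in range(captions_per_class):
            caption = caption_fn(name, rng)
            pairs.append(SyntheticPair(name, caption, image_fn(caption)))
    return pairs


pairs = generate_synthetic_pairs(
    ["dog", "bicycle"], 2, toy_caption_llm, toy_text_to_image_embedding
)
for p in pairs:
    print(p.class_name, "|", p.caption, "|", len(p.image_embedding))
```

Passing the caption and image generators in as functions keeps the loop independent of any particular model, so a real LLM or image generator could be swapped in without changing the pairing logic.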

Efficiency in Embedding Space

A notable feature of our approach is that it operates in the image embedding space rather than relying on computationally heavy pixel-space rendering. Because the vision encoder of the VLM is aligned with the image generator's VQ-GAN backbone, we bypass the decoding and re-encoding steps, which streamlines and accelerates training without sacrificing performance.
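To make the shortcut concrete, the sketch below contrasts the two paths on toy data. It assumes, for illustration only, that the image generator emits discrete token indices that are looked up in a shared VQ codebook; the codebook size, embedding dimension, and the `toy_decode` and `toy_encode` functions are hypothetical, and the toy round trip is lossless where a real decoder and encoder would not be.

```python
import random
from typing import Callable, List

CODEBOOK_SIZE = 16  # assumed number of codebook entries
EMBED_DIM = 4       # assumed embedding dimension
GRID_TOKENS = 9     # assumed 3x3 token grid per image

calls = {"decode": 0, "encode": 0}  # counts how often each costly stage runs


def make_codebook(size: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Build a random codebook standing in for a trained VQ codebook."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(size)]


def tokens_to_embeddings(token_ids: List[int], codebook: List[List[float]]) -> List[List[float]]:
    """Embedding-space path: look up each generated token's codebook vector."""
    return [codebook[t] for t in token_ids]


def toy_decode(embeddings: List[List[float]]) -> List[List[float]]:
    """Pretend decoder: expand each embedding value into four 'pixels'."""
    calls["decode"] += 1
    return [[v for v in e for _ in range(4)] for e in embeddings]


def toy_encode(image: List[List[float]]) -> List[List[float]]:
    """Pretend encoder: collapse each run of four 'pixels' back into one value."""
    calls["encode"] += 1
    return [patch[::4] for patch in image]


def pixel_space_path(
    token_ids: List[int],
    codebook: List[List[float]],
    decode: Callable[[List[List[float]]], List[List[float]]],
    encode: Callable[[List[List[float]]], List[List[float]]],
) -> List[List[float]]:
    """Pixel-space path: decode tokens to an image, then re-encode it for the VLM."""
    return encode(decode(tokens_to_embeddings(token_ids, codebook)))


codebook = make_codebook(CODEBOOK_SIZE, EMBED_DIM)
rng = random.Random(1)
token_ids = [rng.randrange(CODEBOOK_SIZE) for _ in range(GRID_TOKENS)]

direct = tokens_to_embeddings(token_ids, codebook)  # no decode or encode call
via_pixels = pixel_space_path(token_ids, codebook, toy_decode, toy_encode)

print(len(direct), len(direct[0]))
print(calls)
```

The direct path is a table lookup, while the pixel-space path adds one decode and one encode per image; in a real system those two stages are where the rendering cost would sit.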

Evaluation and Performance

The efficacy of the proposed method is supported by comprehensive experiments. A VLM trained on a combination of human-annotated and synthetic data performs considerably better than models trained exclusively on human-annotated data. Specifically, we observed a 17% performance improvement from adding a synthetic dataset, which indicates that synthetic data can effectively augment VLM training.
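One simple way to train on a combination of the two sources is to draw every batch from both pools. The sketch below is an assumption about how such mixing could be done, not the procedure used in the experiments: the `mixed_batches` helper, the `synthetic_fraction` parameter, and the example records are all hypothetical, and the text does not state a mixing ratio.

```python
import random
from typing import List, Sequence, Tuple


def mixed_batches(
    human: Sequence[str],
    synthetic: Sequence[str],
    batch_size: int,
    synthetic_fraction: float,
    num_batches: int,
    seed: int = 0,
) -> List[List[Tuple[str, str]]]:
    """Build batches with a fixed share of synthetic examples (sampled with replacement)."""
    rng = random.Random(seed)
    n_syn = round(batch_size * synthetic_fraction)
    n_hum = batch_size - n_syn
    batches = []
    for _ in range(num_batches):
        batch = [("human", x) for x in rng.choices(human, k=n_hum)]
        batch += [("synthetic", x) for x in rng.choices(synthetic, k=n_syn)]
        rng.shuffle(batch)  # avoid a fixed human-then-synthetic order inside the batch
        batches.append(batch)
    return batches


# Placeholder records; in practice these would be image-text training examples.
human_pairs = [f"human_{i}" for i in range(10)]
synthetic_pairs = [f"synthetic_{i}" for i in range(10)]

batches = mixed_batches(
    human_pairs, synthetic_pairs, batch_size=8, synthetic_fraction=0.5, num_batches=3
)
for batch in batches:
    n_synthetic = sum(1 for source, _ in batch if source == "synthetic")
    print(len(batch), n_synthetic)
```

Fixing the share per batch, rather than concatenating the datasets, keeps the balance between sources constant even when one pool is much larger than the other.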

Theoretical and Practical Implications

This research addresses practical limitations in data availability and resource consumption, and it also opens new directions for VLM training methodology. A workflow that integrates synthetic data generation makes it possible to create large-scale, customized image-text pairs, which can improve the model's learning and its applicability across domains.

Future Prospects in AI

The implications of this work extend beyond immediate applications in VLM training: the framework might accelerate progress in other areas of AI. Looking ahead, it invites further study of how synthetic data creation scales, how bias in generative models can be mitigated, and how diverse, domain-specific text sources can be used. This research is a step toward using generative AI to train complex models with reduced dependency on large-scale, real-world datasets.

Conclusion

In summary, this paper introduced an approach for improving the efficiency and effectiveness of VLM training through synthetic data generation. By leveraging the generative capacities of LLMs and image generation models, it offers a viable solution to data scarcity, high curation costs, and computational inefficiency. The resulting performance gains and the promise of customizable, scalable datasets highlight the potential of this method for AI research and applications.