Exploring the Evolution of Reasoning with Foundation Models

Introduction to Foundation Models in Reasoning

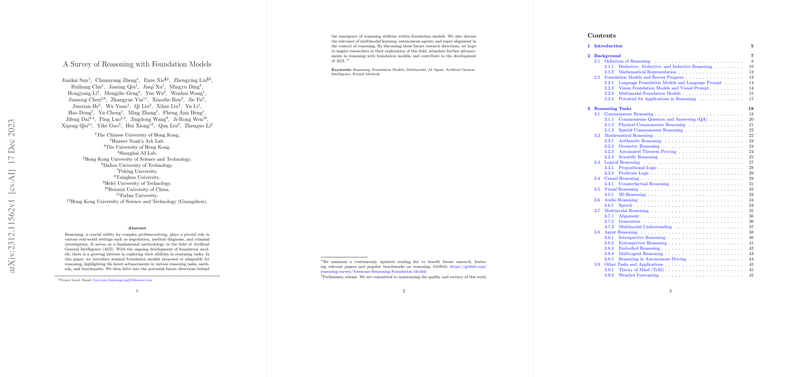

Recent years have seen a surge of interest in foundation models (FMs) across many areas of artificial intelligence. Much of that attention has gone to improving the reasoning capabilities of large language models (LLMs). Studying reasoning tasks reveals how well these models handle complex problem-solving and clarifies where they can be applied in real-world settings. Because foundation models are pre-trained extensively on diverse datasets, they offer a promising route to the reasoning skills needed for tasks ranging from natural language understanding and question answering to theorem proving.

Significance of Reasoning in AI

Reasoning underpins an AI system's ability to process, analyze, and infer knowledge from complex inputs. It spans a range of tasks, including commonsense reasoning, mathematical problem-solving, logical deduction, and multimodal reasoning. Each category presents its own challenges and calls for distinct methods of processing and output generation.

Advancements in Foundation Model Techniques for Reasoning

Data and Architecture

A key driver of FM progress in reasoning is the diversity and volume of data used for pre-training. Text from varied sources, including academic papers and code repositories, enriches the models' knowledge base. At the same time, advances in network architectures, from encoder-decoder frameworks to specialized attention mechanisms, have contributed significantly to stronger reasoning capabilities.

Fine-tuning for Specialization

Fine-tuning on specialized datasets, with techniques such as adapter tuning and low-rank adaptation (LoRA), has proven effective in tailoring FMs to specific reasoning tasks. These strategies aim to improve task performance while keeping training efficient and computational costs low.
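
To make the idea of low-rank adaptation concrete, here is a minimal sketch in plain Python. It assumes the common formulation in which a frozen weight matrix W is augmented by a trainable low-rank product B·A, scaled by alpha / rank. The class name `LoRALinear`, its parameters, and the initialization values are illustrative assumptions, not the API of any particular library, and no training loop is shown.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """Hypothetical linear layer with a frozen weight and a low-rank update.

    Effective weight: W + (alpha / rank) * B @ A
    Only A (rank x d_in) and B (d_out x rank) would be trained.
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight            # frozen pre-trained weight, d_out x d_in
        self.scale = alpha / rank       # assumed scaling convention
        # A starts small and random, B starts at zero, so the update is
        # initially zero and the layer behaves like the frozen model.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def effective_weight(self):
        delta = matmul(self.B, self.A)  # d_out x d_in low-rank update
        return [
            [w + self.scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(self.weight, delta)
        ]

    def forward(self, x):
        """Apply the adapted layer to one input vector of length d_in."""
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.effective_weight()]

    def trainable_parameter_count(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

The efficiency gain comes from the parameter count: for a d_out x d_in weight, the low-rank factors hold rank × (d_in + d_out) trainable values instead of d_out × d_in, which is far smaller when the rank is much lower than either dimension.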

Alignment Training for Human-like Reasoning

To bring FMs closer to human reasoning patterns, researchers are exploring alignment training techniques that integrate human preferences and feedback into the training process. This work addresses the challenge of matching model outputs to human expectations and ethical standards.
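
One way human preferences can enter training is through a pairwise loss over responses that annotators have ranked. The sketch below assumes a Bradley-Terry style objective, as commonly used when training reward models from human feedback; the text above does not specify a particular method, so treat this as one illustrative option. The function names and the scalar reward inputs are hypothetical.

```python
import math


def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Loss for one human comparison: -log(sigmoid(r_chosen - r_rejected)).

    The loss is small when the model scores the human-preferred response
    higher than the rejected one, and large when the order is reversed.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_preference_loss(pairs):
    """Average the pairwise loss over (reward_chosen, reward_rejected) tuples."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)
```

Minimizing this quantity pushes the scoring model to agree with human rankings; the resulting scores can then guide further training of the foundation model.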

Potential and Challenges

While foundation models hold vast potential for revolutionizing reasoning tasks in AI, several challenges and limitations persist. Concerns over model interpretability, context length limitations, and the need for enhanced multimodal learning capabilities underline the importance of ongoing research and development in this domain.

Future Directions

The road ahead for foundation models in reasoning calls for a focus on ensuring safety and privacy, advancing interpretability and transparency, and exploring autonomous language agents capable of complex reasoning in dynamic environments. Furthermore, reasoning for science and achieving superintelligence alignment remain pivotal areas for future exploration.

In conclusion, the journey of foundation models in reasoning is marked by rapid advancements, promising potential, and looming challenges. As we continue to push the boundaries of what these models can achieve, a balanced approach that considers the implications and limitations of this technology will be crucial for its responsible and impactful development in AI.