Mathematical Reasoning in the Era of Multimodal LLMs: A Survey

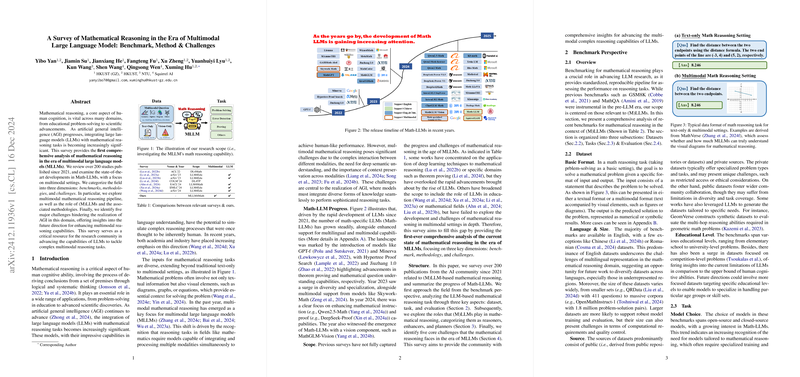

This survey examines mathematical reasoning in multimodal LLMs (MLLMs). It synthesizes over 200 studies on progress and open problems in the area and organizes them along three dimensions: benchmarks, methodologies, and challenges. Each dimension is used to assess the current capabilities and limitations of MLLMs on the path toward artificial general intelligence (AGI).

Overview of Mathematical Reasoning with MLLMs

Mathematical reasoning, an integral facet of human cognition, is pivotal in domains such as education and scientific discovery. As research toward AGI progresses, the ability of LLMs to perform mathematical reasoning is being rigorously explored. This survey distinguishes itself by focusing on multimodal settings, highlighting the potential of MLLMs to process and integrate inputs beyond traditional text, including visual representations such as graphs and diagrams.

The authors of this survey categorize the field into three primary dimensions: benchmarks, methodologies, and challenges, providing a structured lens through which to view the current landscape of MLLM research in mathematical reasoning.

Benchmark Analysis

The benchmark dimension of the survey addresses the datasets, tasks, and evaluation methodologies that form the testing ground for MLLMs. The paper notes a variety of datasets that test mathematical reasoning across educational and competitive levels, from elementary to university level and competition-based problems. Text-only benchmarks such as GSM8K and MATH are highlighted for their earlier role, while newer multimodal benchmarks such as MathVista and OlympiadBench have been introduced to evaluate the extended capabilities of MLLMs.

Evaluation on these benchmarks falls into two styles: discriminative and generative. Discriminative evaluation measures the correctness of outputs, often as classification accuracy, whereas generative evaluation assesses the quality and fidelity of the solutions that MLLMs generate, including how they handle complex reasoning challenges.
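The two evaluation styles can be illustrated with a minimal Python sketch. It is not taken from the survey or from any benchmark's official scorer: the function names are hypothetical, the discriminative score is plain label accuracy, and the generative check is reduced to comparing final answers, which is only one simple proxy for solution quality.

```python
from fractions import Fraction


def classification_accuracy(predicted, gold):
    """Discriminative-style score: fraction of labels that match exactly."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        return 0.0
    correct = sum(p == g for p, g in zip(predicted, gold))
    return correct / len(gold)


def parse_number(text):
    """Parse strings such as '3/4' or '0.75' into a Fraction, else None."""
    try:
        return Fraction(text.strip())
    except (ValueError, ZeroDivisionError):
        return None


def final_answer_match(generated, reference):
    """Generative-style check (assumed proxy): compare final answers.

    Numeric answers are compared exactly as fractions; anything else
    falls back to a case-insensitive string comparison.
    """
    g, r = parse_number(generated), parse_number(reference)
    if g is not None and r is not None:
        return g == r
    return generated.strip().lower() == reference.strip().lower()


if __name__ == "__main__":
    print(classification_accuracy(["A", "B", "C", "D"], ["A", "B", "D", "D"]))
    print(final_answer_match("0.75", "3/4"))
```

A real generative evaluation would usually also inspect intermediate reasoning steps; the final-answer comparison here is a deliberate simplification.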

Methodological Perspectives

The methodology dimension explores how MLLMs can be employed in the role of a reasoner, enhancer, or planner. As reasoners, MLLMs are fine-tuned on task-specific datasets to simulate human-like reasoning. As enhancers, MLLMs augment data, enriching a model's training set and improving its performance on mathematical tasks. As planners, MLLMs orchestrate the solution of complex problems, coordinating with other models or tools to enhance problem-solving through collaborative interactions.
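The planner role can be pictured with a small Python sketch. Everything in it is an assumption made for illustration: `stub_planner` stands in for the MLLM and returns a fixed plan, `calculator` is a toy tool, and the `(tool, input)` step format with `{0}`-style references to earlier results is invented here rather than drawn from the survey.

```python
import ast
import operator

# Arithmetic operators the toy calculator tool accepts.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}


def calculator(expression):
    """Evaluate +, -, *, /, ** over numeric literals without using eval()."""
    def ev(node):
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError("unsupported expression")
    return ev(ast.parse(expression, mode="eval").body)


def stub_planner(problem):
    """Hypothetical stand-in for an MLLM planner.

    Returns a fixed plan for 'area of a 3 by 4 rectangle, then doubled'.
    """
    return [("calculator", "3 * 4"), ("calculator", "{0} * 2")]


def run_plan(steps, tools):
    """Execute steps in order; '{i}' in a step input refers to result i."""
    results = []
    for tool_name, template in steps:
        if tool_name not in tools:
            raise KeyError(f"unknown tool: {tool_name}")
        results.append(tools[tool_name](template.format(*results)))
    return results


if __name__ == "__main__":
    plan = stub_planner("Area of a 3 by 4 rectangle, doubled?")
    print(run_plan(plan, {"calculator": calculator}))
```

Keeping the plan as plain data separates deciding what to do from executing it, so the stub could in principle be swapped for a model call without touching `run_plan`.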

This multifaceted approach highlights the versatility of MLLMs in engaging with mathematical tasks at various levels of complexity. The paper delineates the strengths and limitations of these methodologies, offering insights into optimization strategies for enhancing MLLMs' reasoning performance.

Challenges and Future Directions

Despite significant advances, several challenges still limit MLLMs in mathematical reasoning and, by extension, progress toward AGI. The survey identifies five primary challenges:

- Insufficient Visual Reasoning: Many mathematical problems require extracting and reasoning over complex visual content, which current models find challenging.

- Reasoning Beyond Text and Vision: Real-world mathematical reasoning often necessitates engagement with multiple modalities, such as audio or interactive simulations, which are underrepresented in current benchmarks and methodologies.

- Limited Domain Generalization: Models that excel in specific domains (e.g., algebra, geometry) often do not generalize well across other domains.

- Error Feedback Limitations: Current MLLMs lack robust mechanisms for detecting and correcting various types of reasoning errors.

- Integration with Real-world Educational Needs: There is a gap in aligning MLLMs with the non-linear, iterative nature of human problem-solving often found in educational settings.
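The domain-generalization challenge in the list above can be made concrete by breaking accuracy down per domain. The Python sketch below assumes a hypothetical record format of `(domain, is_correct)` pairs and uses the spread between the best and worst domain as a rough gap measure; neither choice comes from the survey.

```python
from collections import defaultdict


def accuracy_by_domain(records):
    """Map each domain to its accuracy, given (domain, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for domain, is_correct in records:
        totals[domain] += 1
        correct[domain] += int(bool(is_correct))
    return {domain: correct[domain] / totals[domain] for domain in totals}


def generalization_gap(per_domain):
    """Difference between the best and worst per-domain accuracy."""
    if not per_domain:
        return 0.0
    values = list(per_domain.values())
    return max(values) - min(values)


if __name__ == "__main__":
    # Made-up illustrative records, not results from any model.
    records = [
        ("algebra", True), ("algebra", True),
        ("geometry", True), ("geometry", False),
    ]
    per_domain = accuracy_by_domain(records)
    print(per_domain, generalization_gap(per_domain))
```

A single aggregate accuracy would hide exactly the kind of cross-domain unevenness this challenge describes, which is why the breakdown is reported per domain.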

Addressing these challenges necessitates a concerted effort towards developing more sophisticated models capable of integrating diverse forms of input, fostering domain generalization, and providing meaningful error feedback. The paper suggests that future research should aim to build more comprehensive multimodal datasets, improve model architectures for cross-domain reasoning, and integrate real-world problem-solving workflows.

Conclusion

In summary, this survey provides a critical resource for the research community by documenting the current state and future directions for MLLMs in mathematical reasoning. Through an in-depth analysis of benchmarks, methodologies, and challenges, it offers a roadmap for enhancing the capabilities of LLMs to tackle complex reasoning tasks. The insights garnered from this survey could significantly influence the development of AGI systems with improved mathematical reasoning capabilities, ultimately contributing to a wider array of applications in education, science, and beyond.