Unified Vision-Language Pre-Training for Image Captioning and VQA: An Expert Overview

Introduction

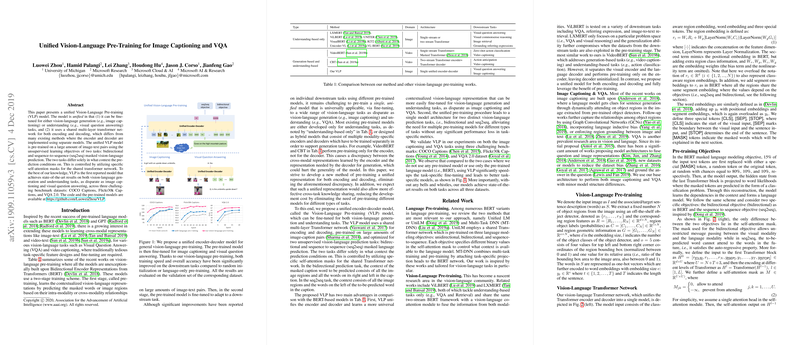

The paper "Unified Vision-Language Pre-Training for Image Captioning and VQA" by Luowei Zhou et al. introduces a Vision-Language Pre-training (VLP) model that handles both vision-language generation and vision-language understanding tasks, represented here by image captioning and Visual Question Answering (VQA). The work builds on the success of pre-trained language models such as BERT and GPT and extends their pre-training principles to a single model that accepts both image and text inputs.

Model Architecture and Pre-training Strategy

The core innovation of this research is a unified encoder-decoder built on the Transformer. Earlier models implement the encoder and decoder as separate networks. VLP instead uses a single shared multi-layer Transformer for both roles. Because the same weights serve understanding and generation, one pre-trained model can be fine-tuned directly for either kind of task.
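
A single self-attention layer shows how one network can act as both encoder and decoder. The NumPy code below is a minimal, hypothetical illustration rather than VLP's implementation, which stacks many multi-head layers with feed-forward sublayers. The function name `masked_self_attention` and the toy sizes are assumptions. Both calls use the same weight matrices, and only the attention mask decides whether a position may look ahead.

```python
import numpy as np

def masked_self_attention(x, w_q, w_k, w_v, mask):
    """Single-head scaled dot-product self-attention (illustrative sketch).

    x:    (seq_len, d) input vectors
    mask: (seq_len, seq_len); mask[i, j] = 1 lets position i attend to j.
          Every row must allow at least one position.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Blocked pairs get -inf, so the softmax assigns them zero weight.
    scores = np.where(mask.astype(bool), scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v

rng = np.random.default_rng(0)
seq_len, d = 5, 8
x = rng.normal(size=(seq_len, d))
# One set of weights, shared by both modes (scaled init keeps softmax well spread).
w_q, w_k, w_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))

full = np.ones((seq_len, seq_len))             # encoder-style: see everything
causal = np.tril(np.ones((seq_len, seq_len)))  # decoder-style: see only the past

enc_out = masked_self_attention(x, w_q, w_k, w_v, full)
dec_out = masked_self_attention(x, w_q, w_k, w_v, causal)
```

Because the weights are shared, the only difference between encoder-style and decoder-style behavior is the mask. As a check, perturbing the last input vector changes the earlier rows of `enc_out`. It leaves the earlier rows of `dec_out` unchanged, because under the causal mask no earlier position can attend to the last one.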

Pre-training uses a large corpus of image-text pairs and two unsupervised objectives: bidirectional and sequence-to-sequence (seq2seq) masked vision-language prediction. The two objectives share the same network and differ only in the self-attention mask. The mask controls which tokens each position may attend to when predicting a masked word. In the bidirectional objective, a masked caption word is predicted from all image regions and all surrounding caption words. In the seq2seq objective, it is predicted from all image regions but only from the caption words to its left.
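
The two masks can be sketched for a toy sequence laid out as image regions followed by caption words. This is a simplified illustration. The function `vlp_attention_masks` is hypothetical, and the sketch omits the special tokens that the full model places in the sequence (such as [CLS] and [SEP]). A 1 at `mask[i, j]` means position `i` may attend to position `j`.

```python
import numpy as np

def vlp_attention_masks(num_regions, num_words):
    """Return (bidirectional, seq2seq) masks for a sequence laid out as
    [region_1 .. region_N, word_1 .. word_T] (hypothetical helper)."""
    n = num_regions + num_words
    # Bidirectional objective: every position sees every position.
    bidirectional = np.ones((n, n), dtype=np.int8)

    seq2seq = np.zeros((n, n), dtype=np.int8)
    # Image regions attend to each other but never to caption words.
    seq2seq[:num_regions, :num_regions] = 1
    # Caption words see every image region ...
    seq2seq[num_regions:, :num_regions] = 1
    # ... and only caption words at or before their own position (left context).
    seq2seq[num_regions:, num_regions:] = np.tril(
        np.ones((num_words, num_words), dtype=np.int8)
    )
    return bidirectional, seq2seq

bi, s2s = vlp_attention_masks(num_regions=2, num_words=3)
print(s2s)
# [[1 1 0 0 0]
#  [1 1 0 0 0]
#  [1 1 1 0 0]
#  [1 1 1 1 0]
#  [1 1 1 1 1]]
```

In the seq2seq mask the image regions attend only to one another, and the left-to-right constraint depends on this. If a region could attend to a later caption word, then in a multi-layer network an earlier word that attends to that region would indirectly see its own future.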

Evaluation and Benchmarking

The authors evaluate VLP on three benchmarks: COCO Captions, Flickr30k Captions, and VQA 2.0. The model reports state-of-the-art results on all three, covering both image captioning and VQA.

- COCO Captions: Achieved a BLEU@4 score of 36.5.

- Flickr30k Captions: Notably, the model achieved a CIDEr score of 68.5 with seq2seq pre-training only.

- VQA 2.0: Reached an overall accuracy of 72.5%, with strong performance across question types.
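
The VQA 2.0 figure is an accuracy that gives partial credit. Each question has 10 human answers, and a prediction counts as fully correct when at least 3 annotators gave it. The sketch below implements that metric for a single question. It includes the averaging over the ten leave-one-out subsets of 9 answers used by the official evaluation. It assumes answers are already normalized and compares them by exact string match, whereas the official script also cleans up punctuation, number words, and articles. The function name `vqa_accuracy` is hypothetical.

```python
from itertools import combinations

def vqa_accuracy(predicted, human_answers):
    """Per-question VQA accuracy (sketch; assumes pre-normalized answers).

    For each subset of len(human_answers) - 1 answers, score
    min(#matches / 3, 1), then average over all subsets.
    """
    n = len(human_answers)
    scores = []
    for subset in combinations(range(n), n - 1):
        matches = sum(1 for i in subset if human_answers[i] == predicted)
        scores.append(min(matches / 3.0, 1.0))
    return sum(scores) / len(scores)

answers = ["2", "2"] + ["3"] * 8
print(round(vqa_accuracy("2", answers), 4))  # 0.6
```

The dataset-level number is the mean of this per-question score over all questions.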

Comparative Analysis

The paper situates VLP among contemporary vision-language pre-training models such as ViLBERT, LXMERT, and VideoBERT. Those models pre-train an encoder for understanding tasks, so a decoder for generation tasks must be trained separately. The unified model offers two main advantages over them:

- Unified Representation: Because a single network serves as both encoder and decoder, VLP learns one universal vision-language representation that can be fine-tuned for generation and understanding tasks alike.

- Cross-task Knowledge Sharing: Because both pre-training objectives update the same parameters, knowledge learned for one kind of task carries over to the other. Only one model has to be pre-trained, which can reduce the development cost and overhead of training multiple models.
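
The unified-representation argument can be sketched as one shared network whose hidden states feed lightweight output layers. Everything below is a toy, hypothetical stand-in. `shared_encoder` is a single random projection rather than the pre-trained Transformer, and the sizes are arbitrary. The VQA head (an element-wise product of the [CLS] and [SEP] states passed through a two-layer MLP with a sigmoid per answer) is an assumed design chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy sizes for illustration only.
D_MODEL, VOCAB_SIZE, NUM_ANSWERS = 16, 50, 10

# Stand-in for the shared pre-trained Transformer: a fixed random projection.
W_SHARED = rng.normal(size=(D_MODEL, D_MODEL))

def shared_encoder(features):
    """(seq_len, D_MODEL) inputs -> (seq_len, D_MODEL) hidden states."""
    return np.tanh(features @ W_SHARED)

# Output heads on top of the shared hidden states (hypothetical parameters).
W_WORD = rng.normal(size=(D_MODEL, VOCAB_SIZE))   # captioning: word prediction
W_MLP1 = rng.normal(size=(D_MODEL, D_MODEL))      # VQA: assumed two-layer MLP
W_MLP2 = rng.normal(size=(D_MODEL, NUM_ANSWERS))

def caption_logits(hidden):
    """Word scores at every position (for masked or next-word prediction)."""
    return hidden @ W_WORD

def vqa_scores(hidden, cls_pos, sep_pos):
    """Per-answer probabilities: [CLS] * [SEP] states (assumed), MLP, sigmoid."""
    joint = hidden[cls_pos] * hidden[sep_pos]
    h = np.maximum(joint @ W_MLP1, 0.0)  # ReLU
    return 1.0 / (1.0 + np.exp(-(h @ W_MLP2)))

seq = rng.normal(size=(8, D_MODEL))  # e.g. [CLS], regions, [SEP], words
hidden = shared_encoder(seq)         # computed once, reused by both heads
print(caption_logits(hidden).shape)                     # (8, 50)
print(vqa_scores(hidden, cls_pos=0, sep_pos=4).shape)   # (10,)
```

In this sketch the two tasks share every parameter except their output heads, so one pre-trained network serves both. During real fine-tuning the shared weights would also be updated.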

Practical and Theoretical Implications

In practice, a single pre-trained VLP model replaces separately pre-trained models for each task. This reduces the computational cost and complexity of pre-training and fine-tuning disparate vision-language models. The paper also reports that pre-training speeds up convergence during fine-tuning and improves downstream accuracy.

From a theoretical perspective, combining bidirectional and seq2seq objectives in one network challenges the conventional separation of understanding and generation tasks. The results suggest that a single general model can adapt to a wide range of multimodal tasks without significant performance trade-offs.

Future Directions

Several avenues for future work remain open. Extending the model to more complex multimodal tasks, such as visual dialogue and text-image grounding, would test its generality further. Investigating multi-task fine-tuning may also reveal ways to mitigate interference between objectives in a unified framework and improve generalization.

Conclusion

In summary, this work presents a unified Transformer network that bridges vision-language generation and understanding. By achieving state-of-the-art results across diverse benchmarks, VLP demonstrates the value of a shared representation and pre-training method, which could serve as a foundation for future advances in multimodal learning.