Improving In-Context Learning in Diffusion Models with Visual Context-Modulated Prompts

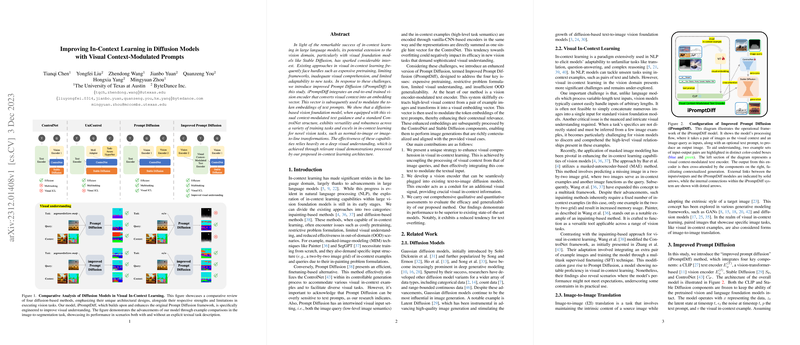

This paper, authored by Tianqi Chen et al., presents a novel approach to enhancing in-context learning for diffusion models within the vision domain. The method, named Improved Prompt Diffusion (iPromptDiff), significantly advances the capabilities of visual foundation models like Stable Diffusion by addressing several key challenges inherent in visual in-context learning. These include high pretraining costs, restrictive problem formulations, limited visual comprehension, and difficulties in adapting to new tasks.

Key Contributions

The primary contributions of this research are as follows:

- Decoupled Processing for Visual Context: The authors address the inefficiency in previous methods that encode image queries and visual examples in the same manner. In contrast, iPromptDiff leverages a vision-transformer-based (ViT) encoder to extract high-level visual contexts from example images separately from the image query processing. This enables a more nuanced and sophisticated understanding of the tasks at hand.

- Visual Context-Modulated Text Prompts: By introducing a special visual context placeholder token, iPromptDiff dynamically incorporates visual context into text embeddings, effectively fusing linguistic and visual guidance. This approach mitigates information conflicts and enhances the contextual relevance of text prompts in guiding image generation.

- Enhanced Versatility and Robustness: The method demonstrates strong performance across a variety of vision tasks, including novel tasks that the model was not explicitly trained for. This is achieved through effective multitask training and strategic use of visual-context modulation.
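The placeholder-token idea in the second contribution can be illustrated with a small sketch. It assumes one plausible reading of the summary: the text embedding at the placeholder position is overwritten by a visual context vector produced separately from the example images. The placeholder string, the function name `modulate_prompt`, and the toy two-dimensional embeddings are all hypothetical and are not taken from the paper.

```python
from typing import List, Sequence

# Hypothetical placeholder string, chosen only for illustration.
PLACEHOLDER = "<visual-context>"


def modulate_prompt(
    tokens: Sequence[str],
    token_embeddings: Sequence[Sequence[float]],
    visual_context: Sequence[float],
    placeholder: str = PLACEHOLDER,
) -> List[List[float]]:
    """Return new embeddings where each placeholder position holds the visual context.

    Assumption: modulation is a plain substitution at the placeholder position.
    The inputs are left unmodified.
    """
    if len(tokens) != len(token_embeddings):
        raise ValueError("tokens and token_embeddings must have the same length")

    modulated: List[List[float]] = []
    for token, embedding in zip(tokens, token_embeddings):
        if token == placeholder:
            if len(visual_context) != len(embedding):
                raise ValueError("visual context must match the embedding width")
            modulated.append(list(visual_context))
        else:
            modulated.append(list(embedding))
    return modulated


# Toy usage: a three-token prompt with 2-dimensional embeddings.
prompt_tokens = ["a", PLACEHOLDER, "photo"]
prompt_embeddings = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
context_vector = [0.5, 0.5]  # stands in for the output of a separate image encoder
fused = modulate_prompt(prompt_tokens, prompt_embeddings, context_vector)
```

Keeping the visual context as a separate input mirrors the decoupling described in the first contribution: the example images are summarized by one encoder, and only the resulting vector enters the text pathway.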

Experimental Validation

The authors provide extensive qualitative and quantitative evaluations to demonstrate the efficacy of iPromptDiff.

In-Domain Map-to-Image Tasks

For in-domain tasks such as depth-to-image, HED-to-image, and segmentation-to-image, both iPromptDiff variants, one trained on the Instruct Pixel-to-Pixel dataset (iPromptDiff-IP2P) and one trained on the larger MultiGen-20M dataset, outperform the compared baselines. The FID table in the paper reports significantly lower Fréchet Inception Distance (FID) scores for the iPromptDiff variants than for Prompt Diffusion and ControlNet, indicating superior image generation quality.
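For readers unfamiliar with the metric, FID is conventionally defined as the Fréchet distance between two Gaussians fitted to Inception-network features of real and generated images. The symbols below are introduced here for illustration and are not taken from the paper: $\mu_r, \Sigma_r$ are the feature mean and covariance for real images, and $\mu_g, \Sigma_g$ are those for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

The score is zero when the two fitted Gaussians coincide, and lower values indicate that generated images are statistically closer to real ones.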

Out-of-Domain Map-to-Image Tasks

In novel tasks like normal-to-image and canny-to-image, iPromptDiff maintains robust performance even when text prompts are absent (denoted as 'n/a'), unlike Prompt Diffusion, which struggles under these conditions. This finding underscores the model's reliance on visual context, making it more adaptable to unseen tasks.

Image-to-Map Tasks

The reverse transformation tasks (image-to-depth, image-to-HED, and image-to-segmentation) are more challenging, both because the mapping is less straightforward and because visual foundation models are not pretrained on condition-map data. iPromptDiff matches or exceeds the performance of specialized models like ControlNet, especially when text prompts are omitted during evaluation, which further supports its versatility.

Implications and Future Directions

The iPromptDiff framework offers substantial improvements in both theoretical and practical aspects of visual in-context learning.

Theoretical Implications:

- The decoupled processing and dynamic integration of visual context represent significant advancements in the methodology of visual understanding.

- The advantage of vision transformers over CNN-based encoders in handling high-level semantic content points to their potential for more sophisticated visual tasks.

Practical Implications:

- The ability to perform well in novel tasks without extensive retraining suggests practical applications where flexibility and adaptability are vital.

- The potential to enhance foundational vision models like Stable Diffusion means broader and more effective deployment in various image generation and transformation tasks.

Future Developments:

- Extending the work to multiple visual in-context examples could significantly improve model performance. Techniques such as averaging the example embeddings or employing a Perceiver Resampler could be explored.

- Investigating the impact of intelligent example selection mechanisms on in-context learning performance could offer substantial improvements.
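The embedding-averaging option mentioned for multiple in-context examples can be sketched as an element-wise mean over per-example context vectors. This is only an illustration of that suggestion under simple assumptions: each example is already encoded as a fixed-length vector, and the function name `average_context_embeddings` is hypothetical.

```python
from typing import List, Sequence


def average_context_embeddings(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of several equally sized context vectors."""
    if not embeddings:
        raise ValueError("at least one embedding is required")

    width = len(embeddings[0])
    if any(len(vector) != width for vector in embeddings):
        raise ValueError("all embeddings must have the same length")

    count = len(embeddings)
    return [sum(vector[i] for vector in embeddings) / count for i in range(width)]


# Toy usage: two example embeddings collapse into a single context vector.
pooled = average_context_embeddings([[1.0, 2.0], [3.0, 4.0]])
```

Averaging keeps the context a single vector regardless of how many examples are supplied, at the cost of treating every example as equally informative; a learned resampler would instead weight them adaptively.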

Conclusion

The introduction of Improved Prompt Diffusion (iPromptDiff) marks a significant step forward in the quest to improve visual in-context learning with diffusion models. By innovatively addressing existing limitations through advanced visual context processing and dynamic multimodal fusion, this research offers a robust framework for future developments in the field. The evidence from comprehensive experiments suggests broad applicability, potentially transforming how visual in-context tasks are approached and solved.