TaskBench: Benchmarking LLMs for Task Automation

The paper introduces TaskBench, a benchmark for evaluating how well LLMs perform task automation. It addresses the lack of systematic benchmarks for LLM-driven task automation and evaluates models along three dimensions: task decomposition, tool invocation, and parameter prediction.

Key Concepts and Contributions

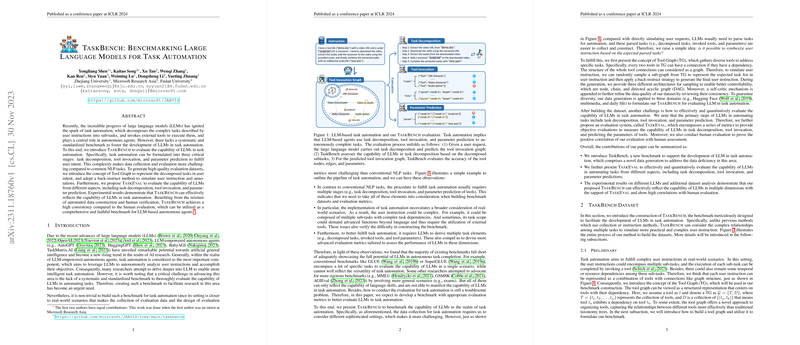

- Task Automation Context: With advancements in LLMs, autonomous agents have shown promise in task automation. The paper categorizes this into three stages: breaking down a user's instructions, invoking the correct tools, and predicting necessary parameters to execute tasks successfully. Recognizing the complexity and lack of standardized benchmarks in this area, the authors present TaskBench.

- Tool Graph and Back-Instruct Method: The paper introduces the Tool Graph (TG), a representation in which tools are nodes and the dependencies between them are edges. From TG, the authors derive user instructions with a 'back-instruct' method: they sample sub-graphs from TG and synthesize user prompts backward from those sub-graphs, so that the prompts reflect real-world task complexity.

- TaskEval Metrics: To evaluate LLM capabilities, the authors introduce TaskEval, a suite of metrics addressing different facets of task automation. These metrics objectively measure how well LLMs perform task decomposition, tool invocation, and parameter prediction.

- Experimental Validation: The paper reports experiments across multiple LLMs, including GPT variants and several open-source models. The results confirm that TaskBench effectively captures the task automation capabilities of these models and offer insight into their strengths and areas for improvement.
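
The sub-graph sampling step behind back-instruct can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the tool names, the `TOOL_GRAPH` contents, the chain-only sampling strategy, and the prompt wording are all hypothetical, and the step that actually sends the prompt to an LLM is left out.

```python
import random

# Hypothetical tool graph: each tool maps to the tools that can consume its output.
# Tool names are illustrative and not taken from the paper.
TOOL_GRAPH = {
    "speech_to_text": ["translate_text", "summarize_text"],
    "translate_text": ["summarize_text", "text_to_speech"],
    "summarize_text": ["text_to_speech"],
    "text_to_speech": [],
}


def sample_chain(graph, max_len, rng):
    """Sample a chain sub-graph by following dependency edges from a random start node."""
    node = rng.choice(sorted(graph))
    nodes, edges = [node], []
    # Stop when the chain is long enough or the current tool has no successors.
    while len(nodes) < max_len and graph[node]:
        nxt = rng.choice(graph[node])
        edges.append((node, nxt))
        nodes.append(nxt)
        node = nxt
    return nodes, edges


def back_instruct_prompt(nodes, edges):
    """Build a prompt asking for a user instruction that matches the sampled sub-graph."""
    steps = ", ".join(f"{a} -> {b}" for a, b in edges) or nodes[0]
    return (
        "Write a realistic user request that requires exactly these tools "
        f"({', '.join(nodes)}) with these dependencies: {steps}."
    )


rng = random.Random(0)
nodes, edges = sample_chain(TOOL_GRAPH, max_len=3, rng=rng)
print(back_instruct_prompt(nodes, edges))
```

Because the instruction is generated from the sub-graph, the sampled nodes and edges can serve directly as the ground-truth annotation for that instruction.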

Experimental Findings

- Task Decomposition: Models differ in their ability to break down tasks. GPT-4 outperforms the other models, an outcome likely tied to its advanced reasoning capabilities.

- Tool Invocation Performance: Results for node prediction (selecting the right tools) and edge prediction (selecting the right dependencies between them) vary significantly, with edge prediction generally the more challenging of the two. This highlights how difficult it is for models to understand dependencies between tools.

- Parameter Prediction: Predicting the correct parameters for tool execution remains demanding, even though GPT-4 achieves the highest accuracy. This underscores the challenge LLMs face in understanding detailed, context-specific requirements.
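
To make the node, edge, and parameter comparisons above concrete, here is a minimal sketch that scores a predicted tool graph against a reference using set-based F1. Treating each score as a set-overlap F1 is an assumption made for illustration; the exact TaskEval definitions are given in the paper and may differ. The tool names, parameter names, and values are hypothetical.

```python
def f1(predicted, gold):
    """Set-based F1 between predicted and gold items (illustrative scoring choice)."""
    predicted, gold = set(predicted), set(gold)
    overlap = len(predicted & gold)
    if overlap == 0:
        return 0.0
    precision = overlap / len(predicted)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


# Hypothetical reference annotation for one instruction.
gold_nodes = ["speech_to_text", "translate_text", "text_to_speech"]
gold_edges = [("speech_to_text", "translate_text"), ("translate_text", "text_to_speech")]
gold_params = [("translate_text", "target_lang", "fr")]

# Hypothetical model output: right tools, one wrong dependency, one wrong parameter value.
pred_nodes = ["speech_to_text", "translate_text", "text_to_speech"]
pred_edges = [("speech_to_text", "translate_text"), ("speech_to_text", "text_to_speech")]
pred_params = [("translate_text", "target_lang", "de")]

node_f1 = f1(pred_nodes, gold_nodes)    # 1.0: every tool was selected
edge_f1 = f1(pred_edges, gold_edges)    # 0.5: one of two dependencies is correct
name_f1 = f1(                           # 1.0: parameter name matches
    [(tool, name) for tool, name, _ in pred_params],
    [(tool, name) for tool, name, _ in gold_params],
)
value_f1 = f1(pred_params, gold_params)  # 0.0: parameter value is wrong
```

In this example the model picks every tool correctly yet loses points on one dependency and one parameter value, which mirrors the pattern reported above: selecting tools is easier than wiring them together or filling in their arguments.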

Implications and Future Directions

TaskBench is a comprehensive benchmarking framework that fills a crucial gap in evaluating LLMs for task automation. It has several implications:

- Practical Impact: By offering a method to evaluate LLM performance in complex, real-world task scenarios, TaskBench can guide developers in optimizing models for practical applications in autonomous systems.

- Theoretical Insights: The approach can inspire further research into enhancing tool invocation strategies within LLMs, potentially leading to more nuanced models capable of intricate task executions.

- Future Developments: The authors suggest extending TaskBench to cover more domains and refining the evaluation criteria, which could lead to even broader applicability and insights into LLM capabilities.

Conclusion

TaskBench provides a structured and effective approach to assessing LLMs in task automation. By separately evaluating how these models handle task decomposition, tool invocation, and parameter prediction, this work lays the groundwork for future improvements in autonomous systems. With its novel data generation methodology and rigorous evaluation metrics, TaskBench is positioned as a significant contribution to AI research, particularly toward making LLMs more practically applicable.