Introduction

Generative LLMs have rapidly become a foundational part of modern artificial intelligence research and deployment. Serving these models efficiently at inference time is a challenge that has drawn significant attention, particularly because of its demands on computational resources. The paper "Splitwise: Efficient Generative LLM Inference Using Phase Splitting" presents a novel approach to this challenge: a technique called Splitwise that improves LLM inference clusters with respect to throughput, cost, and power.

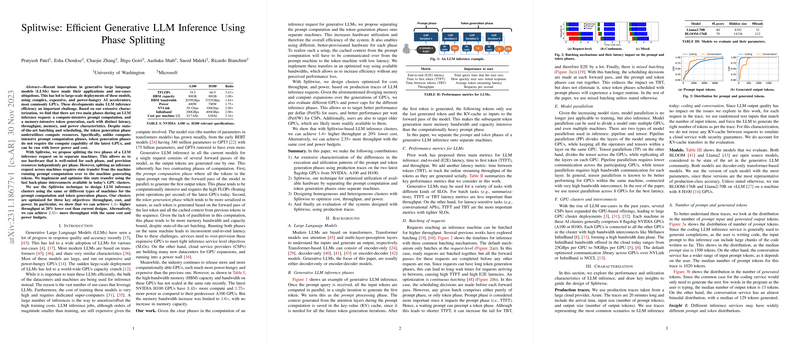

Phase Splitting in LLM Inference

One of the core insights of the paper is that LLM inference consists of two distinct phases: prompt computation and token generation. Prompt computation is compute-intensive. All input tokens are processed in parallel, which demands a large number of floating-point operations. Token generation, by contrast, is memory-bound and serialized, because each new token depends on the previously generated tokens and the cached context. As a result, current deployments that run both phases on the same machines underutilize compute during token generation, and this is where Splitwise comes into play. It proposes running prompt computation and token generation on separate machines, so that each phase can use hardware suited to its computational profile. This separation requires efficiently transferring state from the prompt machine to the token machine, specifically the model's key-value (KV) cache. Splitwise performs this transfer over the high-speed networks already available in GPU clusters.
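
The following minimal Python sketch estimates the KV-cache size for a prompt and an idealized time to transfer it, to show how much state the handoff involves. It makes four assumptions:

- The model is a standard decoder with full multi-head attention. Each layer stores one key and one value vector of size `hidden_dim` per token, and there is no grouped-query attention.
- Values are stored in fp16.
- The link between machines runs at a hypothetical 25 GB/s.
- The `ModelShape` class, the helper functions, and all numbers are illustrative and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    """Hypothetical decoder-only model shape (illustrative numbers, not from the paper)."""
    num_layers: int
    hidden_dim: int          # num_heads * head_dim; assumes full multi-head attention (no GQA/MQA)
    bytes_per_element: int   # 2 for fp16/bf16


def kv_cache_bytes(shape: ModelShape, num_tokens: int) -> int:
    """KV-cache bytes for num_tokens: one key and one value vector per layer per token."""
    per_token = 2 * shape.num_layers * shape.hidden_dim * shape.bytes_per_element
    return per_token * num_tokens


def transfer_seconds(num_bytes: int, link_bytes_per_s: float) -> float:
    """Idealized transfer time: ignores latency, protocol overhead, and overlap with compute."""
    return num_bytes / link_bytes_per_s


shape = ModelShape(num_layers=40, hidden_dim=5120, bytes_per_element=2)
prompt_tokens = 1000
size = kv_cache_bytes(shape, prompt_tokens)
secs = transfer_seconds(size, link_bytes_per_s=25e9)  # hypothetical 25 GB/s (200 Gb/s) link
print(f"KV cache: {size / 1e6:.1f} MB, transfer: {secs * 1e3:.1f} ms")
# KV cache: 819.2 MB, transfer: 32.8 ms
```

With these assumed numbers, a 1,000-token prompt produces about 819 MB of KV cache. Moving it over the assumed link takes roughly 33 ms, before latency and protocol overhead. The cost grows linearly with prompt length, which is why a fast interconnect matters for phase splitting.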

Splitwise: Design and Optimization

The paper then designs LLM inference clusters around Splitwise's phase splitting, optimizing for throughput, cost, and power consumption. Three key designs are proposed, covering both homogeneous and heterogeneous clusters with different GPU combinations. These include configurations in which the prompt phase runs on high-performance GPUs and the token generation phase runs on hardware optimized for memory bandwidth and capacity. Benchmark results indicate that Splitwise-based designs can achieve up to 1.4 times higher throughput at 20% lower cost than conventional designs. Alternatively, they can deliver 2.35 times more throughput within the same cost and power budgets. These gains come from matching hardware to the distinct requirements of each inference phase.
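
A toy provisioning search can illustrate the trade-off behind these designs. The Python sketch below assumes that a split cluster's steady-state throughput is limited by whichever pool (prompt or token) has less capacity. It exhaustively searches pool sizes for the highest throughput within a cost and power budget. The `Machine` type, the `best_split` function, and every per-machine rate, cost, and power figure are hypothetical. They are not the paper's measurements or its actual provisioning procedure.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Machine:
    """Hypothetical machine type; every number is illustrative, not a measurement."""
    name: str
    prompt_rps: float  # requests/s sustained when running only prompt phases
    token_rps: float   # requests/s sustained when running only token phases
    cost: float        # relative cost units
    power: float       # relative power units


def best_split(prompt_m, token_m, cost_budget, power_budget, max_per_pool=20):
    """Exhaustively search prompt/token pool sizes under cost and power budgets.

    Toy model: cluster throughput is min(prompt-pool capacity, token-pool capacity),
    i.e. the slower phase is the bottleneck. Returns (throughput, cost, n_prompt, n_token)
    with the highest throughput, preferring lower cost on ties, or None if nothing fits.
    """
    best, best_key = None, None
    for n_p, n_t in product(range(1, max_per_pool + 1), repeat=2):
        cost = n_p * prompt_m.cost + n_t * token_m.cost
        power = n_p * prompt_m.power + n_t * token_m.power
        if cost > cost_budget or power > power_budget:
            continue
        tput = min(n_p * prompt_m.prompt_rps, n_t * token_m.token_rps)
        key = (tput, -cost)  # maximize throughput first, then minimize cost
        if best_key is None or key > best_key:
            best, best_key = (tput, cost, n_p, n_t), key
    return best


fast = Machine("fast", prompt_rps=10.0, token_rps=4.0, cost=2.0, power=2.0)
lean = Machine("lean", prompt_rps=5.0, token_rps=3.5, cost=1.0, power=1.0)

print(best_split(fast, fast, cost_budget=20, power_budget=20))  # (28.0, 20.0, 3, 7)
print(best_split(fast, lean, cost_budget=20, power_budget=20))  # (40.0, 20.0, 4, 12)
```

With these made-up numbers, the heterogeneous split sustains more requests per second than an all-fast split at the same budget. It pairs the fast machine for prompts with a cheaper, lower-power machine for tokens, which mirrors the intuition behind heterogeneous designs. For reference, the paper's headline figure of 1.4 times the throughput at 0.8 times the cost corresponds to 1.4 / 0.8 = 1.75 times the throughput per unit cost.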

Cluster Provisioning and Scalability

The paper describes its provisioning methodology in detail, and the methodology accommodates different models, workloads, and service level objectives (SLOs). The paper evaluates numerous cluster configurations and checks SLO compliance at several latency percentiles for three metrics: end-to-end latency, time to first token (TTFT), and time between tokens (TBT). Splitwise's design is shown to adapt to the workload and to remain robust to model variations and load fluctuations, suggesting strong potential for real-world use. The paper's discussion and related-work sections also point to two opportunities for innovation: hardware tailored to the prompt and token phases, and scheduling strategies for heterogeneous platforms.
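
The Python sketch below illustrates percentile-based SLO checks. It derives TTFT, TBT, and end-to-end latency from per-request token timestamps and compares nearest-rank percentiles against limits. The `RequestTrace` structure, the choice to pool TBT samples across all requests, and the SLO thresholds are assumptions for illustration. The paper's own SLO definitions may differ.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestTrace:
    """Hypothetical per-request trace: arrival time and output-token emission times (seconds)."""
    arrival: float
    token_times: list[float]


def percentile(values, p):
    """Nearest-rank percentile for p in (0, 100]."""
    if not values:
        raise ValueError("percentile of empty sequence")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def latency_metrics(traces):
    """Per-request TTFT and end-to-end latency; TBT gaps pooled across all requests."""
    ttft = [t.token_times[0] - t.arrival for t in traces]
    e2e = [t.token_times[-1] - t.arrival for t in traces]
    tbt = [b - a for t in traces for a, b in zip(t.token_times, t.token_times[1:])]
    return {"ttft": ttft, "tbt": tbt, "e2e": e2e}


def meets_slos(traces, slos):
    """slos maps (metric, percentile) -> maximum allowed latency in seconds."""
    metrics = latency_metrics(traces)
    return all(percentile(metrics[m], p) <= limit for (m, p), limit in slos.items())


traces = [
    RequestTrace(arrival=0.0, token_times=[0.20, 0.25, 0.30]),
    RequestTrace(arrival=1.0, token_times=[1.30, 1.34, 1.40, 1.45]),
    RequestTrace(arrival=2.0, token_times=[2.90, 2.95]),
]
# Hypothetical SLO limits, in seconds.
slos = {("ttft", 50): 0.5, ("ttft", 99): 1.0, ("tbt", 99): 0.1, ("e2e", 99): 2.0}
print(meets_slos(traces, slos))  # True
```

Tightening the P99 TTFT limit to 0.5 s would make this check fail, because the third request waits about 0.9 s for its first token. Tail percentiles are often the binding constraint when sizing prompt machines.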

In summary, "Splitwise: Efficient Generative LLM Inference Using Phase Splitting" offers a practical approach to deploying generative LLMs that raises efficiency and throughput while balancing cost and power constraints. Its technique and findings are likely to be valuable as the AI community moves toward more scalable and efficient use of LLMs across many applications.