Efficient LLM Inference with Attention Offloading

Introduction

Transformer-based large language models (LLMs), such as those built on the GPT and BERT architectures, perform remarkably well on NLP tasks, from chatbots to advanced code completion tools. Yet their heavy computational demands, particularly during inference, make them costly and inefficient to deploy at scale. Attention offloading is a recently proposed approach that addresses these challenges by changing how hardware resources are used during LLM inference.

The Issue at Hand

In typical setups, LLM inference runs on specialized, high-performance accelerators such as NVIDIA's A100 GPUs or Google's TPUs. While these devices excel at heavy computation, they are expensive and are not always used efficiently throughout the inference process. The inefficiency is most apparent in the attention operations, a component of the LLM that is limited by memory access rather than by raw compute.

To give a clearer picture, most modern accelerators bundle large amounts of compute with high-speed memory (High Bandwidth Memory, HBM). However, the demands of attention during the token generation phase (for example, when conversing with a chatbot or generating code) do not match this design. For every generated token, attention must read the request's entire key-value (KV) cache while performing comparatively little arithmetic on it, so it is bound by memory bandwidth rather than compute. As a result, the compute units of these costly accelerators sit partly idle.
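The mismatch can be made concrete with a rough arithmetic-intensity estimate (FLOPs per byte read from memory). The sketch below is a back-of-the-envelope model, not a measurement: the function names are hypothetical, 16-bit values are assumed, and activation traffic and the softmax are ignored.

```python
def attention_decode_intensity(context_len, head_dim, bytes_per_elem=2):
    """FLOPs per byte for one attention head generating one token.

    Assumes the cost is dominated by reading the cached keys and values.
    """
    # Q.K^T and the weighted sum over V each cost one multiply-add
    # (2 FLOPs) per cached element.
    flops = 4 * context_len * head_dim
    # The K cache and the V cache are each read once.
    bytes_read = 2 * context_len * head_dim * bytes_per_elem
    return flops / bytes_read


def linear_decode_intensity(batch_size, d_in, d_out, bytes_per_elem=2):
    """FLOPs per byte for a dense layer applied to one token per request.

    Assumes the weights are read once and shared by the whole batch.
    """
    flops = 2 * batch_size * d_in * d_out
    bytes_read = d_in * d_out * bytes_per_elem
    return flops / bytes_read
```

Under these assumptions, `attention_decode_intensity(2048, 128)` gives 1.0 regardless of batch size, because each request reads its own KV cache, while `linear_decode_intensity(64, 4096, 4096)` gives 64.0. Batching raises the intensity of the dense layers but does nothing for attention.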

Enter Attention Offloading

The core idea is simple: separate the memory-intensive work from the compute-intensive work by using two distinct sets of devices. Cheaper, memory-optimized devices handle the attention component, while the powerful, expensive accelerators are reserved for the remaining computation in the LLM workflow.

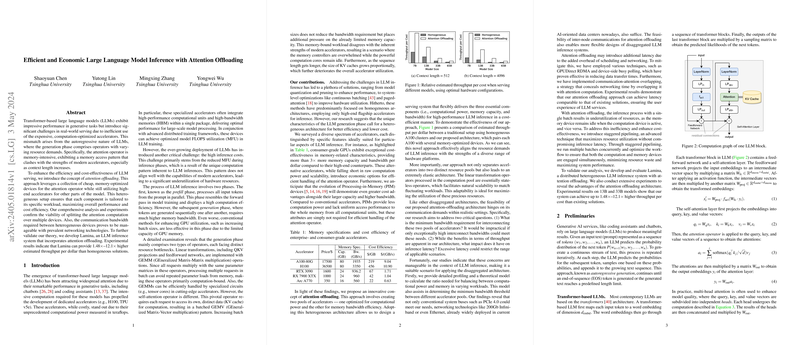
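The split described above can be sketched in a few lines of Python. This is a toy illustration under strong simplifications (a single attention head, one request, no residual connections or feed-forward layers), and the class names `AttentionWorker` and `ModelWorker` are hypothetical rather than taken from the research.

```python
import math


def matvec(matrix, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


class AttentionWorker:
    """Hypothetical memory-optimized device: owns the KV cache, runs attention."""

    def __init__(self):
        self.keys = []
        self.values = []

    def attend(self, q, k, v):
        # Append the new token's key and value to the cache.
        self.keys.append(k)
        self.values.append(v)
        # Scaled dot-product scores against every cached key.
        scale = 1.0 / math.sqrt(len(q))
        scores = [scale * sum(a * b for a, b in zip(q, key)) for key in self.keys]
        # Numerically stable softmax.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        # Weighted sum of the cached values.
        return [
            sum(w * val[j] for w, val in zip(weights, self.values)) / total
            for j in range(len(v))
        ]


class ModelWorker:
    """Hypothetical compute-optimized accelerator: holds weights, runs projections."""

    def __init__(self, wq, wk, wv, wo, attention_worker):
        self.wq, self.wk, self.wv, self.wo = wq, wk, wv, wo
        self.attention_worker = attention_worker

    def decode_step(self, x):
        q = matvec(self.wq, x)
        k = matvec(self.wk, x)
        v = matvec(self.wv, x)
        # In a real deployment this call would cross the network.
        attn = self.attention_worker.attend(q, k, v)
        return matvec(self.wo, attn)
```

Note that only `q`, `k`, `v`, and the attention output cross the boundary between the two workers; the KV cache itself never leaves the memory-optimized side.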

Why does this matter? Assigning each operation to the hardware best suited for it can both raise the system's efficiency (measured as throughput per dollar spent) and improve the utilization of the costly compute accelerators. The researchers estimate that this setup could improve throughput per dollar by 1.48 to over 12 times compared to traditional, non-offloaded systems.

Practical Considerations and Results

Implementing this method is not without hurdles. The key challenge is managing communication between heterogeneous devices, since some data must travel between the memory-focused and compute-focused devices at every step. The balance here is critical: too much communication overhead could negate the benefits of offloading.
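To get a feel for the communication cost, the following sketch estimates how many bytes cross the link per decoding step. It assumes standard multi-head attention where, for each layer and each request, the query, key, and value vectors are sent to the attention device and one output vector comes back, all with the model's hidden dimension and 16-bit values. The function names and any numbers passed to them are illustrative assumptions, not figures from the research.

```python
def bytes_per_decode_step(batch_size, hidden_dim, num_layers, bytes_per_elem=2):
    """Bytes exchanged between the two device pools for one generated token."""
    # q, k and v go to the attention device...
    sent = 3 * batch_size * hidden_dim * bytes_per_elem
    # ...and the attention output comes back.
    received = batch_size * hidden_dim * bytes_per_elem
    return num_layers * (sent + received)


def required_bandwidth_gbps(total_bytes, comm_budget_s):
    """Link speed (gigabits per second) needed to move total_bytes in the budget."""
    return total_bytes * 8 / comm_budget_s / 1e9
```

For instance, a hypothetical model with 32 layers and a hidden dimension of 4096 exchanges `bytes_per_decode_step(1, 4096, 32)` = 1,048,576 bytes (1 MiB) per request per token. The volume grows linearly with batch size, which is why the time budget allowed for communication determines whether an ordinary data center network is enough.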

In tests with LLMs of up to 33 billion parameters, the offloading approach proved effective: communication between the two sets of devices could be handled with existing networking technologies, without requiring exceptionally high bandwidth. This suggests that deploying such a system in current data center environments is feasible.

In terms of performance, pairing memory-optimized devices with high-end accelerators allowed the system to handle significantly larger batch sizes without a drop in processing speed. This translates directly into serving more simultaneous user requests in real-world applications, such as a chatbot or code assistant answering many queries at once.
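One way to see why batch size is tied to memory is to compute the KV cache footprint, which grows linearly with the number of concurrent requests. The sketch below assumes standard multi-head attention with 16-bit values and the same context length for every request; the function names and example configuration are hypothetical.

```python
def kv_cache_bytes(batch_size, context_len, hidden_dim, num_layers, bytes_per_elem=2):
    """Total bytes needed to cache keys and values for a batch of requests."""
    # One key vector and one value vector per token, per layer, per request.
    return 2 * num_layers * batch_size * context_len * hidden_dim * bytes_per_elem


def max_batch_size(memory_bytes, context_len, hidden_dim, num_layers, bytes_per_elem=2):
    """Largest batch whose KV cache fits in the given memory."""
    per_request = kv_cache_bytes(1, context_len, hidden_dim, num_layers, bytes_per_elem)
    return memory_bytes // per_request
```

With 32 layers, a hidden dimension of 4096, and a 2048-token context, each request needs `kv_cache_bytes(1, 2048, 4096, 32)` = 1 GiB of cache, so 16 GiB of cache memory holds at most 16 such requests. Adding memory capacity on the attention side therefore raises the batch size the system can sustain.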

Future Directions and Implications

This research not only provides a compelling method to reduce the costs associated with LLM inference but also opens the door to more specialized uses of hardware in the field of AI and machine learning. As hardware technology evolves and more specialized units enter the market, the principles demonstrated here could guide more nuanced approaches to system architecture in AI deployments. Furthermore, as models continue to grow in size and complexity, innovations like attention offloading will be crucial for maintaining and improving the accessibility and sustainability of AI technologies.

In conclusion, attention offloading represents a practical and impactful advancement in optimizing LLM inference tasks. It's a step forward in marrying the strengths of different technologies to not just do more with less, but to do it better and cheaper.