FlexGen: High-Throughput Generative Inference of LLMs with a Single GPU

The paper investigates FlexGen, a sophisticated framework designed to optimize generative inference of LLMs, particularly under constraints of limited computational resources such as a single GPU. Given the expansive computational and memory demands inherent to LLMs, FlexGen represents a strategic response to facilitate efficient inference without requiring multiple high-end accelerators.

Key Contributions

- Offloading Framework: FlexGen capitalizes on an offloading strategy that integrates computational capacities across GPU, CPU, and disk. By formulating the inference process as a graph traversal problem, FlexGen identifies optimal storage and compute strategies to minimize execution time. Emphasizing throughput-oriented workloads, FlexGen utilizes a zig-zag block computation schedule. This approach enables substantial weight reuse, thus enhancing I/O efficiency and supporting larger batch sizes.

- Quantization and Compression: One of the key innovations lies in compressing both model weights and the KV cache to 4-bit quantized formats. This compression achieves significant reductions in memory usage and I/O operations while maintaining an accuracy that is nearly equivalent to FP16 implementations. This strategy aligns with FlexGen’s goal to maximize batch size and throughput on commodity hardware.

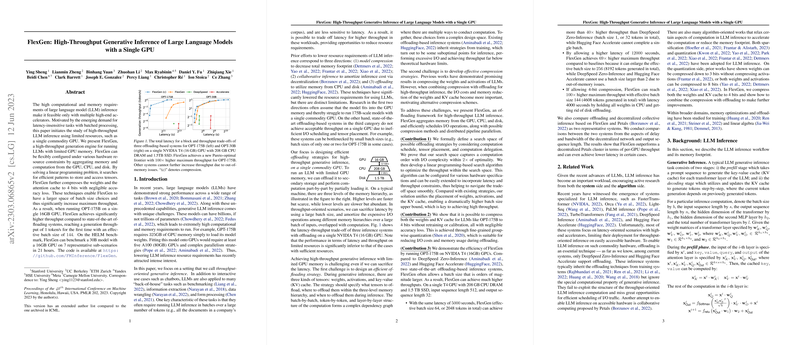

- Performance Benchmarking: The performance of FlexGen is empirically validated through rigorous comparisons with prevailing systems like DeepSpeed ZeRO-Inference and Hugging Face Accelerate. When deployed on a single T4 GPU with constrained RAM and SSD capacity, FlexGen demonstrated its capability to execute inference on the OPT-175B model with unprecedented throughput, significantly outmatching existing offloading solutions. FlexGen achieved a throughput of 1 token per second using a 16GB GPU, showcasing a potential throughput increase of approximately 100 times relative to baseline systems.

Theoretical and Practical Implications

FlexGen’s methodologies elucidate a clear path forward in leveraging multi-tiered memory systems for LLM inference. By amalgamating memory resources across devices via optimized scheduling and compression techniques, FlexGen addresses both computational and memory bottlenecks effectively. The practical upshot is a scalable inference framework that reduces reliance on premium hardware without sacrificing performance.

Moreover, this approach offers substantial theoretical implications for future AI systems concerning the balance between hardware utilization and application-level throughput. By supporting latency-insensitive, batch processing tasks, FlexGen aligns well with real-world AI deployments that prioritize throughput over individual request latency—such as document processing and model benchmarking.

Future Directions

Building upon its foundations, future developments could explore integrating FlexGen with decentralized inference approaches, balancing between collaborative computing and offloading. Additionally, enhanced scheduling algorithms could be further refined to achieve greater efficiency as hardware architectures evolve.

In conclusion, FlexGen’s strategies provide a striking advancement in optimizing LLM inference within constrained environments. By addressing both theoretical complexities and practical deployment challenges, FlexGen sets a new benchmark for high-throughput AI inference systems. This work not only broadens the applicability of LLMs but also invites further exploration into efficient computation techniques in AI research.