MobileCLIP: Efficient Image-Text Models via Multi-Modal Reinforced Training

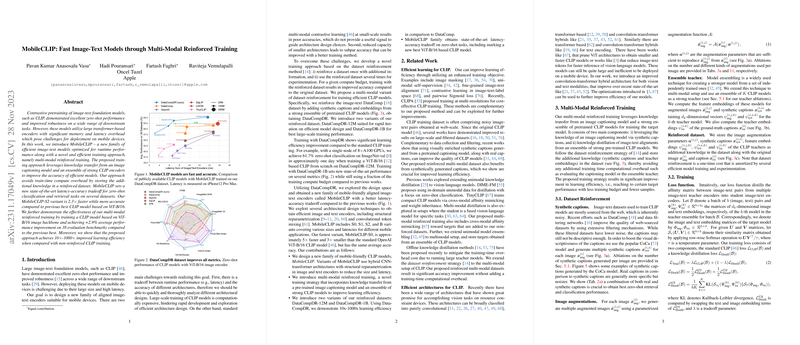

This paper introduces MobileCLIP, a family of efficient image-text models designed for deployment on mobile devices. Large models like CLIP achieve strong zero-shot performance across many downstream tasks, but their memory and latency overhead makes on-device use difficult. To address this, MobileCLIP pairs efficient architectures with a new multi-modal reinforced training approach that significantly improves the latency-accuracy tradeoff.

Key Contributions

- Efficient Models: MobileCLIP models employ hybrid CNN-transformer architectures in both the image and text encoders, combining inexpensive convolutional token mixing with self-attention. Structural reparameterization is applied to further reduce model size and latency.

- Multi-Modal Reinforced Training: The training approach transfers knowledge from both a pre-trained image captioning model (CoCa) and an ensemble of strong CLIP encoders. The additional knowledge is stored as synthetic captions and teacher embeddings in a reinforced dataset (DataCompDR), which enables efficient knowledge transfer without significant train-time compute overhead.
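
The training objective described above can be sketched as a blend of the usual contrastive loss and a distillation term computed from teacher embeddings that were stored ahead of time. This is a minimal sketch under stated assumptions, not the authors' implementation: the KL-based similarity-matching form, the function names, the blend weight `lam`, and the shared `temperature` are all illustrative choices, and a single teacher stands in for the ensemble.

```python
import math

def log_softmax(row):
    """Numerically stable log-softmax of one list of logits."""
    m = max(row)
    lse = m + math.log(sum(math.exp(v - m) for v in row))
    return [v - lse for v in row]

def transpose(matrix):
    return [list(col) for col in zip(*matrix)]

def similarity(img_emb, txt_emb, temperature):
    """Scaled dot-product similarities (rows: images, columns: texts)."""
    return [[sum(a * b for a, b in zip(i, t)) / temperature for t in txt_emb]
            for i in img_emb]

def contrastive(logits):
    """Symmetric cross-entropy with matching pairs on the diagonal."""
    def one_way(m):
        return -sum(log_softmax(row)[k] for k, row in enumerate(m)) / len(m)
    return 0.5 * (one_way(logits) + one_way(transpose(logits)))

def kl_rows(teacher, student):
    """Mean over rows of KL(softmax(teacher_row) || softmax(student_row))."""
    total = 0.0
    for t_row, s_row in zip(teacher, student):
        t_lp, s_lp = log_softmax(t_row), log_softmax(s_row)
        total += sum(math.exp(tl) * (tl - sl) for tl, sl in zip(t_lp, s_lp))
    return total / len(teacher)

def distill(student_logits, teacher_logits):
    """Match the student's similarity matrix to the teacher's, both directions."""
    return 0.5 * (kl_rows(teacher_logits, student_logits)
                  + kl_rows(transpose(teacher_logits), transpose(student_logits)))

def reinforced_loss(s_img, s_txt, t_img, t_txt, lam=0.7, temperature=0.07):
    """(1 - lam) * contrastive + lam * distillation; lam is a hypothetical weight."""
    s = similarity(s_img, s_txt, temperature)
    t = similarity(t_img, t_txt, temperature)  # teacher embeddings read from storage
    return (1.0 - lam) * contrastive(s) + lam * distill(s, t)

# Toy batch of two image-text pairs with 2-D unit-norm embeddings.
s_img = [[1.0, 0.0], [0.0, 1.0]]
s_txt = [[0.8, 0.6], [0.6, 0.8]]
t_img = [[1.0, 0.0], [0.0, 1.0]]
t_txt = [[1.0, 0.0], [0.0, 1.0]]
loss = reinforced_loss(s_img, s_txt, t_img, t_txt)
```

Because the teacher embeddings are plain inputs here, no teacher forward pass happens inside the training step, which is the property the reinforced dataset is meant to provide.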

Numerical Results and Analysis

- Latency and Accuracy: The MobileCLIP-S2 variant is 2.3x faster than the previous best ViT-B/16-based CLIP model while also being more accurate. On an iPhone 12 Pro Max, latency is reported as 1.5 ms for MobileCLIP-S0 versus 5.9 ms for ViT-B/32 CLIP. MobileCLIP-S0 is roughly 5x faster and 3x smaller than the baseline ViT-B/16 CLIP model while retaining comparable zero-shot accuracy.

- Learning Efficiency: The proposed training approach demonstrates a 10x-1000x improvement in learning efficiency compared to standard (non-reinforced) contrastive training. Training on DataCompDR-1B reaches state-of-the-art performance on several benchmarks using a fraction of the compute traditionally required.

Architectural and Training Insights

- Reinforced Dataset: The DataCompDR-1B dataset is created by reinforcing the DataComp-1B dataset with additional information, including synthetic captions and feature embeddings from an ensemble of strong CLIP teachers. This one-time cost in dataset reinforcement leads to substantial performance gains across multiple training sessions and configurations.

- Hybrid Text Encoder: The text encoder combines 1-D convolutional layers with self-attention to balance efficiency and performance. Specifically, the Text-RepMixer block, inspired by convolutional token mixing, replaces some self-attention layers and improves latency without a significant loss in accuracy.
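
Structural reparameterization, which the convolutional token-mixing blocks rely on, can be illustrated with a small example. This is a generic sketch under simplifying assumptions, not the paper's exact block: it uses a single channel, an odd-length kernel, and a plain identity skip, and it omits the normalization layers that real reparameterizable blocks also fold in. All function names are hypothetical.

```python
def conv1d_same(x, kernel, bias=0.0):
    """Single-channel 1-D convolution (cross-correlation) with zero padding."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(k * padded[i + j] for j, k in enumerate(kernel)) + bias
            for i in range(len(x))]

def train_time_block(x, kernel, bias):
    """Train-time form with two branches: identity skip plus convolution."""
    return [a + b for a, b in zip(x, conv1d_same(x, kernel, bias))]

def reparameterize(kernel, bias):
    """Fold the identity skip into the centre tap of an odd-length kernel."""
    fused = list(kernel)
    fused[len(kernel) // 2] += 1.0
    return fused, bias

def inference_block(x, fused_kernel, fused_bias):
    """Inference-time form: one convolution, no skip branch."""
    return conv1d_same(x, fused_kernel, fused_bias)

# Toy sequence of four token features for one channel.
x = [0.5, -1.0, 2.0, 0.25]
kernel, bias = [0.2, -0.1, 0.3], 0.05

fused_kernel, fused_bias = reparameterize(kernel, bias)
y_train = train_time_block(x, kernel, bias)
y_infer = inference_block(x, fused_kernel, fused_bias)
```

The two forms compute the same function up to floating-point rounding, so the skip branch costs nothing at inference time: the latency saving comes from removing a branch and its memory traffic, not from changing the model's output.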

Implications and Future Directions

- On-Device Inference: MobileCLIP models set a new benchmark for efficient image-text model deployment on mobile devices. The architectural innovations pave the way for more practical applications where power and speed constraints are critical.

- Scalability: The substantial improvements in learning efficiency and the state-of-the-art results on multiple benchmarks suggest that the concepts introduced in this paper can be scaled further. Exploring additional datasets and more efficient architectures could yield models suitable for still more constrained environments.

- Model Distillation: The multi-modal reinforced training strategy opens avenues for further research into distillation techniques for multi-modal models. Extending this framework to other modalities or even incorporating more complex ensembling strategies could offer promising developments in the field.

Conclusion

MobileCLIP represents a significant advance in efficient image-text models. By combining structural reparameterization, hybrid architectures, and a novel training strategy, the paper establishes a new state of the art for models deployed in resource-constrained environments. Its methods and findings are likely to inform future work on efficient, scalable, and robust multi-modal learning.
