Improved Training Techniques for CLIP at Scale

The paper presents a detailed study of how to improve the efficiency and performance of CLIP (Contrastive Language-Image Pre-training) models. The authors introduce a family of models, together with training techniques intended to improve training dynamics while lowering computational cost. By combining better-initialized representations, the LAMB optimizer, and token-dropping augmentation, the work aims to reach stronger results with fewer resources.

Key Contributions

The paper emphasizes several pivotal contributions:

- Efficient Training Techniques: The proposed methods include initializing CLIP with pre-trained EVA representations. Starting from EVA combines the strengths of contrastive learning and masked image modeling, which stabilizes and speeds up training.

- Optimization Strategy: The adoption of the LAMB optimizer, known for its scalability in large-batch training, significantly enhances training efficiency. This optimizer improves convergence rates and is adept at managing the large batch sizes required for CLIP models.

- Augmentation via FLIP: FLIP randomly drops input tokens during training, which cuts the computational load by half while maintaining performance. The memory this frees allows larger batch sizes at no additional memory cost.

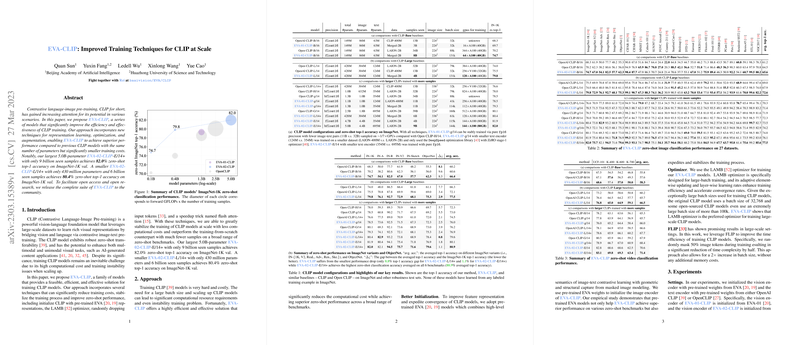

- Performance Evaluation: The largest model (5.0B parameters) attains 82.0% zero-shot top-1 accuracy on ImageNet-1K after only 9 billion seen samples. A smaller model with 430 million parameters achieves 80.4% accuracy after just 6 billion seen samples.
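
The token dropping described in the FLIP item above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `drop_tokens`, the `keep_ratio` parameter, and the toy 4-dimensional embeddings are assumptions made for illustration, and a real training loop would do this on batched tensors rather than Python lists. The sketch only shows the core idea, that keeping a random half of the tokens halves the sequence length the encoder has to process.

```python
import random

def drop_tokens(tokens, keep_ratio=0.5, rng=None):
    """Randomly keep a subset of tokens, preserving their original order.

    Hypothetical helper for illustration; names and defaults are assumptions.
    Returns the kept tokens and the indices they came from.
    """
    rng = rng or random.Random()
    num_keep = max(1, int(len(tokens) * keep_ratio))
    # Sample indices without replacement, then sort so positional order survives.
    kept = sorted(rng.sample(range(len(tokens)), num_keep))
    return [tokens[i] for i in kept], kept

# Toy example: a 224x224 image with patch size 14 gives a 16x16 grid, i.e. 256 tokens.
patch_tokens = [[float(i)] * 4 for i in range(256)]  # toy 4-dim embeddings
visible, kept_indices = drop_tokens(patch_tokens, keep_ratio=0.5, rng=random.Random(0))
print(len(patch_tokens), "->", len(visible))
```

Returning the kept indices alongside the tokens lets the caller look up the matching positional embeddings for the surviving tokens.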

Experimental Insights

The experimental section provides extensive evaluations across multiple zero-shot benchmarks, including ImageNet variants and ObjectNet. The models perform strongly throughout, outperforming counterparts trained from scratch on larger datasets. The ablation studies examine each component in turn and show that EVA-based initialization and the LAMB optimizer, combined with FLIP-style token dropping, each contribute a significant gain.
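
To make the role of LAMB in these ablations more concrete, here is a minimal sketch of one LAMB update for a single layer, following the commonly published form of the algorithm (Adam-style moments plus a layer-wise trust ratio). The function name `lamb_step` and all hyperparameter defaults are placeholders chosen for illustration; this summary does not state the settings used in the paper.

```python
import math

def lamb_step(w, g, m, v, step, lr=1e-3, beta1=0.9, beta2=0.999,
              eps=1e-6, weight_decay=0.01):
    """One LAMB update for a single layer stored as flat lists of floats.

    Illustrative sketch only; defaults are assumptions, not the paper's values.
    """
    # Adam-style first and second moment estimates.
    m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
    v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
    # Bias correction (step counts from 1).
    m_hat = [mi / (1 - beta1 ** step) for mi in m]
    v_hat = [vi / (1 - beta2 ** step) for vi in v]
    # Adam direction plus decoupled weight decay.
    update = [mh / (math.sqrt(vh) + eps) + weight_decay * wi
              for mh, vh, wi in zip(m_hat, v_hat, w)]
    # Layer-wise trust ratio: scale the step to the size of the weights.
    w_norm = math.sqrt(sum(x * x for x in w))
    u_norm = math.sqrt(sum(x * x for x in update))
    trust = w_norm / u_norm if w_norm > 0 and u_norm > 0 else 1.0
    new_w = [wi - lr * trust * ui for wi, ui in zip(w, update)]
    return new_w, m, v

w0 = [1.0, -2.0]
w1, m1, v1 = lamb_step(w0, [0.5, 0.5], [0.0, 0.0], [0.0, 0.0], step=1, lr=0.1)
```

Because of the trust ratio, the length of each layer's step equals the learning rate times the norm of that layer's weights, regardless of gradient scale. This per-layer normalization is the property usually credited for LAMB's stability at large batch sizes.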

Notably, the E/14+ model attains the highest average zero-shot classification accuracy across six benchmarks, improving on the OpenCLIP baselines.
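
For readers unfamiliar with how zero-shot top-1 accuracy is computed for CLIP-style models, the following sketch shows the usual procedure: embed one text prompt per class, embed the image, and pick the class whose text embedding has the highest cosine similarity. The encoders themselves are not shown, and the function names and toy 3-dimensional embeddings are assumptions for illustration, not outputs of any real model.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def zero_shot_predict(image_embedding, class_text_embeddings):
    """Return the best-matching label and the per-class cosine similarities."""
    img = l2_normalize(image_embedding)
    scores = {}
    for label, text_emb in class_text_embeddings.items():
        txt = l2_normalize(text_emb)
        scores[label] = sum(a * b for a, b in zip(img, txt))
    return max(scores, key=scores.get), scores

def top1_accuracy(predictions, labels):
    """Fraction of examples whose predicted label matches the true label."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

# Toy embeddings standing in for text-encoder outputs of class prompts.
class_embeddings = {"cat": [1.0, 0.0, 0.0], "dog": [0.0, 1.0, 0.0]}
images = [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1], [0.6, 0.5, 0.0]]
truth = ["cat", "dog", "dog"]

preds = [zero_shot_predict(img, class_embeddings)[0] for img in images]
accuracy = top1_accuracy(preds, truth)
```

No classifier is trained on the target dataset; the class names alone define the label space, which is why the same model can be scored across several benchmarks.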

Practical and Theoretical Implications

The methods proposed in this research have clear implications for training large-scale vision-language models. Practically, the reduction in training cost and computational requirements addresses a critical barrier to wider adoption of CLIP-like architectures. Theoretically, the work contributes to the understanding of efficient multimodal model scaling and the transferability of pretrained representations.

Future Directions

The paper opens several avenues for future research. Exploring further optimizations in token dropping techniques and examining the potential of alternative pre-training models could yield additional insights. Additionally, refining the training process for specific downstream applications may enhance the adaptability and specificity of CLIP models.

In conclusion, this paper offers a comprehensive examination of strategies to refine CLIP model training, demonstrating substantial improvements in performance metrics while reducing resource demands. These advancements are pivotal to the evolution of efficient multimodal learning systems, providing a robust framework for ongoing research and application in artificial intelligence.