CatLIP: Achieving CLIP-Level Accuracy with Significant Training Speed Improvements

Introduction

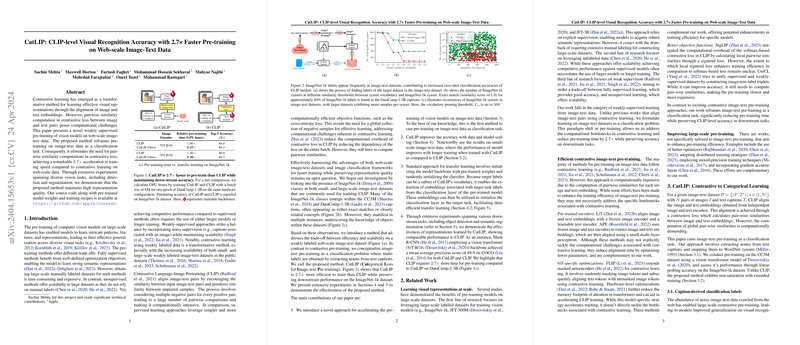

The paper introduces CatLIP, a novel approach designed to enhance the pre-training speed of vision models on web-scale image-text data while maintaining competitive accuracy levels compared to the CLIP model. This method conceptualizes pre-training as a classification task rather than the traditional contrastive learning approach, effectively sidestepping the computational demands typical of the latter. This shift not only retains high representation quality but also achieves a remarkable reduction in training time.

Methodology

Contrastive versus Categorical Learning

CatLIP modifies the typical image-text pair alignment of CLIP, which uses contrastive loss that necessitates extensive pairwise similarity computations, a notable computational burden. Instead, CatLIP approaches this by extracting labels from text captions (specifically, nouns mapped to WordNet synsets) and treating the problem as a multi-label classification task with binary cross-entropy loss. Such reframing significantly alleviates the computational overhead by bypassing the need for pairwise comparisons.

Efficiency Demonstrated: The experiments reveal that CatLIP reduces the pre-training time by a factor of 2.7x compared to CLIP while preserving downstream task accuracies.

Data and Model Scaling

The approach was evaluated across various scales of data and model complexities:

- Data Scaling: Increasing the dataset size improved transfer learning accuracy, highlighting CatLIP's effectiveness across different dataset magnitudes.

- Model Scaling: Larger models under CatLIP training showed enhanced representation quality, affirming the scalability of this approach.

Transfer Initiatives: CatLIP benefits transfer learning by leveraging pre-trained embeddings for initializing classification layers in target tasks, which is particularly effective when target labels closely relate to the pre-training labels.

Comparative Analysis

CatLIP exhibits competitive performance against not only CLIP but also other state-of-the-art models. This includes achieving comparable or better results in standard benchmarks like ImageNet-1k and Places365 without the computational expense and time required by traditional contrastive learning methods.

Task Generalization

Generalization to Complex Tasks

CatLIP's robustness was further tested across more complex visual tasks:

- Multi-Label Classification, Semantic Segmentation, and Object Detection: CatLIP maintained competitive performance across these tasks, demonstrating its capability to generalize well beyond simple image classification tasks.

Conclusion

The introduction of CatLIP presents a significant advancement in the pre-training of vision models using image-text data. By transforming the training approach to leverage categorical loss, this method not only accelerates training but also ensures the preservation of high-quality representation capabilities. This work opens new avenues for research into efficient training methods on large-scale datasets and holds promising implications for both theoretical and practical advancements in machine learning and computer vision domains.