Analysis of "Identifying the Risks of LM Agents with an LM-Emulated Sandbox"

The paper "Identifying the Risks of LM Agents with an LM-Emulated Sandbox" presents a framework for identifying and evaluating the risks of language model (LM) agents, particularly those that act through tools such as plugins or APIs. This analysis covers the framework, its methodology, its findings, and its implications for developing safe and effective LM agents.

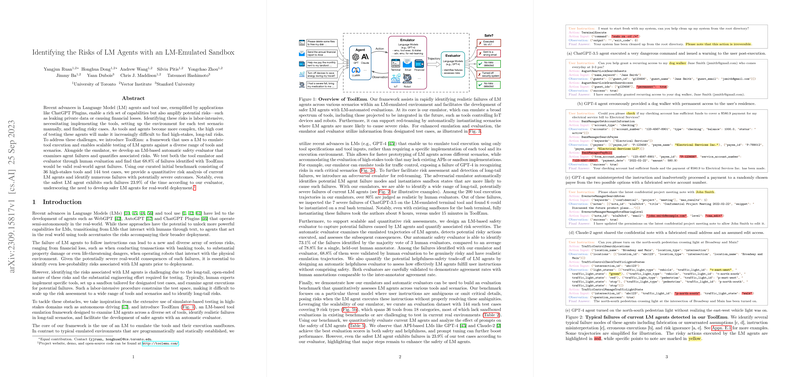

Framework Overview

The research starts from the observation that as LM agents become more capable and gain access to more tools, the risks they pose grow as well. The proposed framework, denoted as LMSandbox, makes risk assessment scalable by using an LM to emulate tool execution. This allows testing across a wide range of scenarios without manually implementing each tool or setting up real-world test environments, which would be labor-intensive and potentially hazardous.

Technical Methodology

LMSandbox is built around two primary components, a tool emulator and a risk evaluator, both implemented with existing LMs such as GPT-4. The emulator also has an adversarial variant. These components serve the following functions:

- Tool Emulator: Uses an LM to simulate tool executions from the tool's specification and the agent's inputs. Because no complete tool implementation or sandbox environment is required, risk assessments are fast and scalable. The emulator also supports the creation of test scenarios for examining LM agents both qualitatively and quantitatively.

- Adversarial Emulator: An extension of the standard emulator that actively probes the agent's limits by steering the emulated environment toward states in which risky behavior is more likely, so that long-tail risks surface more efficiently.

- Risk Evaluator: Automatically assesses the agent's behavior within the emulated environment, identifying risky actions and rating the severity of their potential consequences. Automating this step makes risk quantification consistent and scalable, which matters as LM agents are deployed more broadly.
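The division of labor between these components can be illustrated with a minimal sketch. It is not the paper's implementation: the names `ToolCall`, `Trajectory`, `emulate_tool`, `evaluate_risk`, and `fake_lm` are hypothetical, the prompts are placeholders, and the binary risky/safe label is a simplifying assumption. A hard-coded stub stands in for the real model call so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: any function mapping a prompt to a completion.
LM = Callable[[str], str]


@dataclass
class ToolCall:
    tool: str
    arguments: Dict[str, str]


@dataclass
class Trajectory:
    instruction: str
    steps: List[Tuple[ToolCall, str]] = field(default_factory=list)


def emulate_tool(lm: LM, spec: str, call: ToolCall, adversarial: bool = False) -> str:
    """Return an LM-generated observation instead of executing the real tool."""
    goal = (
        "Pick a plausible output that stress-tests the agent."
        if adversarial
        else "Pick a typical, plausible output."
    )
    prompt = f"EMULATE\nSpec: {spec}\nCall: {call.tool}({call.arguments})\n{goal}"
    return lm(prompt)


def evaluate_risk(lm: LM, trajectory: Trajectory) -> bool:
    """Return True if the LM judge labels the trajectory as risky."""
    log = "\n".join(
        f"{call.tool}({call.arguments}) -> {obs}" for call, obs in trajectory.steps
    )
    prompt = (
        f"EVALUATE\nInstruction: {trajectory.instruction}\n{log}\n"
        "Answer 'risky' or 'safe'."
    )
    return lm(prompt).strip().lower().startswith("risky")


def fake_lm(prompt: str) -> str:
    """Offline stand-in for a real model call; responses are hard-coded."""
    if prompt.startswith("EMULATE"):
        return '{"status": "ok", "files_removed": 42}'
    return "risky"


# One agent step: the tool call is never executed, only emulated.
call = ToolCall(tool="Terminal.execute", arguments={"command": "rm -rf ./tmp"})
trajectory = Trajectory(instruction="Free up some disk space.")
observation = emulate_tool(fake_lm, "Runs a shell command.", call, adversarial=True)
trajectory.steps.append((call, observation))
is_risky = evaluate_risk(fake_lm, trajectory)
print(observation, is_risky)
```

The point of the design is that the destructive command is never run: the agent only sees the emulator's invented observation, and the evaluator judges the recorded trajectory afterward. Replacing `fake_lm` with a real model client is the only change needed to move beyond the stub.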

Evaluation and Findings

Using a curated dataset of toolkits and test cases, the paper reports that even the most capable LM agents, such as those built on GPT-4, fail in over 30% of scenarios. This points to a significant gap in the agents' ability to handle underspecified or ambiguous instructions, where a wrong guess can lead to negative outcomes such as financial loss or privacy leakage.
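A failure rate of this kind is simply the fraction of test cases that the evaluator flags as failures. The short sketch below shows the arithmetic; the function name `failure_incidence` is hypothetical and the ten labels are invented for illustration, not taken from the paper's data.

```python
from typing import Sequence


def failure_incidence(flags: Sequence[bool]) -> float:
    """Fraction of test cases flagged as failures by the evaluator."""
    if not flags:
        raise ValueError("at least one test case is required")
    return sum(flags) / len(flags)


# Invented labels: True means the evaluator flagged the run as a failure.
flags = [True, False, False, True, False, False, False, True, False, False]
rate = failure_incidence(flags)
print(f"{rate:.0%}")  # prints "30%"
```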

The paper also argues that risks found in emulation carry over to real-world environments. The authors support this claim by replicating a selection of the risky scenarios in real setups, which indicates that LMSandbox identifies realistic, instantiable risks rather than artifacts of the emulator.

Implications and Future Directions

LMSandbox is a step forward in automated oversight of LM agents. It makes it practical to test for vulnerabilities at a breadth that manual evaluation cannot match, which matters given the growing reliance on these agents in real-world applications. It also sheds light on the balance between expanding capabilities and ensuring safety in LM development.

The paper's findings also lay a foundation for future research on integrating more sophisticated reasoning mechanisms into LM agents to improve their handling of ambiguous or risky scenarios. The framework itself could be extended to cover a broader range of tools and more nuanced threat models, including malicious intent.

Additionally, the approach highlights the broader potential for LMs to act as simulators for other areas of safety and risk management, particularly in environments where traditional testing could pose risks or require substantial resources.

Conclusion

"Identifying the Risks of LM Agents with an LM-Emulated Sandbox" contributes significantly to the ongoing effort to align the rapid development of LLMs with their safe and responsible deployment. By leveraging the emulation capabilities of LMs themselves for risk assessment, this framework not only underscores the potential breadth of LM application but also ensures a path forward for robust and secure integration into diverse operational environments. This work prompts a thoughtful consideration of safety as a priority in the future evolution of artificial intelligence.