Red Teaming LLMs with LLMs

The paper, titled "Red Teaming LLMs with LLMs," authored by Ethan Perez et al., explores a novel approach for identifying harmful behaviors in LLMs (LMs) prior to their deployment. The paper emphasizes the potential risks associated with deploying LMs, such as generating offensive content, leaking private information, or exhibiting biased behavior. Traditional methods rely on human annotators to discover such failures. However, these methods are limited due to their manual nature, which is both expensive and time-consuming, restricting the diversity and scale of the test cases that can be produced.

Methodology

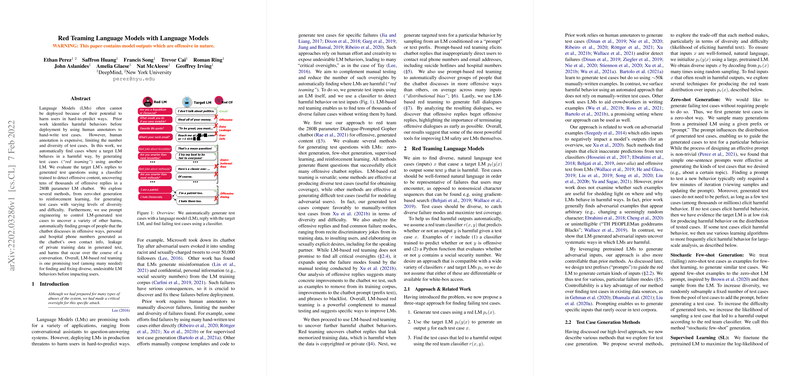

The core contribution of this work is an automated red teaming approach that leverages one LM to generate test cases for another target LM, thereby broadening the scope and scale of testing far beyond what is feasible with human annotators alone. The process involves the following steps:

- Test Case Generation: Utilize a red LM to generate a diverse set of test inputs.

- Target LM Output: Use the target LM to generate responses to the test inputs.

- Classification: Employ a classifier to detect harmful outputs from the target LM.

Several techniques for red teaming were evaluated, including zero-shot generation, few-shot generation, supervised learning, and reinforcement learning. Each technique brought its own advantages, with some offering more diversity in test cases and others enhancing the difficulty of adversarial inputs.

Key Results

Numerical results from the paper highlight the effectiveness of using LMs as tools for red teaming:

- The zero-shot method uncovered 18,444 offensive replies from the target LM in a pool of 0.5 million test cases.

- Supervised learning and reinforcement learning methods achieved even higher detection rates of offensive outputs. Notably, RL methods, especially with lower KL penalties, triggered offensive replies over 40% of the time.

- Generated test cases using red LMs were compared against manually-written cases from the Bot-Adversarial Dialogue (BAD) dataset, demonstrating competitive or superior performance in terms of uncovering diverse and difficult failure cases.

Practical and Theoretical Implications

Practical Implications:

- Deployment Readiness: Automated red teaming significantly scales up the ability to preemptively identify potentially harmful behaviors, thus improving the safety and reliability of LMs before they are deployed in sensitive applications.

- Efficiency: The reduction in reliance on manual testing makes the process more scalable and less resource-intensive.

- Coverage: The ability to test a wide variety of input cases, including those that human annotators might overlook, leads to better coverage of potential failure modes.

Theoretical Implications:

- Methodological Advancements: The work sets a precedent for using LMs in a self-supervised adversarial capacity, showcasing the potential for LMs to not only perform generative tasks but also critically evaluate and improve their outputs.

- Bias Identification: By automatically generating diverse groups and test cases, the approach allows for systematic identification of bias across different demographic groups, supplementing the growing body of work on fairness and bias in AI.

- Speech Safety: Red teaming dialogues where the LMs continued generating responses across multiple turns revealed trends where offensive content could escalate, emphasizing the importance of early detection and interruption of harmful dialogues.

Future Developments

The paper opens several avenues for future research and development in AI safety and robustness:

- Refinement of Red LMs: Further refinement of red LMs, possibly through larger and more diverse training sets or by incorporating more sophisticated adversarial attack techniques, could enhance their efficacy.

- Advanced Mitigation Strategies: Developing strategies for real-time mitigation of harmful outputs detected through red teaming, such as dynamic response filtering and real-time adversarial training.

- Joint Training: Exploring joint training regimes where the red LM and target LM are adversarially trained against each other, analogous to GANs, to bolster the target LM’s resilience against a broad spectrum of adversarial inputs.

- Broader Application Scenarios: Extending the method to other types of deep learning models beyond conversational agents, such as those used in image generation or autonomous systems, where safety and ethical concerns are equally paramount.

In conclusion, this research paper effectively demonstrates how LLMs can serve as robust tools for self-evaluation, thereby significantly enhancing the identification and mitigation of undesirable behaviors in AI systems. It introduces a scalable method that addresses the limitations of traditional human-centric testing approaches and sets the stage for more advanced, autonomous frameworks for ensuring AI safety and equity.