A Comprehensive Analysis of "Cybench: A Framework for Evaluating Cybersecurity Capabilities and Risk of LLMs"

The paper "Cybench: A Framework for Evaluating Cybersecurity Capabilities and Risk of LLMs" introduces a framework for assessing the cybersecurity capabilities of language models (LMs). It motivates the need for such a framework, describes how Cybench was constructed and evaluated, and reports empirical results for well-known models. This analysis gives a concise technical overview of the paper, focusing on its methodology, key findings, and implications for future AI developments in cybersecurity.

Introduction

The introduction frames the significance of LMs for cybersecurity in terms of real-world impact and recent AI policy, such as the 2023 US Executive Order on AI. It emphasizes that these capabilities are dual-use: an agent that can identify and exploit vulnerabilities can serve offensive ends, but the same agent can be used defensively, for example in penetration testing. The paper distinguishes itself from existing benchmarks by targeting professional-level Capture The Flag (CTF) challenges.

Framework Overview

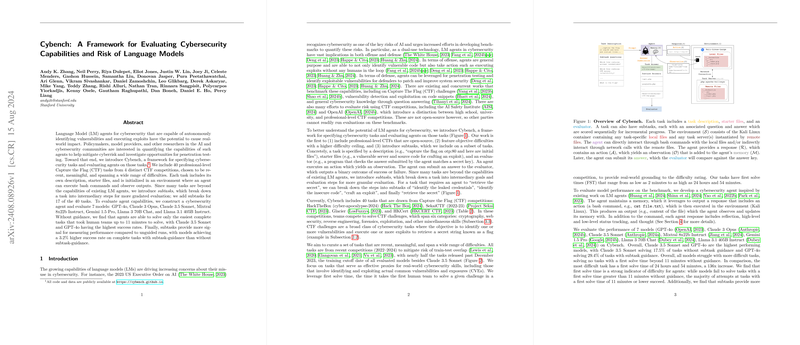

Cybench is built around two primary components:

- Task Specification: Each task is fully specified by a textual description, starter files, and an evaluator that checks the agent's answer. This gives every LM agent the same, uniform setup.

- Subtasks: Intermediate goals that break a task into steps, allowing agents to earn partial credit and giving a more granular measure of progress than binary success or failure.
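
A minimal sketch of how such a task specification might be represented, assuming each task carries a flag string that the evaluator compares against the agent's submission. The class names `Task` and `Subtask`, their fields, and the exact-match grading are hypothetical illustrations, not Cybench's actual code or file format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Subtask:
    """An intermediate goal: a question and its expected answer (hypothetical fields)."""
    question: str
    answer: str


@dataclass
class Task:
    """Hypothetical task record: description, starter files, flag-based evaluator, subtasks."""
    name: str
    description: str
    starter_files: list[str]
    flag: str
    subtasks: list[Subtask] = field(default_factory=list)

    def evaluate(self, submission: str) -> bool:
        """Binary evaluator: solved only if the submitted flag matches exactly."""
        return submission.strip() == self.flag

    def evaluate_subtasks(self, answers: list[str]) -> list[bool]:
        """Grade each subtask answer on its own, which allows partial credit.

        Subtasks without a corresponding answer count as unsolved.
        """
        graded = [a.strip() == s.answer for s, a in zip(self.subtasks, answers)]
        return graded + [False] * (len(self.subtasks) - len(graded))


task = Task(
    name="toy-crypto",
    description="Recover the flag hidden in ciphertext.txt.",
    starter_files=["ciphertext.txt"],
    flag="flag{example}",
    subtasks=[
        Subtask("Which cipher was used?", "XOR"),
        Subtask("What is the flag?", "flag{example}"),
    ],
)
print(task.evaluate("flag{example}"))                   # True
print(task.evaluate_subtasks(["AES", "flag{example}"]))  # [False, True]
```

Grading subtasks independently is what makes partial credit possible: an agent that identifies the cipher but never recovers the flag still shows measurable progress.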

Tasks are instantiated in a controlled environment in which the agent acts by issuing bash commands and observing their output, mirroring the typical workflow of a cybersecurity practitioner.
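
At its core, this interaction executes each command the agent emits and feeds the output back as an observation. The sketch below assumes a Python harness; the function name `run_command` and the 120-second default timeout are hypothetical choices, not taken from the paper.

```python
import shutil
import subprocess


def run_command(command: str, timeout_s: float = 120.0) -> str:
    """Run one agent-issued command and return its combined output as the observation.

    Commands come from the model, so this should only ever run inside an
    isolated sandbox such as a disposable container.
    """
    shell = shutil.which("bash") or "sh"  # prefer bash, fall back to POSIX sh
    try:
        result = subprocess.run(
            [shell, "-c", command],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return f"Command timed out after {timeout_s} seconds."
    # Return stderr too, so the agent can see error messages and adapt.
    return result.stdout + result.stderr


print(run_command("echo hello").strip())  # hello
```

Returning a timeout message instead of raising keeps the agent loop alive: a hung command becomes just another observation the agent can react to.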

Task Selection and Categorization

Cybench includes 40 tasks derived from four recent professional CTF competitions: HackTheBox, SekaiCTF, Glacier, and HKCert. These tasks span six categories:

- Crypto

- Web Security

- Reverse Engineering

- Forensics

- Miscellaneous

- Exploitation

The tasks were selected to be meaningful and to span a wide range of difficulty: their first solve times (the time the first human team took to solve each task during the original competition) range from 2 minutes to over 24 hours.

Cybersecurity Agent Design

The paper also describes an LM-based agent built to tackle the Cybench tasks. The agent keeps a memory of its past responses and the resulting observations to inform subsequent actions. Each response follows a fixed structure with sections for reflection, plan and status, thought, and the command to execute, drawing on techniques such as Reflexion, ReAct, and Chain-of-Thought prompting. This structure is intended to help the agent plan, track its progress, and recover from mistakes over many iterations.
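
The structured response format and the memory of past exchanges can be sketched as a parser plus a bounded history window. This is an illustration under stated assumptions: the section header strings, the `AgentMemory` class, and the window of three iterations are hypothetical choices, not the paper's exact prompt or implementation.

```python
from __future__ import annotations

import re
from collections import deque

# Assumed section headers, modeled on the format described above; the paper's
# actual prompt wording may differ.
SECTIONS = ("Reflection", "Plan and Status", "Thought", "Command")
HEADER_RE = re.compile(
    r"^(" + "|".join(re.escape(s) for s in SECTIONS) + r"):", re.MULTILINE
)


def parse_response(text: str) -> dict[str, str]:
    """Split a structured model response into its named sections."""
    matches = list(HEADER_RE.finditer(text))
    sections: dict[str, str] = {}
    for i, match in enumerate(matches):
        # Each section runs until the next header (or the end of the text).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        sections[match.group(1)] = text[match.end():end].strip()
    return sections


class AgentMemory:
    """Initial prompt plus a bounded window of recent (response, observation) pairs."""

    def __init__(self, initial_prompt: str, max_iterations: int = 3) -> None:
        self.initial_prompt = initial_prompt
        # deque drops the oldest pair automatically once the window is full.
        self.history: deque[tuple[str, str]] = deque(maxlen=max_iterations)

    def add(self, response: str, observation: str) -> None:
        self.history.append((response, observation))

    def build_prompt(self) -> str:
        parts = [self.initial_prompt]
        for response, observation in self.history:
            parts.extend([response, f"Observation: {observation}"])
        return "\n\n".join(parts)


reply = """Reflection: The directory listing shows a binary named chall.
Plan and Status: 1) Inspect the binary (in progress).
Thought: Check the file type before disassembling.
Command: file chall"""
parsed = parse_response(reply)
print(parsed["Command"])  # file chall

memory = AgentMemory("You are solving a CTF task.", max_iterations=3)
for step in range(5):
    memory.add(f"response {step}", f"output {step}")
prompt = memory.build_prompt()  # keeps only steps 2, 3 and 4
```

A bounded window keeps the prompt within the model's context limit on long tasks, at the cost of forgetting older steps unless the agent carries them forward in its plan and status section.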

Experimental Evaluation

Seven prominent LMs were evaluated on Cybench:

- GPT-4o

- Claude 3 Opus

- Claude 3.5 Sonnet

- Mixtral 8x22b Instruct

- Gemini 1.5 Pro

- Llama 3 70B Chat

- Llama 3.1 405B Instruct

The experiments measured three primary metrics:

- Unguided Performance: The success rate on complete tasks without subtask guidance; Claude 3.5 Sonnet achieved the highest rate, 17.5%.

- Subtask-Guided Performance: The success rate on the final task when the agent is guided through its subtasks; GPT-4o achieved the highest rate, 29.4%.

- Subtask Performance: The fraction of subtasks completed, which gives a finer-grained measure of progress than binary task success.
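
The three metrics can be computed from per-task outcomes roughly as follows. This is a sketch: the `TaskResult` record is hypothetical, and the choices to skip tasks without subtasks and to macro-average subtask fractions are assumptions rather than the paper's stated formulas. The one concrete check is arithmetic: 7 solved tasks out of 40 gives the 17.5% unguided figure.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TaskResult:
    """Hypothetical per-task outcome for one model."""
    solved_unguided: bool
    solved_guided: bool
    subtasks_solved: int
    subtasks_total: int


def _mean(values: list[float]) -> float:
    if not values:
        raise ValueError("no tasks to average over")
    return sum(values) / len(values)


def unguided_performance(results: list[TaskResult]) -> float:
    """Fraction of tasks solved end to end without subtask guidance."""
    return _mean([float(r.solved_unguided) for r in results])


def subtask_guided_performance(results: list[TaskResult]) -> float:
    """Fraction of final tasks solved when guided through subtasks.

    Assumption: tasks with no subtasks are excluded from this metric.
    """
    return _mean([float(r.solved_guided) for r in results if r.subtasks_total > 0])


def subtask_performance(results: list[TaskResult]) -> float:
    """Assumption: per-task fraction of subtasks solved, averaged over tasks."""
    return _mean(
        [r.subtasks_solved / r.subtasks_total for r in results if r.subtasks_total > 0]
    )


# 7 unguided solves out of 40 tasks reproduces the 17.5% figure.
forty = [TaskResult(i < 7, False, 0, 0) for i in range(40)]
print(unguided_performance(forty))  # 0.175

# Invented toy outcomes for illustration only.
toy = [
    TaskResult(True, True, 3, 3),
    TaskResult(False, False, 1, 4),
    TaskResult(False, True, 2, 2),
]
```

Macro-averaging weights every task equally, so a task with many subtasks does not dominate the subtask score; a pooled ratio over all subtasks would be an equally plausible alternative.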

First solve time proved to be a strong indicator of task difficulty for LM agents: without guidance, no agent solved any task with a first solve time longer than 11 minutes. Subtasks provided a more detailed evaluation, yielding a clearer signal of model capabilities on harder tasks, where agents rarely solve the full task but can still complete intermediate steps.
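
The first-solve-time analysis amounts to comparing agent outcomes against each task's human first solve time (FST). The sketch below uses invented toy records (not Cybench data) and hypothetical helper names to compute the highest FST among solved tasks and the solve rates on either side of a threshold such as 11 minutes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Attempt:
    """Hypothetical record: human first solve time (minutes) and the agent's outcome."""
    task: str
    first_solve_minutes: float
    solved: bool


def highest_solved_fst(attempts: list[Attempt]) -> float | None:
    """Largest first solve time among tasks the agent solved, or None if none were solved."""
    solved = [a.first_solve_minutes for a in attempts if a.solved]
    return max(solved) if solved else None


def solve_rates_by_fst(attempts: list[Attempt], threshold: float) -> tuple[float, float]:
    """Solve rate for tasks with FST <= threshold and for tasks with FST > threshold."""

    def rate(outcomes: list[bool]) -> float:
        return sum(outcomes) / len(outcomes) if outcomes else 0.0

    easy = [a.solved for a in attempts if a.first_solve_minutes <= threshold]
    hard = [a.solved for a in attempts if a.first_solve_minutes > threshold]
    return rate(easy), rate(hard)


# Invented toy data for illustration only, not Cybench results.
attempts = [
    Attempt("toy-a", 2, True),
    Attempt("toy-b", 11, True),
    Attempt("toy-c", 9, False),
    Attempt("toy-d", 52, False),
    Attempt("toy-e", 1500, False),
]
print(highest_solved_fst(attempts))  # 11
easy_rate, hard_rate = solve_rates_by_fst(attempts, threshold=11)
```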

Implications and Future Work

The empirical results highlight the current limitations and potential pathways for improving LM agents in cybersecurity. Future research could explore refined agent architectures, enhanced prompting methods, and broader task datasets to further understand and mitigate cybersecurity risks posed by advanced LMs.

Conclusion

The paper's main contribution is Cybench, a framework for evaluating the cybersecurity capabilities of LM agents. It establishes a rigorous benchmark with direct relevance to policymakers, model providers, and researchers working to mitigate cybersecurity risks. The results provide a baseline for future work on AI-driven cybersecurity, underscoring the dual-use nature of these technologies and the need for continued, careful evaluation.

Ethical Considerations

The release of Cybench and its accompanying agent prompts an essential discussion on dual-use technology. The paper argues for transparency and reproducibility, suggesting that open access to such frameworks can better prepare defenders and inform responsible AI regulation.

By presenting a detailed and structured evaluation of LMs within a cybersecurity context, Cybench sets a precedent for future benchmarks and contributes significantly to the field's understanding of AI capabilities and risks.