Interpretable Mental Health Analysis on Social Media Using LLMs

The paper "MentaLLaMA: Interpretable Mental Health Analysis on Social Media with LLMs" advances NLP-based mental health analysis. It introduces MentaLLaMA, an open-source series of LLMs designed for interpretable mental health analysis on social media platforms. The work is timely, given the growing reliance on digital communication and the need for accurate mental health assessment and intervention tools.

Key Contributions and Methodology

The paper addresses the limitations of traditional discriminative methods, which tend to generalize poorly and offer little interpretability. Instead, it builds on recent LLMs, which have shown promise in generating detailed, human-like text explanations. This capability matters for mental health analysis, where understanding the underlying reasoning is as important as the prediction itself.

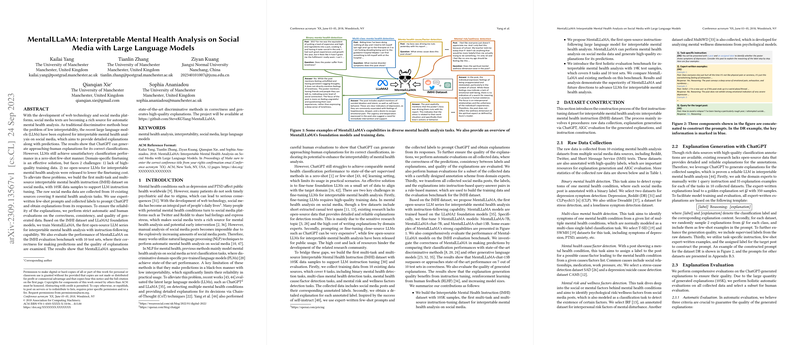

The authors introduce the Interpretable Mental Health Instruction (IMHI) dataset, which comprises 105,000 data samples drawn from ten existing social media data sources. The dataset covers a diverse range of mental health tasks, including depression detection, stress analysis, and identifying the underlying causes of mental health conditions. The explanations were generated by prompting ChatGPT with expert-crafted queries, with the aim of making them both contextually accurate and informative. This care in dataset construction likely contributes to the model's success.
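The prompting step described above can be illustrated with a minimal sketch. It assumes a few-shot layout in which each expert-written demonstration pairs a post and its label with an explanation, and the final block leaves the explanation open for the model to complete. The `Demo` class, the `build_explanation_prompt` function, the prompt wording, and the sample posts are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Demo:
    """One expert-written demonstration (hypothetical structure)."""
    post: str
    label: str
    explanation: str


def build_explanation_prompt(task: str, demos: List[Demo], post: str, label: str) -> str:
    """Assemble a few-shot prompt asking an LLM to explain a known label."""
    parts = [f"Task: {task}", "Explain the reasoning behind each label."]
    for d in demos:
        parts.append(f"Post: {d.post}\nLabel: {d.label}\nExplanation: {d.explanation}")
    # The final block leaves the explanation open for the model to complete.
    parts.append(f"Post: {post}\nLabel: {label}\nExplanation:")
    return "\n\n".join(parts)


# Illustrative inputs only; not drawn from the IMHI dataset.
demos = [
    Demo(
        post="I can't sleep and nothing feels worth doing.",
        label="depression",
        explanation="The post reports insomnia and loss of interest.",
    )
]
prompt = build_explanation_prompt(
    "depression detection", demos, "Deadlines keep piling up at work.", "no depression"
)
print(prompt)
```

The resulting string would be sent to a chat model, and the returned explanation stored alongside the post and label. The call to the model is omitted here because it depends on the provider's client library.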

Strong Numerical Findings and Evaluation

MentaLLaMA models approach the currently leading, albeit opaque, discriminative methods in predictive correctness. Notably, the explanations they generate are of human-level quality. The models also generalize well, performing consistently on unseen tasks.

For evaluation, the authors use a holistic benchmark that considers both the correctness of predictions and the coherence and relevance of the generated explanations. Automatic evaluation is supplemented by human annotation to check that the generated explanations are reliable and relevant. The MentaLLaMA-chat-13B model in particular stands out, balancing high correctness with high-quality explanations.
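The correctness half of such an evaluation can be sketched as follows. Because the model emits free text, a label must first be parsed out of each response before a classification metric can be computed. The sketch assumes a binary yes/no label set, a naive first-match parsing rule, and support-weighted F1 as the metric. All three are illustrative assumptions, and explanation quality is not scored here.

```python
import re
from collections import Counter


def extract_label(response):
    """Return the first 'yes' or 'no' word in a generated response, else None.

    Naive rule for illustration: a real parser would need task-specific logic.
    """
    match = re.search(r"\b(yes|no)\b", response.lower())
    return match.group(1) if match else None


def weighted_f1(gold, pred):
    """Support-weighted average of per-class F1 scores over the gold labels."""
    support = Counter(gold)
    total = 0.0
    for label, count in support.items():
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        total += f1 * count
    return total / len(gold)


# Made-up responses; the third contains no parsable label and counts as a miss.
responses = [
    "Yes, the poster describes persistent hopelessness.",
    "No. The post is about a routine work schedule.",
    "Reasoning unclear.",
    "yes, the post mentions loss of interest.",
]
gold = ["yes", "no", "no", "yes"]
pred = [extract_label(r) for r in responses]
score = weighted_f1(gold, pred)
print(round(score, 4))
```

Treating an unparsable response as an incorrect prediction is one possible design choice. It penalizes a generative model for failing to commit to an answer, which a discriminative classifier cannot do.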

Implications and Future Directions

The work has implications for both theory and practice in AI. By formalizing mental health analysis as a text generation task, it opens new avenues for using LLMs in sensitive domains where interpretability is key. Because the MentaLLaMA models are publicly available, future research on mental health can build directly on this work.
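To make the text generation framing concrete, the sketch below converts a labeled classification sample into a query and response pair of the kind used for instruction tuning, where the target text carries both the label and its explanation. The `to_generation_pair` function and the template wording are hypothetical and only approximate the general idea.

```python
def to_generation_pair(post, question, label, explanation):
    """Turn a labeled sample into a (query, response) pair for text generation."""
    query = f'Consider this post: "{post}" Question: {question}'
    # The response states the label first, then the reasoning behind it.
    response = f"{label}. Reasoning: {explanation}"
    return {"query": query, "response": response}


# Illustrative sample only.
pair = to_generation_pair(
    post="I have been skipping meals and avoiding my friends for weeks.",
    question="Does the poster show signs of depression?",
    label="Yes",
    explanation="The post describes appetite changes and social withdrawal.",
)
print(pair["query"])
print(pair["response"])
```

Under this framing a single generative model can serve several tasks, since the task is specified by the question in the query rather than by a task-specific output layer.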

Practically, MentaLLaMA models could contribute to public health by providing initial assessments based on social media activity, flagging potential mental health issues for further professional review. Future research may explore fine-tuning these models on domain-specific texts to improve their contextual understanding and domain expertise, particularly for medical or psychological applications.

Despite these advances, the authors acknowledge that current models have limited domain-specific knowledge, and they suggest continual pre-training on specialized data as a route to improvement. Developing automatic evaluation metrics for interpretability that align better with human judgment also remains a promising area of exploration.

Conclusion

In summary, this paper helps bridge the gap between the growing need for mental health analysis tools and the current capabilities of LLMs. By providing interpretable, high-quality insights from social media texts, MentaLLaMA offers an open-source baseline for the field. Its implications are wide-reaching, with the potential not only to enhance public health interventions but also to inform future research on the design and deployment of AI models in sensitive contexts.