Towards Interpretable Mental Health Analysis with LLMs

The paper "Towards Interpretable Mental Health Analysis with LLMs" presents a comprehensive empirical study of the capabilities of large language models (LLMs), specifically ChatGPT, for mental health analysis and emotional reasoning. It evaluates LLMs across multiple datasets and tasks, identifies limitations of current approaches, and suggests improvements through better prompt engineering.

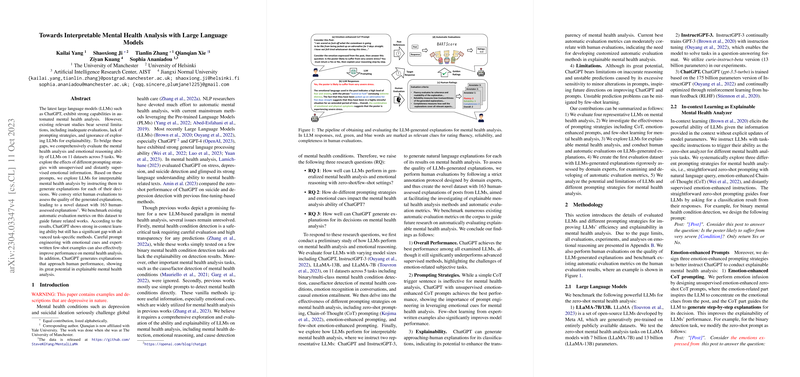

The authors focus on three research questions: how well LLMs perform at mental health analysis in general, how prompting strategies affect these models, and whether LLMs can generate interpretable explanations for their decisions. The paper evaluates four LLMs, namely ChatGPT, InstructGPT-3, LLaMA-7B, and LLaMA-13B, on eleven datasets covering five tasks: binary mental health condition detection, multi-class mental health condition detection, cause/factor detection, emotion recognition in conversations, and causal emotion entailment.

Key Findings and Results

- Overall Performance: ChatGPT outperforms the other LLMs evaluated, LLaMA and InstructGPT-3, on all tasks. However, it still falls well short of state-of-the-art supervised methods, reflecting the difficulty of emotion-related and subjective tasks.

- Prompting Strategies: The paper shows that prompt engineering is crucial to the mental health analysis capabilities of LLMs. Emotion-enhanced Chain-of-Thought (CoT) prompting considerably improves ChatGPT's performance, and few-shot prompting with expert-written examples improves it further, particularly on complex tasks.

- Explainability: ChatGPT generates explanations for its predictions that approach human quality, underlining its potential for explainable AI in mental health. However, the paper also discusses its limitations, namely sensitivity to prompt variations and inaccurate reasoning, which can lead to unstable or erroneous predictions.

- Automatic Evaluation: The authors build a new dataset of human-annotated, LLM-generated explanations that enables evaluation of such explanations. Benchmarking automatic evaluation metrics against these human annotations, they find that existing metrics correlate moderately with human judgements but need further adaptation for explainable mental health analysis.
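
Benchmarking a metric against human annotations usually comes down to correlating two lists of scores for the same explanations. The following is a minimal sketch of that comparison. The summary does not say which correlation statistic the authors used, so this example assumes Spearman's rank correlation (implemented by hand with the standard library), and the scores are invented placeholders, not the paper's data.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two items")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (sx * sy)


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Hypothetical data: one automatic-metric score and one human rating
# (1 = poor, 3 = good) per generated explanation.
metric_scores = [0.61, 0.72, 0.55, 0.80, 0.67]
human_ratings = [2, 3, 1, 3, 2]
print(round(spearman(metric_scores, human_ratings), 3))  # 0.949
```

A rank-based statistic is a natural fit here because human ratings are typically coarse ordinal labels with many ties, which the average-rank handling accounts for.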

Methodological Approaches

The paper compares zero-shot, emotion-enhanced, and few-shot prompts to examine how the context supplied in the prompt affects model performance. The authors also conduct rigorous human evaluations, producing a new dataset of annotated explanations that serves as a foundation for future work on explainability in mental health analysis.
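
To make the three prompt variants concrete, here is a minimal Python sketch of how such prompts might be assembled. The `Demo` class, the `build_prompt` function, the strategy names, and all template wording are hypothetical illustrations, not the prompts used in the paper; the call to the model itself is omitted.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Demo:
    """One expert-written demonstration for few-shot prompting (hypothetical)."""
    post: str
    answer: str
    explanation: str


def build_prompt(post: str, question: str, strategy: str = "zero_shot",
                 emotion_hint: str = "", demos: Sequence[Demo] = ()) -> str:
    """Assemble a prompt for one of three illustrative strategies:
    "zero_shot", "emotion_cot" or "few_shot".

    All template wording is a placeholder, not the paper's actual prompts.
    """
    if strategy not in ("zero_shot", "emotion_cot", "few_shot"):
        raise ValueError(f"unknown strategy: {strategy}")
    parts = []
    if strategy == "few_shot":
        if not demos:
            raise ValueError("few_shot needs at least one demonstration")
        # Prepend worked examples: post, answer and expert reasoning.
        for d in demos:
            parts.append(f'Post: "{d.post}"\n{question}\n'
                         f"Answer: {d.answer}\nReasoning: {d.explanation}\n")
    parts.append(f'Post: "{post}"\n{question}')
    if strategy == "emotion_cot":
        # Emotion-enhanced chain of thought: point the model at emotional
        # cues and ask for step-by-step reasoning before the answer.
        if emotion_hint:
            parts.append(f"Emotional cues in the post: {emotion_hint}.")
        parts.append("Consider the emotions expressed in the post, think step "
                     "by step, then give your answer and reasoning.")
    else:
        parts.append("Give your answer, then explain your reasoning.")
    return "\n".join(parts)


question = "Is the poster likely to suffer from depression? Answer yes or no."
print(build_prompt("I can't sleep and nothing feels worth doing anymore.",
                   question, strategy="emotion_cot",
                   emotion_hint="sadness, hopelessness"))
```

Keeping the strategy as a parameter makes it easy to run the same posts through each variant and compare the resulting predictions, which is also a simple way to probe the sensitivity to prompt variations that the paper reports.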

Implications and Future Work

The paper demonstrates the potential of LLMs, especially ChatGPT, for tasks that require sophisticated emotional understanding and transparent decision-making. It calls for further work on prompt engineering, the incorporation of expert knowledge, and possibly domain-specific fine-tuning of LLMs to address the remaining weaknesses in emotional reasoning and prediction stability.

The implications for AI in mental health are significant: future AI systems could support healthcare professionals by providing accurate and interpretable analyses of mental health conditions. Nonetheless, the paper acknowledges ethical considerations and urges caution in deploying such systems in real-world settings, given their current limitations.

Overall, this research contributes a systematic evaluation of the explainability and generalization of LLMs in mental health analysis, highlighting the importance of emotional cues and the potential for AI to contribute positively to this sensitive, high-impact domain.