Explainability for LLMs: A Survey

The paper "Explainability for LLMs: A Survey" provides a structured taxonomy and overview of techniques for explaining Transformer-based LLMs. LLMs such as BERT, GPT-3, and GPT-4 perform strongly across diverse natural language processing tasks, but their inner workings remain opaque, and that opacity poses risks when the models are deployed in downstream applications. The paper takes this opacity as its motivation for surveying interpretability methods.

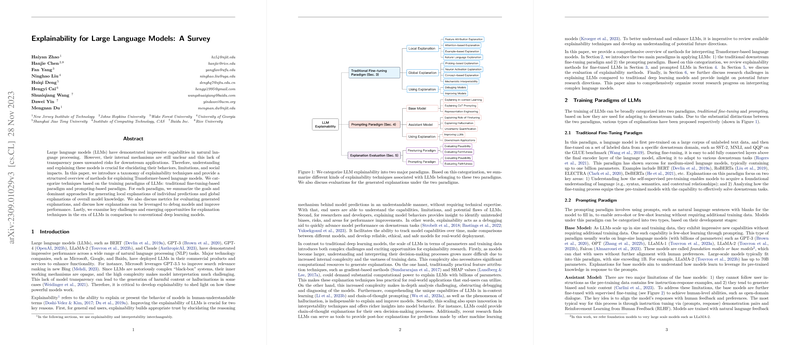

Taxonomy and Training Paradigms

The authors categorize explainability strategies based on two principal LLM training paradigms: the traditional fine-tuning-based paradigm and the prompting-based paradigm. In the fine-tuning paradigm, models are pre-trained on a broad corpus and then fine-tuned for specific tasks, while the prompting paradigm leverages pre-trained models to generate predictions through contextual prompts without additional downstream training.

Local and Global Explanation Techniques

For each paradigm, the paper reviews methods for local (instance-specific) and global explanations. The local techniques discussed include feature attribution (perturbation-based and gradient-based), attention visualization and analysis, and example-based explanations such as adversarial examples. Global explanations, by contrast, aim to characterize model-wide behavior using approaches such as probing, neuron activation analysis, and concept-based explanations. These techniques help identify the linguistic properties and knowledge encoded within the models.
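To make the perturbation-based idea concrete, here is a minimal sketch of leave-one-out (occlusion) attribution: each token is masked in turn, and the change in the model's score is taken as that token's importance. This is an illustration, not the survey's own code. The scoring function `toy_sentiment_score`, its word weights, and the `[MASK]` placeholder are hypothetical stand-ins for a real model's output on the predicted class.

```python
from typing import Callable, List, Tuple


def toy_sentiment_score(tokens: List[str]) -> float:
    """Hypothetical stand-in for a model's positive-class score."""
    weights = {"great": 2.0, "good": 1.0, "bad": -1.5, "terrible": -2.5}
    return sum(weights.get(tok.lower(), 0.0) for tok in tokens)


def occlusion_attributions(
    tokens: List[str],
    score_fn: Callable[[List[str]], float],
    mask_token: str = "[MASK]",
) -> List[Tuple[str, float]]:
    """Attribute the score to each token by masking it and measuring the drop."""
    base = score_fn(tokens)
    attributions = []
    for i, tok in enumerate(tokens):
        # Replace only position i, leaving every other token untouched.
        perturbed = tokens[:i] + [mask_token] + tokens[i + 1:]
        attributions.append((tok, base - score_fn(perturbed)))
    return attributions


tokens = "The plot was great but the ending was bad".split()
attributions = occlusion_attributions(tokens, toy_sentiment_score)
for tok, value in attributions:
    print(f"{tok:>8s} {value:+.2f}")
```

In this toy setting "great" receives a positive attribution and "bad" a negative one, while the remaining tokens score zero. With a real LLM, `score_fn` would wrap a forward pass, so the procedure costs one extra model call per token.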

Usage and Future Directions

The paper also considers how explanations can aid in debugging and improving model performance. Explanation-based debugging helps identify biases such as over-reliance on spurious correlations, while explanation-based model improvement techniques can contribute to better robustness and generalization in model predictions. Furthermore, the paper explores the impact of model explainability on responsible AI practices, emphasizing the need for techniques that align with ethical guidelines and ensure reliability and transparency in model outputs.

Evaluation Challenges

Evaluating LLM explanations remains difficult. The paper discusses methods for evaluating faithfulness (whether an explanation reflects the model's actual decision process) and plausibility (whether humans find the explanation convincing), but acknowledges that universally accepted ground truth for evaluation is hard to obtain. Without standardized criteria, the paper notes, it is hard to compare the effectiveness of different explainability techniques.
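One common way to approximate faithfulness without human-labeled ground truth is a deletion test, often called comprehensiveness: remove the tokens an explanation ranks highest and check how far the model's score falls. The sketch below is an illustration under assumptions, not the paper's evaluation protocol. The model `toy_score`, its weights, and both attribution vectors are hypothetical.

```python
from typing import Callable, List, Sequence


def toy_score(tokens: List[str]) -> float:
    """Hypothetical stand-in for a model's score on the predicted class."""
    weights = {"great": 2.0, "cheap": 1.0}
    return sum(weights.get(tok, 0.0) for tok in tokens)


def comprehensiveness(
    tokens: List[str],
    attributions: Sequence[float],
    score_fn: Callable[[List[str]], float],
    k: int,
) -> float:
    """Score drop after deleting the k tokens with the highest attribution."""
    ranked = sorted(range(len(tokens)), key=lambda i: attributions[i], reverse=True)
    top_k = set(ranked[:k])
    reduced = [tok for i, tok in enumerate(tokens) if i not in top_k]
    return score_fn(tokens) - score_fn(reduced)


tokens = ["the", "food", "was", "great", "and", "cheap"]
faithful = [0.0, 0.0, 0.0, 2.0, 0.0, 1.0]    # points at the tokens the model uses
unfaithful = [2.0, 1.0, 0.0, 0.0, 0.0, 0.0]  # points at irrelevant tokens

faithful_drop = comprehensiveness(tokens, faithful, toy_score, k=2)
unfaithful_drop = comprehensiveness(tokens, unfaithful, toy_score, k=2)
print(faithful_drop, unfaithful_drop)
```

A larger drop suggests the explanation identified tokens the model actually relies on. The metric says nothing about plausibility, which still requires human judgment, and deleting tokens can push inputs out of distribution, which is one reason such scores are hard to compare across techniques.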

Implications for AI Research

The survey has both practical and theoretical implications for AI research. The growing use of these models in sensitive domains such as healthcare, finance, and law makes the development of robust explainability frameworks urgent. Furthermore, because LLMs increasingly shape content generation, their outputs must be ethical and intelligible if they are to align with societal values.

In synthesizing current explainability techniques with potential future directions, the paper offers a broad guide to contemporary approaches and highlights open research challenges such as attention redundancy, shortcut learning, and understanding the emergent capabilities of LLMs. These insights can help steer future research toward more interpretable AI systems.