Evaluating Human Alignment and Model Faithfulness of LLM Rationale

The paper "Evaluating Human Alignment and Model Faithfulness of LLM Rationale" addresses a critical aspect of interpretability within the field of LLMs, specifically focusing on how well these models can explain their predictions through rationales. Rationales are defined as sets of tokens from input texts that reflect the decision-making process of the LLMs. The paper evaluates these rationales based on two key properties: human alignment and model faithfulness. Through extensive experimentation, the authors compare attribution-based and prompting-based methods of extracting rationales.

Methodology Overview

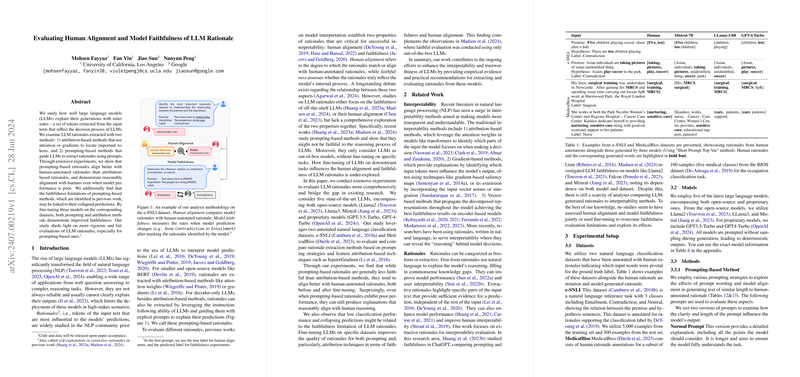

The paper employs five state-of-the-art LLMs, both open-source (e.g., Llama2, Llama3, Mistral) and proprietary (e.g., GPT-3.5-Turbo, GPT-4-Turbo), across two annotated datasets: e-SNLI and MedicalBios. Attribution-based methods leverage inner model mechanisms like attention weights and input gradients to locate important tokens. In contrast, prompting-based methods use carefully crafted prompts to guide the LLMs in generating rationales.

Alignment and Faithfulness Evaluation

The human alignment of rationales is measured by comparing them with human-annotated rationales using the F1 score. In contrast, model faithfulness is evaluated through perturbation-based experiments which measure the flip rate—the frequency of changes in model predictions when identified important tokens are masked.

Key Findings

- Prompts vs. Attribution Methods: Prompting-based methods generally outperform attribution-based methods in human alignment across both datasets and models. Specifically, tailored prompts (short or normal) resulted in superior alignment scores. However, these prompting methods showed variability across different models and datasets, highlighting the sensitivity of these approaches to prompt design.

- Fine-tuning Effects: Fine-tuning LLMs on specific datasets significantly improves both human alignment and faithfulness. This is particularly notable for models like Llama-2 and Mistral, which displayed near-random performance on the e-SNLI dataset when used out-of-box. Fine-tuning increases the ability of models to align with human rationales and lead to more faithful rationales as assessed by perturbation experiments.

- Faithfulness Limitations Pre-Fine-tuning: Before fine-tuning, the models displayed minimal changes in prediction when important tokens in the input sentences were masked, suggesting a lack of genuine interpretability. This limitation is attributed to models focusing disproportionately on instructional tokens rather than the input text itself.

- Comparative Faithfulness: After fine-tuning, attribution-based methods generally provided more faithful rationales compared to prompting-based methods. Additionally, human rationales induced higher flip rates than model-generated rationales, which underscores the gap in interpretability and the potential for further method improvements.

Implications and Future Directions

The results of this paper have significant implications for both the practical deployment and theoretical understanding of LLMs:

- Deployment in High-Stakes Scenarios: The inability of LLMs to provide human-aligned and faithful rationales precludes their reliable deployment in high-stakes applications. This necessitates strategies for better fine-tuning and potentially new methods of rationale extraction.

- Refinement of Explanation Methods: There is a clear need for refining prompting strategies and developing new attribution-based methods that can more accurately capture and convey the decision-making processes of LLMs.

- Investigating Instruction Adherence: The reliance on instructional tokens reveals a deeper issue with how LLMs parse and prioritize different parts of input. Future research should explore methods that encourage models to focus more on the input text and less on repeated instructional cues.

Conclusion

This comprehensive survey of LLM rationales, focusing on human alignment and faithfulness, provides crucial insights and diagnostic evaluations. By highlighting both the strengths and limitations of existing methods, this paper lays the groundwork for future advancements in making LLMs not only powerful but also transparent and trustworthy. Fine-tuning emerges as a pivotal step in improving model interpretability, suggesting that continuous development in this direction will be vital for the more responsible use of LLMs.

In summary, the paper "Evaluating Human Alignment and Model Faithfulness of LLM Rationale" represents a significant empirical analysis that addresses current pitfalls in the interpretability of LLMs, providing a pathway for subsequent research efforts and practical improvements in model deployment.