Introduction to Interpretability and LLMs

Interpretable machine learning has become an integral part of developing effective and trustworthy AI systems. With the emergence of LLMs, there is now an unprecedented opportunity to reshape the field of interpretability. LLMs, with their expansive datasets and neural networks, outperform traditional methods in complex tasks and provide natural language explanations that can communicate intricate data patterns to users. However, these advancements come with their own set of concerns such as the generation of incorrect or baseless explanations (hallucination) and substantial computational costs.

Rethinking Interpretation Methods

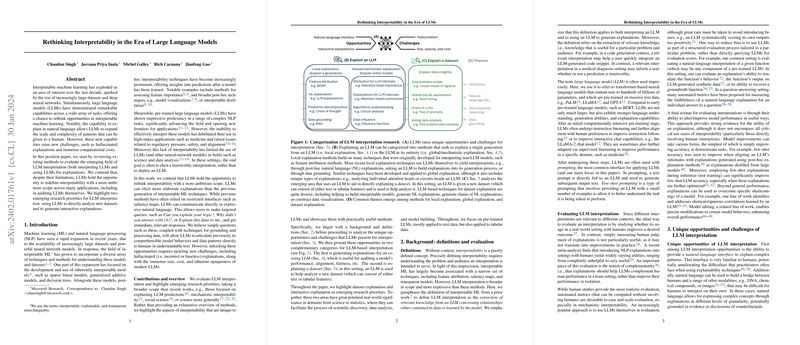

The paper under consideration expounds on the dual role of LLMs—both as objects of interpretability and as tools for generating explanations of other systems. Traditional techniques offer insights into predictions made by models, like feature importance, but they present limitations, especially when evaluating complex (and often opaque) LLM behaviors. Crucially, the approach of soliciting direct natural language explanations from LLMs opens the door to user-friendly interpretations without complex technical jargon. Nevertheless, to leverage this capability, one must confront new issues like ensuring the validity of LLM explanations and managing the prohibitive size of state-of-the-art models.

Challenges and New Research Avenues

The authors underscore the need to develop effective solutions to combat hallucinated explanations, which can mislead users and erode trust in AI systems. Moreover, they emphasize the importance of creating accessible and efficient interpretability methods for LLMs that have grown beyond the capacity for conventional analysis techniques. The paper neatly categorizes research into explaining a single output from an LLM (local explanation) and understanding the LLM as a whole (global or mechanistic explanation). Notably, cutting-edge LLMs can integrate explanation directly within the generation process, yielding more faithful and accurate reasoning through techniques such as chain-of-thought prompting. Another focal area is dataset explanation, where LLMs help analyze and elucidate patterns within datasets, potentially transforming areas like scientific discovery and data analysis.

Future Priorities and Conclusion

The paper concludes by spotlighting matters vital to advancing interpretability research. These include bolstering explanation reliability, fostering dataset explanation for genuine knowledge discovery, and developing interactive explanations that align with specific user needs. The future trajectory of LLMs in interpretability hinges on addressing these challenges; strategic emphasis in these areas could accelerate the progression towards reliable, user-oriented explanations. As the complexity of available information grows, so too does the significance of LLMs in translating this complexity into comprehensible insights, promising a new chapter in the synergy between AI and human understanding.

Overall, this paper illustrates not merely incremental improvements but a paradigm shift in how we conceptualize and leverage interpretability in the age of LLMs, with vast implications for the broader AI industry and numerous high-stakes domains.