A Comprehensive Survey on Instruction Tuning for LLMs

Introduction to Instruction Tuning

Instruction tuning (IT) is a technique for refining large language models (LLMs) so that they follow human instructions more reliably. It reconciles the next-word prediction objective on which LLMs are pretrained with the explicit instruction-following behavior that end users expect. In IT, a pretrained model is further trained, in a supervised manner, on a dataset of instruction-output pairs, narrowing the gap between the model's predictive behavior and the fulfillment of user intent.

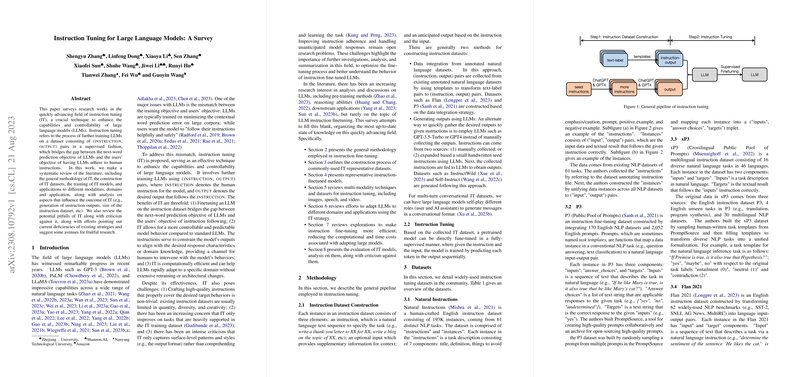

Methodology Overview

The core of IT is the construction of instruction datasets. Each entry consists of an instruction (a natural language directive), an optional input that provides context, and the expected output for that instruction and input. Two primary methods for dataset construction have emerged: transforming existing annotated natural language datasets into instruction-output pairs, and generating outputs directly with LLMs to collect data quickly. The model is then fine-tuned on the resulting dataset, learning to produce the output token by token, conditioned on the instruction and input.
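The entry structure and the two-step flow described above (build a prompt, then train on the output) can be sketched as follows. This is a minimal illustration, not the survey's reference implementation: the `InstructionExample` class, the prompt template, the whitespace tokenizer, and the `from_labeled_example` helper are all hypothetical names chosen for this example. Restricting the loss to output tokens is a common practice assumed here rather than something the text prescribes.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class InstructionExample:
    """One dataset entry: instruction, expected output, optional input context."""
    instruction: str
    output: str
    input: Optional[str] = None


def from_labeled_example(text: str, label: str) -> InstructionExample:
    """Turn an annotated (text, label) pair into an instruction entry.

    The instruction wording is a hypothetical template.
    """
    return InstructionExample(
        instruction="Classify the sentiment of the following text.",
        input=text,
        output=label,
    )


def build_prompt(example: InstructionExample) -> str:
    """Render the instruction and optional input (template is an assumption)."""
    parts = [f"Instruction: {example.instruction}"]
    if example.input:
        parts.append(f"Input: {example.input}")
    parts.append("Output:")
    return "\n".join(parts)


def tokenize(text: str) -> List[str]:
    """Toy whitespace tokenizer standing in for a real subword tokenizer."""
    return text.split()


def build_training_pair(example: InstructionExample) -> Tuple[List[str], List[int]]:
    """Concatenate prompt and output tokens and mark which tokens carry loss.

    Mask value 1 means the token contributes to the supervised loss;
    prompt tokens get 0 (assumed convention, not mandated by the source).
    """
    prompt_tokens = tokenize(build_prompt(example))
    output_tokens = tokenize(example.output)
    tokens = prompt_tokens + output_tokens
    loss_mask = [0] * len(prompt_tokens) + [1] * len(output_tokens)
    return tokens, loss_mask


if __name__ == "__main__":
    entry = from_labeled_example("The film was a delight.", "positive")
    tokens, mask = build_training_pair(entry)
    for token, flag in zip(tokens, mask):
        print(flag, token)
```

In a real pipeline the toy tokenizer would be replaced by the model's own tokenizer, and the mask would be applied to the per-token cross-entropy loss.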

Datasets and Models

Instruction-tuned models show improvements across a wide range of tasks by training on datasets such as Natural Instructions, P3, and FLAN. These datasets differ in how they are constructed, ranging from manual curation to LLM-based generation. Representative models such as InstructGPT and BLOOMZ demonstrate the efficacy of IT, showing notable gains over their non-instruction-tuned counterparts in both automatic evaluations and human assessments.

Multi-Modality and Domain-Specific Applications

IT extends beyond text to multimodal data, including images, speech, and video. Datasets like MULTIINSTRUCT and PMC-VQA support the exploration of IT in these settings. Domain-specific applications of IT are similarly varied, ranging from medical diagnosis with Radiology-GPT to creative writing assistance with models like CoPoet and Writing-Alpaca-7B.

Analysis and Future Directions

Despite the demonstrated effectiveness of IT, challenges remain. Crafting high-quality instructions that comprehensively cover the desired behaviors is difficult. There is also concern that IT improves performance only on tasks that are heavily represented in the training dataset. Finally, whether instruction-tuned models genuinely learn the task or merely pick up surface patterns warrants further examination.

Efficient tuning techniques such as LoRA and Delta-tuning offer promising ways to reduce the computational burden, adapting LLMs while updating only a small fraction of their parameters. LoRA does so by restricting the weight update to a low-rank subspace, while Delta-tuning frames the tuned parameters as optimal controllers that steer the model's behavior.
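The low-rank idea behind LoRA can be illustrated with a small sketch. It assumes the commonly described formulation in which a frozen weight matrix W0 is adapted as W0 + (alpha / r) * B A, with B of shape d_out x r, A of shape r x d_in, and B initialized to zero. The function names and the pure-Python matrix code are hypothetical and chosen for readability, not efficiency.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product; adequate for a toy example."""
    inner = len(b)
    cols = len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def lora_effective_weight(
    w0: Matrix, b: Matrix, a: Matrix, alpha: float, rank: int
) -> Matrix:
    """Return W0 + (alpha / rank) * B @ A.

    W0 stays frozen during tuning; only B and A would be trained.
    """
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(w0, delta)
    ]


def lora_param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Trainable parameters for one matrix: (full fine-tuning, low-rank update)."""
    return d_out * d_in, rank * (d_out + d_in)


if __name__ == "__main__":
    w0 = [[1.0, 2.0], [3.0, 4.0]]
    b = [[0.0], [0.0]]   # B starts at zero, so the update is initially a no-op
    a = [[0.5, -0.5]]
    print(lora_effective_weight(w0, b, a, alpha=2.0, rank=1))

    full, low_rank = lora_param_counts(4096, 4096, 8)
    print(full, low_rank)
```

For a 4096 x 4096 matrix and rank 8, the low-rank update trains 65,536 parameters instead of 16,777,216, which is where the reduction in computational cost comes from.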

Conclusion

Instruction tuning is a transformative approach to enhancing the capabilities and controllability of LLMs, aligning them more closely with human instructions across a diverse range of tasks and modalities. The field is still evolving, from dataset construction to tuning techniques, with considerable room for innovation, particularly in achieving deeper task comprehension and improving the efficiency of fine-tuning. Future research could focus on addressing the limitations outlined above and on applying IT to domains and applications that remain unexplored.