A Critical Evaluation of the Knowledge Capacity of LLMs: An Analytical Perspective

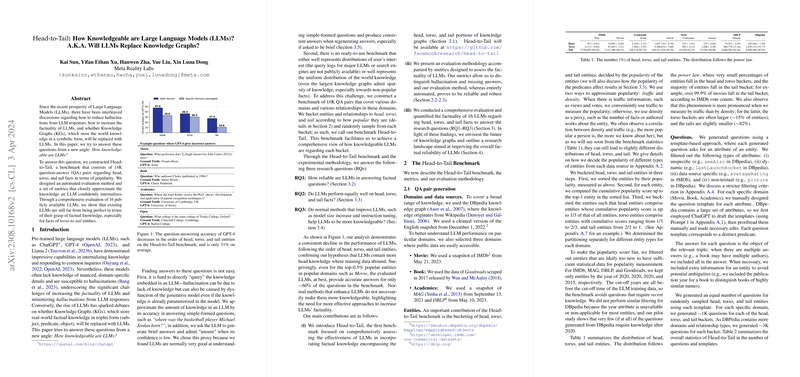

This paper, authored by Kai Sun, Yifan Ethan Xu, Hanwen Zha, Yue Liu, and Xin Luna Dong, investigates how much factual knowledge LLMs hold and takes up the contested question of whether LLMs could replace Knowledge Graphs (KGs) for storing and applying factual knowledge. It introduces a new benchmark for evaluating the factual knowledge of LLMs and offers a structured methodology for measuring how much factual information these models have actually absorbed.

Core Contributions and Methodology

The benchmark contains 18,000 question-answer pairs drawn from diverse domains. Entities are grouped into head, torso, and tail buckets according to their popularity, so LLM knowledge can be evaluated separately for popular, moderately popular, and rare entities. The paper evaluates 14 publicly available LLMs on this benchmark and reports a quantitative assessment of their factual knowledge.
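To make the head/torso/tail split concrete, here is a minimal Python sketch. It assumes every entity carries a numeric popularity score (for instance a hypothetical page-view or citation count) and cuts the popularity-sorted list where the cumulative share of total popularity crosses one third and two thirds. That thresholding rule, the function name `bucket_entities`, and the toy scores are assumptions for illustration, not necessarily the paper's exact procedure.

```python
from typing import Dict, List


def bucket_entities(popularity: Dict[str, float]) -> Dict[str, List[str]]:
    """Split entities into head/torso/tail by cumulative popularity.

    Assumption (illustrative only): entities are sorted by descending
    popularity; 'head' holds the most popular entities that together cover
    the first third of total popularity, 'torso' the next third, and
    'tail' the remainder.
    """
    total = sum(popularity.values())
    if total <= 0:
        raise ValueError("total popularity must be positive")
    buckets: Dict[str, List[str]] = {"head": [], "torso": [], "tail": []}
    cumulative = 0.0
    for entity, score in sorted(popularity.items(), key=lambda kv: kv[1], reverse=True):
        # Share of popularity mass already covered before this entity.
        share_before = cumulative / total
        if share_before < 1 / 3:
            buckets["head"].append(entity)
        elif share_before < 2 / 3:
            buckets["torso"].append(entity)
        else:
            buckets["tail"].append(entity)
        cumulative += score
    return buckets


if __name__ == "__main__":
    toy = {"A": 50, "B": 20, "C": 10, "D": 8, "E": 6, "F": 4, "G": 2}  # made-up scores
    print(bucket_entities(toy))
    # {'head': ['A'], 'torso': ['B'], 'tail': ['C', 'D', 'E', 'F', 'G']}
```

Even in this toy example a single entity forms the head while five form the tail, which is the long-tailed shape a popularity-based split is meant to expose.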

The evaluation uses three metrics: accuracy, hallucination rate, and missing rate. Together they distinguish correct answers, incorrect (hallucinated) answers, and cases where the model admits it does not know, giving a more nuanced view of LLM capabilities than accuracy alone.
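The three metrics can be sketched in a few lines of Python. This sketch assumes each model answer has already been judged and labeled `correct`, `incorrect`, or `missing` (the label names and `knowledge_metrics` are hypothetical); how the judging is done, whether by string matching, human review, or a model-based grader, is outside its scope.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical per-answer labels; judging correctness is assumed to have
# happened upstream and is not modeled here.
LABELS = ("correct", "incorrect", "missing")


def knowledge_metrics(labels: Iterable[str]) -> Dict[str, float]:
    """Return accuracy, hallucination rate and missing rate as fractions.

    - accuracy: share of questions answered correctly
    - hallucination rate: share answered with a wrong (non-abstaining) answer
    - missing rate: share where the model declined to answer (e.g. "unsure")
    Every answer gets exactly one label, so the three values sum to 1.
    """
    counts = Counter(labels)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no answers to score")
    return {
        "accuracy": counts["correct"] / total,
        "hallucination_rate": counts["incorrect"] / total,
        "missing_rate": counts["missing"] / total,
    }


if __name__ == "__main__":
    # Made-up judgements for five questions, for illustration only.
    demo = ["correct", "incorrect", "missing", "correct", "incorrect"]
    print(knowledge_metrics(demo))
    # {'accuracy': 0.4, 'hallucination_rate': 0.4, 'missing_rate': 0.2}
```

Reporting the missing rate separately from the hallucination rate distinguishes a model that abstains when unsure from one that guesses wrongly, even when both reach the same accuracy.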

Key Findings

The paper reports several key findings:

- LLM Knowledge Deficiency: Contrary to the expectation that LLMs retain knowledge comprehensively, the evaluated models often falter on nuanced, domain-specific facts. Accuracy was low across the board and lowest for torso and tail facts, casting doubt on whether LLMs can replace KGs as stores of factual knowledge.

- Model Performance Variations: Performance declines markedly from head entities to tail entities, consistent with the hypothesis that LLMs absorb commonly seen (head) knowledge better than rare (tail) knowledge. A plausible explanation lies in the training data: entity mentions follow a long-tailed, power-law-like distribution, so tail entities appear far less often.

- Impact of Instruction Tuning and Model Size: Increasing model size and applying common enhancement techniques such as instruction tuning did not significantly improve the factual reliability of LLMs. This suggests that closing knowledge gaps will require strategies beyond conventional scaling and tuning.

Implications for Future Research

The paper offers strategic directions for future research in knowledge representation and AI. It proposes Dual Neural Knowledge Graphs, a hybrid approach that combines explicit KGs with the implicit knowledge encoded in LLM parameters. Such a dual structure aims to balance human-readable symbolic knowledge with machine-optimizable neural representations, and could substantially change how knowledge retrieval systems are built.
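As a rough illustration of the dual idea, the toy Python class below answers a (subject, relation) query from explicit triples when it can and otherwise falls back to a neural component, tagging each answer with its source. This is a hypothetical sketch, not the paper's Dual Neural Knowledge Graph design: `HybridKnowledgeStore` and `demo_llm` are invented names, and `demo_llm` is a stub standing in for a real LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple

# (subject, relation) pair used as the lookup key for explicit facts.
Key = Tuple[str, str]


@dataclass
class HybridKnowledgeStore:
    """Toy hybrid store: explicit triples first, a neural model as fallback.

    Hypothetical illustration only; `neural_answer` stands in for an LLM call
    and returns None when the model abstains.
    """

    neural_answer: Callable[[str, str], Optional[str]]
    triples: Dict[Key, str] = field(default_factory=dict)

    def add_fact(self, subject: str, relation: str, obj: str) -> None:
        # Explicit, human-readable (symbolic) knowledge.
        self.triples[(subject, relation)] = obj

    def query(self, subject: str, relation: str) -> Tuple[Optional[str], str]:
        """Return (answer, source), where source is 'kg', 'neural' or 'missing'."""
        key = (subject, relation)
        if key in self.triples:
            return self.triples[key], "kg"
        guess = self.neural_answer(subject, relation)
        if guess is None:
            # Abstain rather than guess: the answer counts as missing.
            return None, "missing"
        return guess, "neural"


def demo_llm(subject: str, relation: str) -> Optional[str]:
    """Hypothetical stub for an LLM: knows one popular fact, abstains otherwise."""
    return "Paris" if (subject, relation) == ("France", "capital") else None


if __name__ == "__main__":
    store = HybridKnowledgeStore(neural_answer=demo_llm)
    store.add_fact("ExampleBook", "author", "A. Writer")  # made-up fact
    print(store.query("ExampleBook", "author"))  # ('A. Writer', 'kg')
    print(store.query("France", "capital"))      # ('Paris', 'neural')
    print(store.query("Nowhere", "capital"))     # (None, 'missing')
```

Tagging provenance keeps symbolic answers auditable while flagging which answers came from the neural side, and returning `missing` instead of a guess mirrors the distinction between missing and hallucinated answers in the paper's metrics.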

Conclusion

In summary, this paper provides a rigorous evaluation of how much factual knowledge LLMs retain, challenging the narrative that LLMs might soon replace KGs. It highlights substantial gaps in the factual knowledge embedded in these models, especially for long-tail information. The benchmark and its methodological framework are a valuable resource for future studies, and the work encourages researchers to explore architectures and learning paradigms that effectively bridge symbolic and neural knowledge.