Automatic Question-Answer Generation for Long-Tail Knowledge: Challenges and Implications

Introduction to Long-Tail Knowledge in QA Systems

The advent of large language models (LLMs) such as GPT-3 has significantly advanced natural language processing, particularly open-domain question answering (QA). Despite their broad knowledge, LLMs still struggle with rare or 'long-tail' knowledge: concepts and entities that appear infrequently in their training data. This limitation hinders the application of LLMs in domains where specialized knowledge is crucial. This paper, authored by researchers from Carnegie Mellon University, introduces an automatic approach for generating QA datasets that target these long-tail entities and discusses the challenges and implications of this endeavor.

Generating QA Datasets for Tail Entities

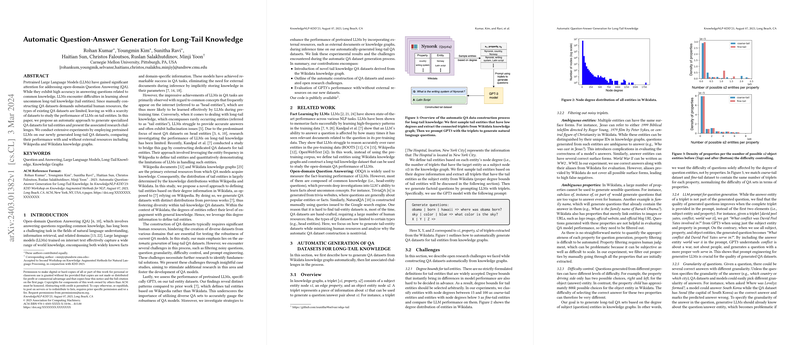

The paper proposes a new framework for automatically constructing specialized QA datasets using degree information from the Wikidata knowledge graph, in contrast to previous methods that relied heavily on Wikipedia. The authors argue that degree information (i.e., the number of connections an entity has in Wikidata) is a more refined metric for identifying tail entities, which are underrepresented in existing datasets.
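To make "degree" concrete, the sketch below counts how many (subject, property, object) triplets each entity participates in. It assumes an undirected count (an entity's degree rises whether it appears as subject or object); the paper may define degree differently, and the entity and property names are hypothetical placeholders rather than real Wikidata IDs.

```python
from collections import Counter

# HYPOTHETICAL toy triplets in Wikidata's (subject, property, object) shape.
# These are placeholders, not real Wikidata entities or properties.
triplets = [
    ("ent_a", "rel_1", "ent_b"),
    ("ent_a", "rel_2", "ent_c"),
    ("ent_b", "rel_1", "ent_c"),
    ("ent_d", "rel_3", "ent_a"),
]


def entity_degrees(triplets):
    """Count triplets per entity, counting both subject and object positions (an assumption)."""
    degree = Counter()
    for subj, _prop, obj in triplets:
        degree[subj] += 1
        degree[obj] += 1
    return degree


print(dict(entity_degrees(triplets)))  # {'ent_a': 3, 'ent_b': 2, 'ent_c': 2, 'ent_d': 1}
```

Entities with a low count, such as `ent_d` here, are the candidates a degree-based method would treat as tail entities.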

The process of automatic QA dataset generation encounters several challenges:

- Selection of Degree Bounds for Tail Entities: Defining what constitutes a tail entity is not straightforward. For its experiments, the paper groups entities into 'coarse-tail' and 'fine-tail' sets according to specific degree bounds.

- Filtering Noisy Triplets: Ensuring that questions generated from Wikidata triplets are clear and relevant requires filtering out ambiguous entities and properties, a task that is not easily automated.

- Difficulty Control and Prompt Engineering: Balancing question difficulty and crafting effective LLM prompts are critical for generating meaningful QA pairs.

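- Granularity of Questions and Answers: Correct answers can be expressed at different levels of detail, which complicates both generation and evaluation; for example, a question about where someone was born might be correctly answered with a city, a region, or a country.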
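The first three challenges can be illustrated with a small pipeline sketch: bucket entities by degree, drop triplets with blocklisted properties or non-tail subjects, and render a prompt that asks an LLM to turn each surviving triplet into a question. Everything specific here is an assumption: the degree bounds, the property blocklist, and the prompt wording are hypothetical placeholders, not the paper's actual values or templates.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# HYPOTHETICAL degree bounds: placeholders, not the paper's actual thresholds.
FINE_TAIL_BOUNDS = (1, 5)
COARSE_TAIL_BOUNDS = (6, 50)

# HYPOTHETICAL blocklist of property labels assumed too vague to yield a clear question.
AMBIGUOUS_PROPERTIES = {"instance of", "different from", "said to be the same as"}


@dataclass
class Triplet:
    subject: str  # subject key (a real pipeline would use a human-readable label)
    prop: str     # property label
    obj: str      # object label, i.e. the intended answer


def tail_bucket(degree: int) -> Optional[str]:
    """Map a degree to a tail category, or None if it falls outside both bands."""
    if FINE_TAIL_BOUNDS[0] <= degree <= FINE_TAIL_BOUNDS[1]:
        return "fine-tail"
    if COARSE_TAIL_BOUNDS[0] <= degree <= COARSE_TAIL_BOUNDS[1]:
        return "coarse-tail"
    return None


def filter_triplets(triplets: List[Triplet], degrees: Dict[str, int]) -> List[Triplet]:
    """Keep triplets whose property is not blocklisted and whose subject is a tail entity."""
    return [
        t for t in triplets
        if t.prop not in AMBIGUOUS_PROPERTIES
        and tail_bucket(degrees.get(t.subject, 0)) is not None
    ]


def question_prompt(t: Triplet) -> str:
    """HYPOTHETICAL prompt asking an LLM to turn one triplet into a question answered by its object."""
    return (
        "Write one clear, unambiguous question about the subject whose answer is the object.\n"
        f"Subject: {t.subject}\nRelation: {t.prop}\nObject: {t.obj}\nQuestion:"
    )


degrees = {"ent_a": 3, "ent_b": 20, "ent_c": 500}
candidates = [
    Triplet("ent_a", "place of birth", "Smallville"),  # kept: fine-tail subject
    Triplet("ent_b", "instance of", "human"),          # dropped: blocklisted property
    Triplet("ent_c", "occupation", "chemist"),         # dropped: degree too high
]
kept = filter_triplets(candidates, degrees)
for t in kept:
    print(tail_bucket(degrees[t.subject]))
    print(question_prompt(t))
```

Difficulty control would live mostly in the prompt wording and in which degree band is sampled; this sketch only shows where those knobs sit.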

Through extensive experimentation, the researchers generated new datasets whose distributions differ from those of existing QA datasets and which pose different challenges.

Evaluating LLMs with External Resources

Evaluating GPT-3 on the newly generated datasets revealed that it consistently struggles with questions about tail entities, underscoring its limited access to rare knowledge. The researchers then investigated whether augmenting GPT-3 with external resources could mitigate this shortcoming, specifically by retrieving relevant Wikipedia documents with Dense Passage Retrieval (DPR) and by leveraging additional knowledge from Wikidata.
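A closed-book versus retrieval-augmented comparison can be sketched as follows. The model and the retriever are abstracted as plain callables (`answer_fn`, `retrieve`), so no real API is assumed. Scoring uses SQuAD-style normalized exact match against a list of accepted aliases, which partly absorbs the granularity problem; this is a common convention, and the paper's actual metric may differ.

```python
import re
import string
from typing import Callable, List, Optional, Sequence, Tuple


def normalize(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop punctuation and articles, collapse spaces."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def is_correct(prediction: str, gold_aliases: Sequence[str]) -> bool:
    """A prediction counts as correct if it matches any accepted alias after normalization."""
    pred = normalize(prediction)
    return any(pred == normalize(alias) for alias in gold_aliases)


def build_prompt(question: str, passages: Sequence[str]) -> str:
    """Closed-book prompt if no passages are given; otherwise prepend the retrieved context."""
    context = "\n\n".join(f"Passage {i + 1}: {p}" for i, p in enumerate(passages))
    prefix = f"{context}\n\n" if context else ""
    return f"{prefix}Question: {question}\nAnswer:"


def accuracy(
    dataset: List[Tuple[str, List[str]]],
    answer_fn: Callable[[str], str],
    retrieve: Optional[Callable[[str], List[str]]] = None,
    k: int = 3,
) -> float:
    """Fraction of questions answered correctly, optionally using the top-k retrieved passages."""
    if not dataset:
        return 0.0
    correct = 0
    for question, aliases in dataset:
        passages = retrieve(question)[:k] if retrieve else []
        prediction = answer_fn(build_prompt(question, passages))
        correct += is_correct(prediction, aliases)
    return correct / len(dataset)


# Stub model that always answers "the Paris" (stands in for a real LLM call).
toy_data = [("Q1?", ["Paris", "City of Paris"]), ("Q2?", ["London"])]
print(accuracy(toy_data, lambda prompt: "the Paris"))  # 0.5
```

Running the same `accuracy` call with and without a `retrieve` function isolates the effect of the external documents on the same questions.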

Surprisingly, augmenting GPT-3 with DPR alone decreased performance because the retrieved documents were often irrelevant, highlighting how hard it is to retrieve long-tail knowledge even with state-of-the-art retrieval systems. However, combining DPR with ranking adjustments based on the Wikidata knowledge graph showed promise, improving both DPR's retrieval accuracy and GPT-3's QA performance.
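The exact form of the Wikidata-based ranking adjustment is not spelled out here, so the following is only one plausible scheme, offered as an illustration: each DPR passage receives a bonus proportional to how many labels of the question entity's Wikidata neighbors it mentions, and passages are re-sorted by the combined score. The neighbor labels, the `weight` parameter, and the substring matching are all hypothetical choices.

```python
from typing import List, Sequence, Set, Tuple


def kg_rerank(
    passages: Sequence[Tuple[str, float]],
    kg_neighbor_labels: Set[str],
    weight: float = 1.0,
) -> List[Tuple[str, float]]:
    """Re-score (text, dpr_score) pairs with a bonus for mentioning KG neighbors.

    kg_neighbor_labels: HYPOTHETICAL labels of entities linked to the question entity in Wikidata.
    weight: HYPOTHETICAL interpolation weight for the overlap bonus.
    """
    rescored = []
    for text, dpr_score in passages:
        lowered = text.lower()
        # Naive case-insensitive substring match; a real system would use entity linking.
        overlap = sum(1 for label in kg_neighbor_labels if label.lower() in lowered)
        rescored.append((text, dpr_score + weight * overlap))
    # Highest combined score first.
    return sorted(rescored, key=lambda pair: pair[1], reverse=True)


passages = [
    ("A passage about an unrelated person with a similar name.", 0.9),
    ("Alice Example was born in Smallville and worked at Acme Labs.", 0.7),
]
neighbors = {"Smallville", "Acme Labs"}
for text, score in kg_rerank(passages, neighbors, weight=0.5):
    print(f"{score:.2f}  {text}")
```

In this toy case the passage that DPR ranked second moves to the top (1.70 versus 0.90) because it mentions both neighbors, which is the kind of correction a knowledge-graph signal can supply when dense retrieval surfaces a similar-looking but irrelevant document.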

Implications and Future Directions

This paper's findings have substantial implications for the development and evaluation of QA models, particularly emphasizing the urgent need for better handling of long-tail knowledge. The challenges identified in automatically generating QA datasets pinpoint areas requiring further investigation and innovation. Moreover, the exploration of external resources to improve LLM performance opens avenues for research into more sophisticated integration methods that can leverage disparate knowledge sources effectively.

In conclusion, addressing the long-tail knowledge problem in QA systems is crucial for the advancement of LLMs and their application across diverse domains. This paper marks a significant step towards understanding and overcoming these challenges, with the potential to inspire a wide range of future research in AI and natural language processing.