Understanding the Intersection of LLMs and Knowledge Graphs

The Relationship Between LLMs and KGs

In artificial intelligence, large language models (LLMs) have become increasingly sophisticated. LLMs such as ChatGPT have made strides in understanding and generating human-like text. Because they are trained on vast amounts of data, they can produce content that is often coherent and contextually relevant. However, when it comes to factual accuracy and knowledge-based content generation, LLMs have clear limitations. They generally do well with information that appeared in their training data but struggle to recall, apply, or update knowledge that the training data did not cover.

This is where knowledge graphs (KGs) come into play. KGs are structured databases of real-world facts and relationships that represent knowledge explicitly, typically as (subject, relation, object) triples. They store information in a way that is not only accessible but also easy to update and maintain. As a result, KGs have inherent advantages for tasks requiring factual correctness and up-to-date information.
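
To make the idea of an explicit, updatable representation concrete, here is a minimal sketch of a knowledge graph as an in-memory store of (subject, relation, object) triples. The `TinyKG` class and its method names are hypothetical and chosen only for illustration; production KGs use dedicated graph databases and query languages.

```python
from collections import defaultdict


class TinyKG:
    """A toy in-memory knowledge graph of (subject, relation, object) triples."""

    def __init__(self):
        # Index objects by (subject, relation) so lookups are direct.
        self._index = defaultdict(set)

    def add(self, subject, relation, obj):
        """Add one fact; a (subject, relation) pair may have several objects."""
        self._index[(subject, relation)].add(obj)

    def replace(self, subject, relation, obj):
        """Overwrite a fact in place; no model retraining is involved."""
        self._index[(subject, relation)] = {obj}

    def query(self, subject, relation):
        """Return all known objects for the pair, sorted for stable output."""
        return sorted(self._index.get((subject, relation), set()))


kg = TinyKG()
kg.add("Marie Curie", "born_in", "Warsaw")
kg.add("Marie Curie", "field", "physics")
kg.add("Marie Curie", "field", "chemistry")

print(kg.query("Marie Curie", "field"))  # ['chemistry', 'physics']
```

Correcting or refreshing a fact is a single call to `replace`, whereas changing what an LLM has absorbed from its training data generally requires further training.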

Enhancing LLMs with Knowledge Graphs

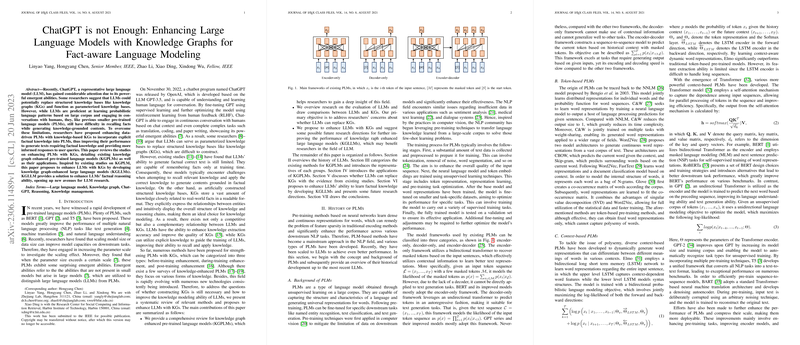

Enhancing LLMs with knowledge from KGs has been proposed to overcome the limitations of LLMs in factual content generation; the resulting models are termed here knowledge graph-enhanced pre-trained LLMs (KGPLMs). Integrating KGs into LLMs is expected to improve the models’ ability to reason with facts and thus produce more informed and accurate responses.

Approaches to Integrating KGs into LLMs

KGPLMs integrate knowledge into LLMs at one of three stages relative to training:

- Before-training Enhancement: This approach introduces KG data before the model training process begins, essentially preprocessing the text to include KG information or adjusting the input dataset to be more knowledge-rich.

- During-training Enhancement: Here, the model architecture itself is altered or special knowledge-processing components are introduced during training to enable the model to learn directly from both the textual data and the KGs concurrently.

- Post-training Enhancement: In this approach, an already trained LLM is fine-tuned on domain-specific knowledge and tasks, which improves its performance in applications that need detailed expert knowledge.
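
As an illustration of the before-training approach only, the sketch below preprocesses a sentence by appending verbalized KG facts about the entities it mentions, so the training input becomes more knowledge-rich. The `inject_knowledge` function, the `[KNOWLEDGE]` marker, and the naive substring matching of entities are all assumptions made for this example; real systems use proper entity linking and more careful formats.

```python
def inject_knowledge(sentence, triples, max_facts=2):
    """Append verbalized KG facts for entities mentioned in the sentence.

    Entity matching is a plain substring check (an illustrative shortcut).
    """
    facts = []
    for subject, relation, obj in triples:
        if subject in sentence:
            # Turn ("Paris", "capital_of", "France") into "Paris capital of France."
            facts.append(f"{subject} {relation.replace('_', ' ')} {obj}.")
    if not facts:
        return sentence  # Nothing to add; leave the text unchanged.
    return sentence + " [KNOWLEDGE] " + " ".join(facts[:max_facts])


# Hypothetical toy triples used only for this example.
TRIPLES = [
    ("Paris", "capital_of", "France"),
    ("Paris", "located_on", "the Seine"),
    ("Berlin", "capital_of", "Germany"),
]

augmented = inject_knowledge("Paris hosted the 2024 Summer Olympics.", TRIPLES)
print(augmented)
```

The `max_facts` cap reflects a common design concern: injecting too many facts can drown out the original text, so only a few are kept per sentence.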

Applications of Knowledge-Infused LLMs

KGPLMs have been applied across a range of tasks:

- Named Entity Recognition: KGPLMs can more effectively identify specific entities within the text, improving the extraction of usable data from unstructured sources.

- Relation Extraction: They can better understand and categorize relationships between entities, which enhances comprehension and response accuracy.

- Sentiment Analysis: Integrating sentiment-specific knowledge into the models can improve their ability to interpret emotions and opinions.

- Knowledge Graph Completion: KGPLMs can help to fill in missing information in KGs, thereby expanding and refreshing the stored knowledge.

- Question Answering: Utilizing KGs allows for structured reasoning and more precise answers to complex questions.

- Natural Language Generation: Integrating KGs with LLMs can lead to more relevant, factual, and contextually grounded content.
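
The structured reasoning mentioned under question answering can be sketched as following a chain of relations through the graph. The example below is a simplified illustration: the `follow_path` helper, the dictionary-based graph, and the assumption that a question has already been parsed into a list of relations are all hypothetical.

```python
def follow_path(kg, start, relations):
    """Follow a chain of relations from a start entity.

    Returns the final entity, or None if any hop is missing from the graph.
    """
    current = start
    for relation in relations:
        current = kg.get((current, relation))
        if current is None:
            return None  # The graph cannot support this step of reasoning.
    return current


# Toy graph: each (entity, relation) pair maps to a single entity.
KG = {
    ("Inception", "directed_by"): "Christopher Nolan",
    ("Christopher Nolan", "born_in"): "London",
}

# "Where was the director of Inception born?" as a two-hop path.
answer = follow_path(KG, "Inception", ["directed_by", "born_in"])
print(answer)  # London
```

Returning `None` for a missing hop lets a system decline to answer instead of guessing, which is one way a KG can make answers more precise.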

The Future: LLMs Enhanced with Knowledge Graphs

While LLMs have shown potential as knowledge bases, their ability to serve as reliable sources of information has been questioned. They often fall back on probabilistic language patterns acquired during training rather than solid factual recall. By incorporating KGs, however, we can begin to build more robust, factual, and reliable LLMs, which are referred to as knowledge graph-enhanced LLMs (KGLLMs).

Developing effective KGLLMs requires careful attention to several issues, such as selecting valuable knowledge to incorporate and avoiding the loss of previously learned information (often called catastrophic forgetting). It is also critical to explore ways to improve model interpretability and to assess model performance on domain-specific tasks.

Conclusion: The Symbiosis of LLMs and KGs

As LLMs evolve, it becomes increasingly clear that their full potential can only be unlocked through the integration of structured knowledge from KGs. The marriage of LLMs and KGs does not suggest the obsolescence of either; rather, it heralds a new phase of symbiotic development. KGs provide the foundation of fact-based reasoning that LLMs need to become truly intelligent systems, while LLMs offer flexible, self-updating mechanisms that keep the knowledge within KGs fluid and accessible. Together, they will redefine the possibilities for artificial intelligence in processing and generating human language.