Introduction

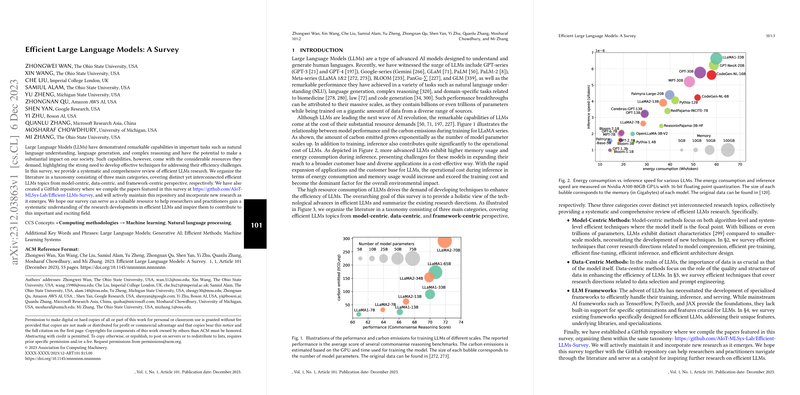

LLMs have significantly advanced natural language processing, but their success across many tasks comes with substantial computational and resource demands. As LLMs continue to grow, their efficiency needs to be examined from both an algorithmic and a systems perspective. The paper summarized here surveys methods for improving LLM efficiency. Such improvements are essential for making LLMs cheaper to run and more broadly usable.

Model-Centric Methods

Model Compression Techniques

Compressing LLMs is key to reducing their resource demands. The survey divides model compression into four categories: quantization, parameter pruning, low-rank approximation, and knowledge distillation.

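Quantization reduces the numerical precision of model weights (and, in some methods, activations). It can be applied after training (post-training quantization, PTQ) or during training (quantization-aware training, QAT). Post-training methods such as LLM.int8(), GPTQ, and AWQ push precision down to 8 bits or fewer while largely preserving model performance.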
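The sketch below illustrates the basic idea behind weight quantization. It is not the specific algorithm of LLM.int8(), GPTQ, or AWQ. It applies symmetric per-tensor "absmax" quantization to int8: the weights are divided by a scale chosen so that the largest magnitude maps to 127, then rounded. They are later multiplied back by the same scale. The function names and sample weights are hypothetical.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric absmax quantization of a weight vector to signed 8-bit integers."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # the largest |w| maps to 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes and the shared scale."""
    return [v * scale for v in q]

weights = [0.12, -0.5, 0.33, 0.01, -0.27]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q)  # → [30, -127, 84, 3, -69]
# Each restored weight is within scale / 2 of the original (the rounding error).
print([round(w - r, 4) for w, r in zip(weights, restored)])
```

Practical methods refine this considerably. LLM.int8(), for example, keeps outlier features in higher precision. GPTQ and AWQ use calibration data to limit accuracy loss at lower bit widths.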

Parameter pruning removes weights that contribute little to the model's output. Structured pruning removes entire architectural units, such as attention heads, neurons, or layers. Unstructured pruning removes individual weights, leaving sparse weight matrices.
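The following sketch contrasts the two kinds of pruning on a small matrix. Magnitude is used as the importance criterion. This is a common baseline and is assumed here for illustration, not taken from any specific method in the survey. The helper names and the example matrix are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def magnitude_prune(weights: Matrix, sparsity: float) -> Matrix:
    """Unstructured pruning: zero the `sparsity` fraction of smallest-magnitude weights."""
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(len(flat) * sparsity)  # number of weights to remove
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]
    # Ties at the threshold can remove slightly more than k weights; fine for a sketch.
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]

def prune_rows(weights: Matrix, keep: int) -> Matrix:
    """Structured pruning: keep the `keep` rows (output units) with the largest squared L2 norm."""
    norms = [sum(w * w for w in row) for row in weights]
    top = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)[:keep]
    return [weights[i][:] for i in sorted(top)]  # preserve the original row order

W = [[0.9, -0.05, 0.3],
     [0.01, -0.7, 0.2],
     [0.02, 0.03, -0.04]]
sparse = magnitude_prune(W, sparsity=0.5)  # 4 of 9 weights zeroed, shape unchanged
structured = prune_rows(W, keep=2)         # third row removed, matrix shrinks to 2 x 3
print(sparse)
print(structured)
```

Structured pruning yields a smaller dense matrix that runs faster on standard hardware. Unstructured sparsity usually needs sparse kernels or hardware support before it translates into speedups.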

Low-rank approximation replaces a weight matrix with the product of smaller, low-rank matrices. This reduces both the parameter count and the computational cost. TensorGPT and ZeroQuant-V2 are examples of this category.
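A worked parameter count makes the savings concrete. Assume a weight matrix $W \in \mathbb{R}^{m \times n}$ is approximated by factors of rank $r$. The dimensions below are illustrative, not taken from the survey:

$$
W \approx U V, \qquad U \in \mathbb{R}^{m \times r},\quad V \in \mathbb{R}^{r \times n},\quad r \ll \min(m, n)
$$

$$
\text{parameters: } mn \;\to\; r(m + n); \qquad m = n = 4096,\; r = 64:\quad 16{,}777{,}216 \;\to\; 64 \times 8192 = 524{,}288 \quad (32\times \text{ fewer})
$$

Computing $Wx$ as $U(Vx)$ likewise takes $r(m+n)$ multiply-adds instead of $mn$. A truncated singular value decomposition gives the best rank-$r$ approximation in the Frobenius norm (the Eckart-Young theorem). Individual methods differ in how they choose and refine the factors.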

Knowledge distillation trains a compact student model to mimic the outputs of a larger teacher LLM. Baby Llama and GKD are two examples of the range of methods in this area.
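The sketch below shows the classic soft-target distillation loss. It compares temperature-softened teacher and student distributions with a KL divergence, scaled by $T^2$. This is the generic textbook formulation, not the specific objective of Baby Llama or GKD. GKD, for instance, also trains on sequences generated by the student. The logits are hypothetical.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) between softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return temperature ** 2 * kl

# Hypothetical next-token logits over a 3-token vocabulary.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(teacher, teacher))  # → 0.0 (student identical to teacher)
print(distillation_loss(close_student, teacher) < distillation_loss(far_student, teacher))  # → True
```

In training, this term is usually combined with the ordinary cross-entropy loss on ground-truth labels. The student's parameters are then updated to minimize the sum.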

Efficient Pre-Training and Fine-Tuning

Efficient pre-training methods cover several areas. Mixed precision acceleration performs much of the computation in lower precision, cutting cost with minimal loss of accuracy. Other approaches include model scaling strategies, initialization techniques, and training optimizers such as Adam or Sophia that speed up pre-training.
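Mixed-precision training typically runs most computation in 16-bit formats while keeping a full-precision master copy of the weights. It also scales the loss so that small gradients do not underflow. Python's `struct` module can round values to IEEE half precision (format `'e'`). This is enough for a toy simulation of that underflow problem. The function names are hypothetical, and this is a simplified sketch, not how any training framework implements it.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a float to the nearest IEEE 754 half-precision (binary16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def fp16_grad(grad: float, loss_scale: float = 1.0) -> float:
    """Simulate a gradient that passes through fp16 storage and is then unscaled.

    The gradient is multiplied by `loss_scale` (as scaling the loss would do),
    rounded to fp16, and divided by `loss_scale` again in full precision before
    it would be applied to the full-precision master weights.
    """
    scaled_half = to_fp16(grad * loss_scale)
    return scaled_half / loss_scale

tiny_grad = 1e-8  # below fp16's smallest positive subnormal (about 6e-8)
print(fp16_grad(tiny_grad))                     # → 0.0 (the update is lost)
print(fp16_grad(tiny_grad, loss_scale=1024.0))  # close to 1e-8: the update survives
```

If the scale is too large, the scaled value overflows fp16 instead. In this sketch, `struct.pack` then raises an `OverflowError`. Dynamic loss scaling schemes respond by lowering the scale when overflow is detected.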

Efficient fine-tuning adapts LLMs to specific tasks while conserving resources. Parameter-efficient methods such as LoRA and prefix-tuning keep the pretrained weights frozen and train only a small number of added parameters. Memory-efficient approaches such as Selective Fine-Tuning substantially reduce GPU memory usage.
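The sketch below shows the LoRA forward pass in plain Python. It follows the LoRA formulation: a frozen weight $W$, trainable factors $A$ and $B$ of rank $r$, a scaling of $\alpha / r$, and $B$ initialized to zero. The class and helper names, the default `alpha`, and the example sizes are assumptions, and the training loop is omitted.

```python
import random
from typing import List

Matrix = List[List[float]]

def matvec(M: Matrix, x: List[float]) -> List[float]:
    """Dense matrix-vector product."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

class LoRALinear:
    """Linear layer computing y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is the frozen pretrained weight; only A (r x d_in) and
    B (d_out x r) would be trained.
    """

    def __init__(self, W: Matrix, r: int, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = len(W), len(W[0])
        rng = random.Random(seed)
        self.W = W
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]  # zero init: the layer starts equal to W
        self.scaling = alpha / r

    def forward(self, x: List[float]) -> List[float]:
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def merged_weight(self) -> Matrix:
        """W + (alpha / r) * B A, so the adapter adds no cost at inference time."""
        r = len(self.A)
        return [[self.W[i][j] + self.scaling * sum(self.B[i][k] * self.A[k][j] for k in range(r))
                 for j in range(len(self.W[0]))]
                for i in range(len(self.W))]

def lora_param_count(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix."""
    return r * (d_in + d_out)

layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1)
print(layer.forward([1.0, 1.0]))  # → [3.0, 7.0] (B is zero, so output equals W x)
print(lora_param_count(4096, 4096, 8), "vs", 4096 * 4096)  # → 65536 vs 16777216
```

For a 4096 x 4096 matrix with rank 8, the adapter trains about 0.4% as many parameters as full fine-tuning of that matrix. After training, `merged_weight` folds the update back into $W$.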

Efficient Inference Strategies

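These strategies include speculative decoding, KV-cache optimization, and sharing-based attention acceleration. Speculative decoding uses a smaller draft model to propose several tokens ahead. The large model then verifies them in parallel, which reduces inference latency. During autoregressive decoding, caching the key-value (KV) pairs of earlier tokens avoids recomputing them at every step. KV-cache optimization methods make this cache cheaper to store and use.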
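The sketch below uses the simpler greedy variant of speculative decoding. A proposed token is kept only if it matches the target model's own greedy choice, so the output is identical to plain greedy decoding with the target. The original speculative sampling algorithms instead use a probabilistic acceptance rule, so that sampled outputs follow the target model's distribution. Both "models" are hypothetical deterministic functions.

```python
from typing import Callable, List

# A "model" here is any function mapping a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]

def greedy_decode(model: NextToken, prompt: List[int], num_new: int) -> List[int]:
    """Baseline: one (expensive) target call per generated token."""
    tokens = list(prompt)
    for _ in range(num_new):
        tokens.append(model(tokens))
    return tokens

def speculative_decode_greedy(target: NextToken, draft: NextToken,
                              prompt: List[int], num_new: int, k: int = 4) -> List[int]:
    tokens = list(prompt)
    goal = len(prompt) + num_new
    while len(tokens) < goal:
        # 1) The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2) The target checks the proposals. A real system scores all k positions
        #    in ONE batched forward pass; this loop only emulates that check.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(tokens + accepted)
            accepted.append(expected)  # always keep the target's own choice
            if tok != expected:        # first mismatch: discard the remaining proposals
                break
        else:
            # Every proposal matched, so the target's next prediction is a free bonus token.
            accepted.append(target(tokens + accepted))
        tokens.extend(accepted)
    return tokens[:goal]

def target_model(seq: List[int]) -> int:
    """Hypothetical 'large' model: a fixed deterministic next-token rule."""
    return (3 * seq[-1] + 1) % 7

def draft_model(seq: List[int]) -> int:
    """Hypothetical 'small' model: agrees with the target except at every 4th position."""
    return 0 if len(seq) % 4 == 0 else target_model(seq)

out = speculative_decode_greedy(target_model, draft_model, [2], num_new=10, k=3)
print(out == greedy_decode(target_model, [2], 10))  # → True
```

The speedup comes from step 2. When the draft agrees often, one target pass can yield several tokens instead of one.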

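Sharing-based attention acceleration methods share key and value projections across attention heads. Multi-query attention (MQA) uses a single key/value head for all query heads. Grouped-query attention (GQA) shares one key/value head within each group of query heads. Both reduce the size of the KV cache and the memory traffic during decoding.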
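The effect on memory can be estimated with simple arithmetic. The sketch maps each query head to its shared key/value head and computes the KV-cache size for standard multi-head attention (MHA), GQA, and MQA. The model configuration is hypothetical and does not describe a specific model from the survey.

```python
def kv_head_for_query(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    """Index of the shared key/value head used by a given query head."""
    assert n_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group_size = n_heads // n_kv_heads
    return q_head // group_size

def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int, seq_len: int,
                   batch: int = 1, bytes_per_elem: int = 2) -> int:
    """Memory for cached keys AND values (the factor 2) across all layers."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem

# Hypothetical configuration: 32 layers, 32 query heads of dim 128, 4096-token context, fp16.
for name, kv_heads in [("MHA", 32), ("GQA", 8), ("MQA", 1)]:
    mib = kv_cache_bytes(32, kv_heads, 128, 4096) / 2**20
    print(f"{name}: {kv_heads} KV heads -> {mib:.0f} MiB")
# → MHA: 32 KV heads -> 2048 MiB
# → GQA: 8 KV heads -> 512 MiB
# → MQA: 1 KV heads -> 64 MiB
```

MHA is the special case where the number of KV heads equals the number of query heads. GQA interpolates between MHA and MQA, trading cache size against the capacity of separate key/value heads.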

Efficient Architecture Design for LLMs

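Current strategies for long-context LLMs include extrapolation and interpolation, which aim to let models handle sequences longer than those seen during training. Extrapolation methods such as ALiBi (Attention with Linear Biases) replace positional embeddings with a penalty on attention scores that grows linearly with the distance between query and key. This lets models generalize to longer inputs. Interpolation methods instead rescale position indices so that longer inputs fall within the position range seen during pre-training. Other approaches change the model's structure. Recurrent structures process text in segments and carry information across them to retain long-term context. Window and stream structures split the input into manageable windows or chunks.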
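The sketch below computes ALiBi's head-specific slopes and causal bias matrix. It uses the geometric slope sequence $2^{-8h/n}$ that ALiBi defines when the number of heads is a power of two. It also shows linear position interpolation, which rescales position indices (for example, those fed to rotary embeddings) into the trained range. The function names are hypothetical.

```python
import math
from typing import List

def alibi_slopes(n_heads: int) -> List[float]:
    """Slopes 2^(-8h/n) for h = 1..n (ALiBi's sequence when n_heads is a power of two)."""
    return [2 ** (-8 * h / n_heads) for h in range(1, n_heads + 1)]

def alibi_bias(seq_len: int, slope: float) -> List[List[float]]:
    """Causal bias added to attention scores: -slope * (i - j) for key j <= query i."""
    return [[-slope * (i - j) if j <= i else -math.inf for j in range(seq_len)]
            for i in range(seq_len)]

def interpolate_positions(seq_len: int, train_len: int) -> List[float]:
    """Linear position interpolation: squeeze positions of a longer input into [0, train_len)."""
    scale = min(1.0, train_len / seq_len)
    return [p * scale for p in range(seq_len)]

print(alibi_slopes(8))             # → [0.5, 0.25, 0.125, 0.0625, 0.03125, 0.015625, 0.0078125, 0.00390625]
print(alibi_bias(3, 0.5))          # distant keys receive larger penalties
print(interpolate_positions(8, 4)) # → [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
```

ALiBi's bias depends only on relative distance, so it can be computed for any sequence length without new parameters. Interpolation instead keeps every position inside the range the model was trained on.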

Data-Centric Methods

Data Selection

Careful data selection makes LLM pre-training and fine-tuning more effective and efficient. Strategies include unsupervised and supervised methods that select data to improve performance. Other techniques target instruction quality and the ordering of training examples.
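The sketch below combines the two ideas in a minimal way. It filters examples by a quality score, then orders the survivors from easy to hard. Both scoring functions are hypothetical proxies chosen for illustration: a unique-word ratio for quality and word count for difficulty. Real selection methods use much richer signals, such as model-based scores.

```python
from typing import Callable, List

def select_and_order(examples: List[str],
                     quality: Callable[[str], float],
                     difficulty: Callable[[str], float],
                     top_k: int) -> List[str]:
    """Keep the top_k examples by quality, then order them from easy to hard."""
    kept = sorted(examples, key=quality, reverse=True)[:top_k]
    return sorted(kept, key=difficulty)

def unique_word_ratio(text: str) -> float:
    """Hypothetical quality proxy: penalizes repetitive text."""
    words = text.split()
    return len(set(words)) / len(words) if words else 0.0

def word_count(text: str) -> float:
    """Hypothetical difficulty proxy: longer examples count as harder."""
    return float(len(text.split()))

corpus = [
    "the the the the",
    "Water boils at 100 degrees Celsius at sea level.",
    "Paris is the capital of France.",
    "ok ok",
    "Cats sleep.",
]
selected = select_and_order(corpus, unique_word_ratio, word_count, top_k=3)
print(selected)
# → ['Cats sleep.', 'Paris is the capital of France.', 'Water boils at 100 degrees Celsius at sea level.']
```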

Prompt Engineering

Prompt engineering is an emerging avenue for efficiency. In few-shot prompting, self-instruction and chain-of-thought (CoT) techniques help the model reason more effectively from only a few demonstrations. Prompt compression condenses the information in a prompt into fewer tokens. Prompt generation automatically creates effective prompts to steer model output.
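One family of prompt compression methods drops the tokens that carry the least information and keeps the rest in order. The sketch below follows that idea. A hypothetical stopword-based score stands in for the per-token information estimate that such methods typically obtain from a small language model. The function names and the stopword list are assumptions.

```python
from typing import Callable, List

def compress_prompt(tokens: List[str], information: Callable[[str], float],
                    keep_ratio: float = 0.5) -> List[str]:
    """Keep the most informative tokens, preserving their original order."""
    k = max(1, round(len(tokens) * keep_ratio))
    ranked = sorted(range(len(tokens)), key=lambda i: information(tokens[i]), reverse=True)
    keep = sorted(ranked[:k])  # restore original positions
    return [tokens[i] for i in keep]

STOPWORDS = {"a", "an", "the", "of", "to", "is", "please"}

def toy_information(token: str) -> float:
    """Hypothetical stand-in for a language model's per-token information score."""
    return 0.1 if token.lower() in STOPWORDS else 1.0

prompt = "please summarize the main findings of the report".split()
compressed = compress_prompt(prompt, toy_information)
print(compressed)  # → ['summarize', 'main', 'findings', 'report']
```

Halving the prompt halves the input tokens the model must process. Whether the meaning survives depends entirely on how good the information estimate is.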

LLM Frameworks

Frameworks that support LLMs must account for their scale and complexity. DeepSpeed and Megatron stand out for integrating optimizations for both training and inference. Alpa and ColossalAI focus on auto-tuning and parallel execution. Frameworks such as vLLM and Parallelformers are inference-focused.

Concluding Remarks

The paper covers a broad range of methods and frameworks for improving LLM efficiency. It organizes current research into a coherent taxonomy, which provides a foundation for further work in this fast-growing area. In turn, this supports making LLMs more accessible and more widely adopted across applications.