EmbodiedBench: A Benchmark for Evaluating Multi-modal LLMs as Vision-Driven Embodied Agents

The paper introduces EmbodiedBench, a comprehensive benchmark for assessing Multi-modal LLMs (MLLMs) as vision-driven embodied agents. While LLMs have been studied extensively on language-centric tasks, MLLMs remain under-explored, especially on tasks that require understanding both language and vision. The paper targets that gap.

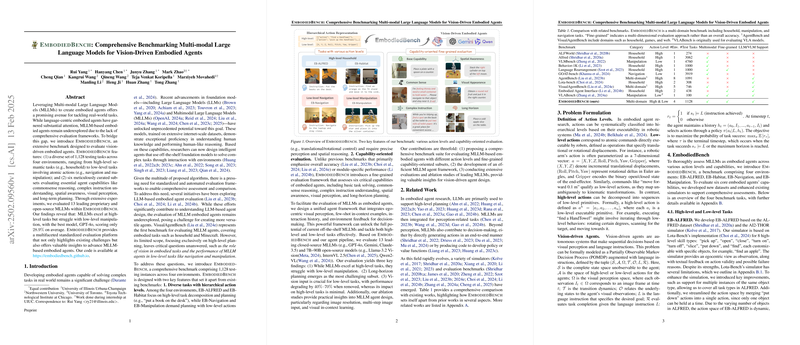

EmbodiedBench evaluates MLLMs across 1,128 diverse tasks distributed among four distinct environments: EB-ALFRED, EB-Habitat, EB-Navigation, and EB-Manipulation. These environments test the agents across a spectrum of scenarios requiring both high-level semantic understanding and low-level action execution. The paper proposes a structured evaluation framework to assess distinct capabilities in embodied agents, including commonsense reasoning, spatial awareness, and long-term planning.

The authors conduct extensive experiments on 13 leading proprietary and open-source MLLMs. Their results indicate that while current MLLMs perform well on high-level semantic tasks, they struggle with low-level manipulation. Notably, GPT-4o achieves an average score of only 28.9% on low-level tasks, which leaves substantial room for improvement. Vision input also proves critical for low-level tasks: performance drops by 40% to 70% when it is removed from tasks involving precise perception and spatial reasoning.
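A figure such as "drops by 40% to 70%" can be read either as percentage points or as a fraction of the original score, and this summary does not say which reading the paper intends. The sketch below computes both so the difference is explicit. The function name and the example scores are hypothetical and are not taken from the paper.

```python
def ablation_drop(score_with_vision: float, score_without_vision: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative drop in percent).

    Both inputs are success rates expressed in percent (0 to 100).
    """
    if score_with_vision <= 0:
        raise ValueError("score_with_vision must be positive to compute a relative drop")
    absolute = score_with_vision - score_without_vision
    relative = 100.0 * absolute / score_with_vision
    return absolute, relative


# Made-up scores for illustration only (not results from the paper).
absolute, relative = ablation_drop(score_with_vision=50.0, score_without_vision=20.0)
print(f"absolute drop: {absolute:.1f} points, relative drop: {relative:.1f}%")
```

With these illustrative inputs the same ablation is a 30-point drop but a 60% relative drop, so it is worth checking which convention a reported number uses before comparing models.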

The implications of these findings are twofold. Practically, they emphasize the need for more refined MLLM architectures that can handle both high-level reasoning and low-level control. Theoretically, they invite further research into improving MLLMs' spatial reasoning and manipulation capabilities. Future work could integrate spatial information more tightly with LLMs to improve performance on tasks that require complex visual and spatial understanding.

A key contribution is the introduction of capability-oriented evaluation, which allows fine-grained assessment of multi-modal agents. This is important for developing models tailored to specific embodied AI applications. The benchmark is expected to inspire future research on making MLLMs more adaptable in real-world environments, where interactions involve both linguistic and visual inputs.
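One way to picture capability-oriented evaluation is as a grouping step: each episode is tagged with its environment and the capability it probes, and success rates are reported per group rather than as a single overall number. The following is a minimal sketch under that assumption. The `EpisodeResult` type, the function name, and the sample records are hypothetical and do not reflect the benchmark's actual code or data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class EpisodeResult:
    environment: str  # e.g. "EB-ALFRED"
    capability: str   # e.g. "spatial awareness"
    success: bool


def success_rate_by_capability(results: list[EpisodeResult]) -> dict[tuple[str, str], float]:
    """Group episodes by (environment, capability) and return each group's success rate."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    wins: dict[tuple[str, str], int] = defaultdict(int)
    for r in results:
        key = (r.environment, r.capability)
        totals[key] += 1
        wins[key] += int(r.success)
    return {key: wins[key] / totals[key] for key in totals}


# Made-up episode outcomes for illustration only.
results = [
    EpisodeResult("EB-ALFRED", "commonsense reasoning", True),
    EpisodeResult("EB-ALFRED", "commonsense reasoning", False),
    EpisodeResult("EB-ALFRED", "long-term planning", False),
    EpisodeResult("EB-Manipulation", "spatial awareness", True),
]

rates = success_rate_by_capability(results)
for (env, cap), rate in sorted(rates.items()):
    print(f"{env:16s} {cap:24s} {rate:.0%}")
```

Reporting per group makes a weakness in a single capability visible even when the aggregate score looks acceptable.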

In conclusion, EmbodiedBench is a significant step toward understanding and improving the performance of MLLMs as embodied agents. By highlighting the limitations of current models, particularly in low-level manipulation, the research sets a clear agenda for future work in this evolving field.