An Expert Analysis of BLIVA: Enhancing Multimodal LLMs for Text-Rich Visual Question-Answering Tasks

The paper "BLIVA: A Simple Multimodal LLM for Better Handling of Text-Rich Visual Questions," offers a significant contribution to the field of Vision LLMs (VLMs) by extending LLMs with an enhanced comprehension of text within visual contexts. The challenge of integrating textual information present within images into LLMs remains a critical barrier in the deployment of these models in real-world applications, such as textual interpretation on road signs, product labels, or document images.

Novel Contributions

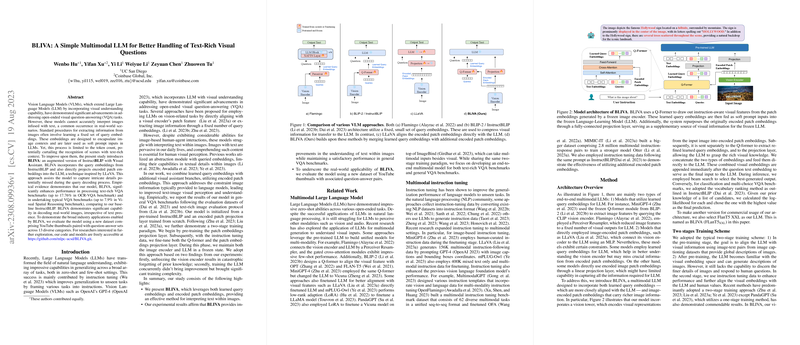

The work introduces BLIVA, a sophisticated adaptation of the InstructBLIP model, incorporating a Visual Assistant mechanism by blending query embeddings and encoded patch embeddings. This dual embedding strategy facilitates a deeper context capture from text-rich scenes, addressing the methodological limitations of prior models, which often rely on fixed query embeddings, thus limiting their scene understanding.

The paper demonstrates that by combining query embeddings and patch embeddings directly within the LLM input space, the model significantly improves text-rich visual perception. This is quantitatively corroborated by notable performance enhancements across several evaluations. Specifically, BLIVA achieves up to a 17.76% performance boost on the OCR-VQA benchmark and a 7.9% improvement in Visual Spatial Reasoning tasks over its precursor, InstructBLIP.

Performance Validation and Implications

BLIVA's effectiveness is measured against both text-rich and general VQA datasets. The results detailed in their experiments show that BLIVA outperformed existing models like mPLUG-Owl, LLaVA, and others on several challenging benchmarks, demonstrating superiority particularly in text-rich scenarios. The introduction of the YouTube thumbnail dataset, YTTB-VQA, further illustrates the model’s broad-ranging applicability to industry-relevant tasks—highlighting its potential for real-world deployments involving complex visual data.

The methodology employed by BLIVA proves vital in enhancing the interpretative capacity of VLMs, paving the way for more intricate interactions between AIs and visually-driven textual data. The inclusion of patch embeddings in tandem with learned query embeddings introduces a framework that can be readily adapted and scaled across varying LLM architectures, emphasizing its utility in multimodal instruction tuning.

Theoretical Insights and Future Directions

This research sheds light on the limitations of contemporary LLMs in dealing with text-rich visuals, offering a robust solution that could inspire future developments in the field. The architecture of BLIVA, emphasizing the importance of a multimodal instruction tuning paradigm, points toward a future where models could potentially autonomously adjust their embedding strategies based on the nature of the visual inputs.

The paper’s contributions also open avenues for exploring more efficient and scalable training paradigms that leverage diverse data sources, such as instructional data meta-learned across different modalities. Additionally, the possibility of extending BLIVA’s architecture to other modalities, beyond imagery, could be an exciting research directive, aligning with concurrent advances in general-purpose AI agents.

In conclusion, the research offers a substantial advancement in the multimodal AI research space by providing tangible improvements in text-rich VQA tasks. As LLMs continue to integrate more complex data types, BLIVA's principles may well inform the design of the next generation of AI models, achieving a more nuanced understanding of our multimodal world.