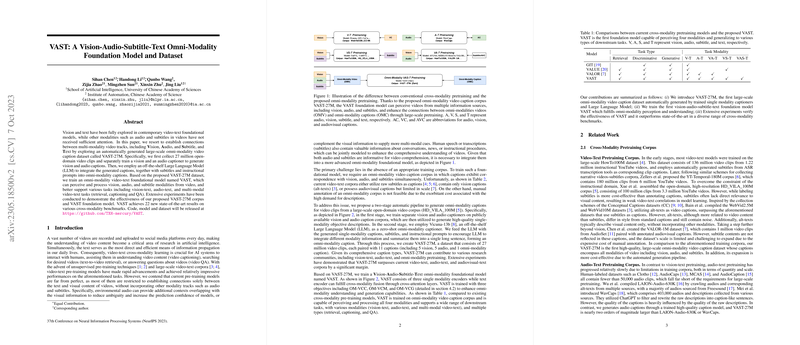

The paper "VAST: A Vision-Audio-Subtitle-Text Omni-Modality Foundation Model and Dataset" (Chen et al., 2023) introduces a new large-scale dataset, VAST-27M, and a foundation model, VAST, designed to improve video understanding by incorporating vision, audio, and subtitle modalities alongside text. Existing video-text models often focus solely on vision and text, neglecting other valuable information streams present in videos, such as audio (environmental sounds, speech) and subtitles (transcriptions, dialogue). VAST aims to bridge this gap by enabling a more comprehensive understanding of video content through large-scale pretraining on multi-modal data.

The core contribution is the VAST-27M dataset, which comprises 27 million video clips, each containing vision, audio, and subtitle streams and annotated with captions, including a novel omni-modality caption. The dataset is generated automatically with a two-stage pipeline. First, separate vision and audio captioners are trained on existing single-modality datasets and then used to describe the visual and audio content of video clips sampled from the HD_VILA_100M dataset [xue2022advancing]. Second, an off-the-shelf LLM, Vicuna-13b [vicuna2023], acts as an omni-modality captioner: given the generated single-modality captions, the raw subtitles, and an instruction prompt, it synthesizes them into a single, coherent omni-modality caption. Because no manual annotation is involved, dataset creation scales far more cheaply, which addresses the difficulty of obtaining large-scale multi-modal video-text data. VAST-27M provides 11 captions per video clip (5 vision, 5 audio, 1 omni-modality), 297 million captions in total, making it larger and broader in modality coverage than prior corpora such as HowTo100M [miech2019howto100m] or WebVid [bain2021frozen]. When used for training, the generated vision and audio captions yield better downstream results on modality-specific tasks than raw subtitles or existing auto-generated datasets such as WavCaps [mei2023wavcaps].
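The two-stage pipeline can be sketched as follows. This is a hypothetical illustration, not the authors' code: the captioners and the LLM are stand-in callables (in VAST they would be the trained vision and audio captioners and Vicuna-13b), and the prompt wording in the invented helper `build_omni_prompt` is not the paper's actual instruction prompt. The sketch also checks the caption arithmetic: 5 + 5 + 1 = 11 captions per clip, and 27M × 11 = 297M.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ClipAnnotation:
    """All captions produced for one video clip."""
    clip_id: str
    subtitle: str
    vision_captions: List[str] = field(default_factory=list)
    audio_captions: List[str] = field(default_factory=list)
    omni_caption: str = ""

    def num_captions(self) -> int:
        return len(self.vision_captions) + len(self.audio_captions) + (1 if self.omni_caption else 0)


def build_omni_prompt(vision_captions, audio_captions, subtitle):
    """Hypothetical instruction prompt for the omni-modality captioner (wording invented)."""
    return "\n".join([
        "Combine the following descriptions of one video clip into a single fluent caption.",
        "Vision: " + " | ".join(vision_captions),
        "Audio: " + " | ".join(audio_captions),
        "Subtitle: " + subtitle,
    ])


def annotate_clip(clip_id, subtitle, vision_captioner: Callable, audio_captioner: Callable,
                  llm: Callable, n_per_modality: int = 5) -> ClipAnnotation:
    # Stage 1: sample several captions per modality from the single-modality captioners.
    vision = [vision_captioner(clip_id, k) for k in range(n_per_modality)]
    audio = [audio_captioner(clip_id, k) for k in range(n_per_modality)]
    # Stage 2: the LLM fuses the single-modality captions and the raw subtitle.
    omni = llm(build_omni_prompt(vision, audio, subtitle))
    return ClipAnnotation(clip_id, subtitle, vision, audio, omni)


# Toy usage with stub captioners standing in for the real models.
ann = annotate_clip(
    "clip_0001", "and now we add the flour",
    vision_captioner=lambda cid, k: f"a person mixes dough in a bowl (sample {k})",
    audio_captioner=lambda cid, k: f"a woman speaks over clinking dishes (sample {k})",
    llm=lambda prompt: "A woman explains adding flour while mixing dough in a bowl.",
)
print(ann.num_captions())               # 11
print(27_000_000 * ann.num_captions())  # 297000000
```

Passing the models in as callables keeps the pipeline logic separate from any particular captioner or LLM serving stack.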

Based on this dataset, the VAST foundation model is trained. VAST utilizes a Transformer-based architecture consisting of three encoders: a vision encoder (initialized from EVAClip-ViT-G [sun2023eva]), an audio encoder (initialized from BEATs [chen2022beats]), and a text encoder (initialized from BERT-B [devlin2018bert]). The text encoder also incorporates cross-attention mechanisms to fuse information from different modalities. The model processes raw video frames, audio spectrograms, and tokenized subtitles and captions. For pretraining, VAST is trained with three objectives:

- Omni-Modality Video-Caption Contrastive Loss (OM-VCC): This objective aligns the global representations of the omni-modal video (concatenation of subtitle, vision, and audio global features) and the omni-modality caption in a shared semantic space using a contrastive loss, similar to CLIP [radford2021learning].

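$$
\mathcal{L}_{\text{OM-VCC}} = -\frac{1}{2}\sum_{i=1}^{B}\left[\log\frac{\exp\big(s(f_{omv}^{i}, f_{omc}^{i})/\tau\big)}{\sum_{j=1}^{B}\exp\big(s(f_{omv}^{i}, f_{omc}^{j})/\tau\big)} + \log\frac{\exp\big(s(f_{omv}^{i}, f_{omc}^{i})/\tau\big)}{\sum_{j=1}^{B}\exp\big(s(f_{omv}^{j}, f_{omc}^{i})/\tau\big)}\right]
$$

where $f_{omv}^{i}$ is the $i$-th omni-modal video global representation, $f_{omc}^{i}$ is the $i$-th omni-modality caption global representation, $B$ is the batch size, $s(\cdot,\cdot)$ is the dot product, and $\tau$ is a learnable temperature.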

- Omni-Modality Video-Caption Matching Loss (OM-VCM): This binary classification task predicts if a given video and caption pair is matched. It utilizes cross-attention where the text encoder attends to the concatenated unpooled features of all modalities, providing fine-grained interaction. Hard negative mining is used to create challenging negative pairs.

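$$
\mathcal{L}_{\text{OM-VCM}} = -\mathbb{E}_{(i,j)\sim\mathcal{B}}\big[\,y\log p_{vcm} + (1-y)\log(1-p_{vcm})\,\big]
$$

where $y = 1$ for a matched pair and $0$ otherwise, and $p_{vcm}$ is the predicted matching probability.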

- Omni-Modality Video Caption Generation Loss (OM-VCG): This is a conditional masked language modeling task. The model predicts masked tokens in the omni-modality caption conditioned on the video features (via cross-attention) and the unmasked caption tokens (via causal self-attention).

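$$
\mathcal{L}_{\text{OM-VCG}} = -\mathbb{E}_{(V,T)\sim\mathcal{B}}\big[\log P(T_m \mid T_{<m}, V)\big]
$$

where $T_m$ are the masked tokens, $T_{<m}$ are the tokens before the masked ones, and $V$ denotes the omni-modal video features attended to through cross-attention.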
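The three pretraining objectives can be sketched in plain Python. This is a minimal, framework-free illustration under stated assumptions, not VAST's implementation: it uses Python lists instead of tensors, assumes caption embeddings already have the same length as the concatenated video vector (for example via a projection head, not shown), uses a simple "most similar non-matching caption" rule as one form of hard-negative mining (the paper's exact sampling scheme may differ), and takes matching probabilities and token log-probabilities as given inputs from hypothetical model heads.

```python
import math


def dot(u, v):
    """s(.,.): plain dot product between two feature vectors."""
    return sum(a * b for a, b in zip(u, v))


def omni_video_global(f_sub, f_vis, f_aud):
    """Concatenate subtitle, vision and audio global features into one vector."""
    return list(f_sub) + list(f_vis) + list(f_aud)


def log_softmax_at(logits, idx):
    """Numerically stable log-softmax of `logits`, evaluated at position `idx`."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return logits[idx] - log_sum_exp


def om_vcc_loss(video_feats, caption_feats, tau):
    """Symmetric (video->caption and caption->video) contrastive loss, summed over the batch."""
    n = len(video_feats)
    sim = [[dot(video_feats[i], caption_feats[j]) / tau for j in range(n)] for i in range(n)]
    v2t = sum(log_softmax_at(sim[i], i) for i in range(n))                         # rows
    t2v = sum(log_softmax_at([sim[j][i] for j in range(n)], i) for i in range(n))  # columns
    return -0.5 * (v2t + t2v)


def hardest_negative(sim_row, positive_idx):
    """Index of the most similar non-matching caption: one simple hard-negative rule."""
    return max((j for j in range(len(sim_row)) if j != positive_idx), key=lambda j: sim_row[j])


def om_vcm_loss(probs, labels, eps=1e-12):
    """Binary cross-entropy over (predicted match probability, 0/1 label) pairs."""
    terms = [y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps) for p, y in zip(probs, labels)]
    return -sum(terms) / len(terms)


def om_vcg_loss(token_log_probs, mask):
    """Mean negative log-likelihood over masked caption positions only.

    token_log_probs[t] is assumed to be log P(T_t | T_<t, V) from a decoder (not shown)."""
    masked = [lp for lp, m in zip(token_log_probs, mask) if m]
    return -sum(masked) / len(masked)


# Toy usage with hypothetical 3-D features: each video matches its own caption.
video = [omni_video_global([1.0], [0.0], [0.0]), omni_video_global([0.0], [1.0], [0.0])]
captions = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(om_vcc_loss(video, captions, tau=0.05))  # close to 0: matched pairs dominate
```

Dividing by a small temperature sharpens the softmax, so well-separated matched pairs drive the contrastive loss toward zero, while uninformative features leave it at $B \log B$.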

To handle downstream tasks in which some modalities are absent, VAST uses a "Modality Grouping" strategy during training. It trains on the relations between text and several combinations of video modalities (V: vision, A: audio, S: subtitle), namely V-T, A-T, VA-T, VS-T, and VAS-T, using the corresponding vision, audio, and omni-modality captions. This is intended to keep the model robust and general across task setups that supply different subsets of modalities. The overall loss is a weighted sum of the losses over all modality groups and objectives.
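Modality grouping amounts to selecting a subset of the video modalities per training step, pairing it with a caption type, and summing the per-group losses. A minimal sketch under explicit assumptions: the group-to-caption mapping below (vision captions for V-T, audio captions for A-T, omni-modality captions for the other groups) is one reading of the description, the helper names are hypothetical, and all loss weights default to 1.0 because the exact weights are not given here.

```python
# Hypothetical mapping from modality group to caption type (an assumption, see lead-in).
GROUP_TO_CAPTION = {
    "V-T": "vision",
    "A-T": "audio",
    "VA-T": "omni",
    "VS-T": "omni",
    "VAS-T": "omni",
}
MODALITY_KEYS = {"V": "vision", "A": "audio", "S": "subtitle"}


def select_inputs(sample, group):
    """Keep only the video modalities named in `group` and pick the matching caption."""
    letters = group.split("-")[0]  # e.g. "VAS" for "VAS-T"
    video = {MODALITY_KEYS[c]: sample[MODALITY_KEYS[c]] for c in letters}
    caption = sample["captions"][GROUP_TO_CAPTION[group]]
    return video, caption


def total_loss(per_group_losses, weights=None):
    """Weighted sum over (group, objective); unspecified weights default to 1.0 (an assumption)."""
    weights = weights or {}
    return sum(
        weights.get((group, obj), 1.0) * value
        for group, objs in per_group_losses.items()
        for obj, value in objs.items()
    )


sample = {
    "vision": "frames", "audio": "spectrogram", "subtitle": "subtitle tokens",
    "captions": {"vision": "vision caption", "audio": "audio caption", "omni": "omni caption"},
}
print(select_inputs(sample, "VS-T"))
# ({'vision': 'frames', 'subtitle': 'subtitle tokens'}, 'omni caption')
print(total_loss({"V-T": {"vcc": 1.0, "vcm": 0.5}, "VAS-T": {"vcc": 2.0}}))  # 3.5
```

Keying weights by `(group, objective)` lets individual terms be rescaled without touching the rest of the sum.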

VAST has 1.3B parameters. It was trained on 64 Tesla V100 GPUs for 205K steps on a combined corpus including VAST-27M, VALOR-1M [chen2023valor], WavCaps [mei2023wavcaps], and large-scale image-text datasets (CC14M, LAION-400M subsets). For consistency, the captions of the image-text data were replaced with captions generated by the vision captioner. The appendix lists the hyperparameters for downstream fine-tuning, including learning rates, batch sizes, epochs, and the number of sampled video frames and audio clips.

VAST demonstrates strong performance across a wide range of downstream benchmarks, achieving state-of-the-art results on 22 benchmarks covering vision-text, audio-text, and multi-modal video-text tasks such as retrieval, captioning, and question answering. Notably, it shows significant improvements on audio-text benchmarks, which the authors attribute to the scale and quality of the audio captions in VAST-27M, and it excels on multi-modal benchmarks that require reasoning over audio and/or subtitles, such as YouCook2 [zhou2018towards], VALOR-32K [chen2023valor], and TVC [lei2020tvr]. Ablation studies confirm the benefit of the LLM-generated omni-modality captions and the effectiveness of multi-modal pretraining and the modality grouping strategy for improving performance and generalization.

The practical applications of VAST are broad, including enhanced video search and recommendation (e.g., finding videos based on sounds or dialogue), automatic generation of detailed video descriptions for accessibility or content summarization, and more robust video question answering systems that can leverage all available information streams. Developers can leverage the pre-trained VAST model as a foundation for building applications requiring complex video understanding. Fine-tuning on specific domain datasets can adapt VAST to tasks like educational video analysis, surveillance footage interpretation, or entertainment content processing. Computational requirements for training are substantial (large-scale distributed training), but inference and fine-tuning on smaller datasets would be more manageable, depending on the model size and the number of modalities used. Potential limitations include the dependency on the quality of auto-generated captions and subtitles, which might inherit biases from the source data and LLM, and the need for further integration with larger LLMs for more advanced generation capabilities. The release of the dataset and model aims to facilitate further research in omni-modal video understanding.
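Text-to-video search of the kind described above reduces to ranking videos by similarity in the shared embedding space learned with the contrastive objective. A minimal sketch, assuming video and query embeddings have already been computed by the pretrained encoders; the ids and vectors below are invented toy values, and `retrieve` is a hypothetical helper. A production system would typically replace the linear scan with an approximate nearest-neighbor index.

```python
import heapq


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def retrieve(query_emb, video_index, k=3):
    """Rank indexed videos by dot-product similarity to a text query embedding."""
    scored = ((dot(query_emb, emb), vid) for vid, emb in video_index.items())
    return [vid for _, vid in heapq.nlargest(k, scored)]


# Hypothetical 3-D embeddings; real ones would come from the pretrained encoders.
video_index = {
    "cooking_demo": [0.9, 0.1, 0.0],
    "live_concert": [0.1, 0.9, 0.2],
    "recorded_lecture": [0.0, 0.3, 0.9],
}
query = [0.0, 1.0, 0.1]  # stands in for an embedded query such as "crowd cheering"
print(retrieve(query, video_index, k=2))  # ['live_concert', 'recorded_lecture']
```

`heapq.nlargest` avoids sorting the whole index when only the top `k` results are needed.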