Improving Zero-shot and Few-shot Learning of LLMs via Chain-of-Thought Fine-Tuning

Introduction to the CoT Collection

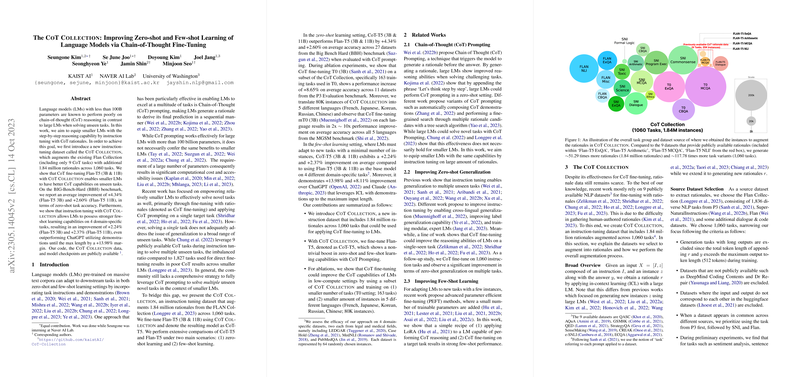

The CoT Collection dataset aims to enhance the reasoning capabilities of smaller language models (LMs) by fine-tuning them on Chain-of-Thought (CoT) rationales. It adds 1.84 million rationales across 1,060 tasks to the existing Flan Collection, which included only 9 CoT tasks. The goal of this extension is to narrow the gap in zero-shot and few-shot learning between smaller LMs and their larger counterparts.
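To make CoT fine-tuning concrete, the sketch below packs one task instance and its rationale into a source/target pair for a sequence-to-sequence model such as Flan-T5. The template, the `build_cot_example` helper, and the trigger phrase "So the answer is" are hypothetical choices for illustration, not the CoT Collection's actual data format.

```python
from dataclasses import dataclass

# Hypothetical phrase separating the rationale from the final answer (not from the paper).
ANSWER_TRIGGER = "So the answer is"


@dataclass(frozen=True)
class CoTExample:
    source: str  # model input: instruction followed by the task instance
    target: str  # training label: rationale followed by the final answer


def build_cot_example(instruction: str, task_input: str, rationale: str, answer: str) -> CoTExample:
    """Pack one (instance, rationale, answer) triple into a seq2seq training pair.

    The layout is an illustrative assumption, not the CoT Collection's exact template.
    """
    source = f"{instruction.strip()}\n\n{task_input.strip()}"
    target = f"{rationale.strip()} {ANSWER_TRIGGER} {answer.strip()}."
    return CoTExample(source=source, target=target)


if __name__ == "__main__":
    ex = build_cot_example(
        instruction="Answer the arithmetic question.",
        task_input="Tom has 3 apples and buys 2 more. How many apples does he have?",
        rationale="Tom starts with 3 apples. Buying 2 more gives 3 + 2 = 5.",
        answer="5",
    )
    print(ex.source)
    print(ex.target)
```

Training on `target` rather than on the bare answer is what teaches the model to produce a rationale before committing to an answer.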

The Origin and Composition of the CoT Collection

The CoT Collection was introduced in response to prior work showing that CoT prompting is difficult to apply effectively to smaller LMs. It addresses the need for a large dataset for instruction tuning with CoT rationales, so that smaller LMs can acquire step-by-step reasoning ability. Notably, the dataset does more than enlarge the Flan Collection (raising the number of CoT tasks from 9 to 1,060): it supplies rationales spanning a wide range of tasks, which is important for generalizing to unseen tasks.
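Generalization to unseen tasks is only meaningful if evaluation tasks are kept out of training. The minimal sketch below, assuming each example is a dict with a `task` field, builds a training mixture that drops held-out tasks and caps the number of examples per task so that no single task dominates. The function name and the per-task cap are hypothetical and are not documented settings of the CoT Collection.

```python
from collections import defaultdict
from typing import Iterable


def build_training_mixture(
    examples: Iterable[dict],
    held_out_tasks: set[str],
    max_per_task: int,
) -> list[dict]:
    """Keep examples from tasks outside the evaluation set, capped per task.

    Excluding held-out tasks keeps evaluation tasks unseen; the per-task cap is a
    hypothetical knob that stops a few large tasks from dominating the mixture.
    """
    kept: list[dict] = []
    counts: defaultdict = defaultdict(int)  # examples kept so far, per task
    for ex in examples:
        task = ex["task"]
        if task in held_out_tasks:
            continue  # never train on an evaluation task
        if counts[task] >= max_per_task:
            continue  # this task has already reached its cap
        counts[task] += 1
        kept.append(ex)
    return kept
```

Examples are kept in input order, so shuffling (if desired) should happen before calling the function.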

Evaluation and Key Findings

The paper reports the performance of CoT fine-tuned Flan-T5 models at two scales (3B and 11B) in both zero-shot and few-shot settings. On the BIG-Bench-Hard (BBH) benchmark, CoT fine-tuning improves average zero-shot accuracy by +4.34% for Flan-T5 3B and +2.60% for Flan-T5 11B. In few-shot experiments on four domain-specific tasks, CoT fine-tuning also yields improvements, with the fine-tuned models outperforming even larger models, which supports the claim that the approach strengthens the reasoning capabilities of smaller LMs.
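The reported gains are differences in average accuracy across BBH tasks. A minimal sketch of such scoring is shown below, assuming the final answer follows the phrase "answer is" in each generated rationale and that accuracy is exact match, macro-averaged over tasks. The extraction rule and the helper names are assumptions, not the paper's evaluation code.

```python
import re
from statistics import mean


def extract_answer(generation: str, trigger: str = "answer is") -> str:
    """Return the text after the LAST occurrence of `trigger` (case-insensitive).

    The trigger phrase is an assumption; returns "" when no answer is stated.
    """
    matches = list(re.finditer(re.escape(trigger), generation, flags=re.IGNORECASE))
    if not matches:
        return ""
    tail = generation[matches[-1].end():]
    # Drop surrounding whitespace and a trailing period, e.g. " (B)." -> "(B)".
    return tail.strip().rstrip(".").strip()


def exact_match_accuracy(generations: list[str], gold: list[str]) -> float:
    """Fraction of generations whose extracted answer equals the gold label (case-insensitive)."""
    if not gold or len(generations) != len(gold):
        raise ValueError("generations and gold must be non-empty and of equal length")
    hits = sum(
        extract_answer(g).lower() == a.strip().lower()
        for g, a in zip(generations, gold)
    )
    return hits / len(gold)


def average_gain(baseline: dict[str, float], cot_tuned: dict[str, float]) -> float:
    """Macro-averaged accuracy gain in percentage points (per-task accuracies in [0, 1])."""
    if not baseline or baseline.keys() != cot_tuned.keys():
        raise ValueError("both models must be scored on the same non-empty task set")
    tasks = sorted(baseline)
    return 100 * (mean(cot_tuned[t] for t in tasks) - mean(baseline[t] for t in tasks))
```

With toy values, `average_gain({"t1": 0.5, "t2": 0.6}, {"t1": 0.55, "t2": 0.65})` returns about 5.0 percentage points. Taking the last "answer is" rather than the first tolerates rationales that revise an earlier tentative answer.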

Practical Implications and Future Directions

The paper underscores the potential of the CoT Collection to narrow the gap between smaller and larger LMs in reasoning and instruction following. It opens avenues for further research on optimizing CoT prompting strategies for a broader set of languages and tasks, especially in low-resource settings. The findings also challenge the prevailing tendency to treat scale (model parameter count) as the sole driver of performance, highlighting the critical role of diverse training data in achieving generalization.

Conclusion

Overall, the CoT Collection and its associated findings make a significant contribution to ongoing efforts to improve the reasoning and learning capabilities of AI systems. By demonstrating tangible improvements in both zero-shot and few-shot learning, the dataset serves as a valuable resource for further research and emphasizes the importance of curated, task-diverse training data in unlocking the full potential of smaller LMs.