LM-Guided Chain-of-Thought: Enhancing Reasoning in LLMs with Smaller Counterparts

Introduction

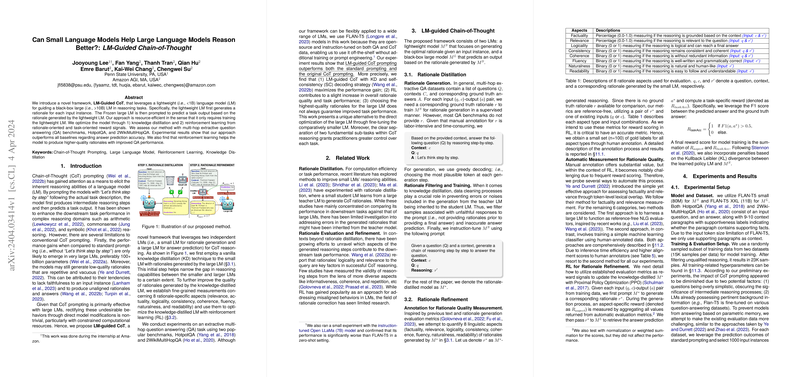

The research presents an approach to improving reasoning in large language models (LMs) with the help of smaller, lightweight LMs. The strategy, termed LM-Guided Chain-of-Thought (CoT), is a two-step process: a small LM generates a rationale for a given input, and that rationale then guides a larger, black-box LM in predicting the task output. Unlike methods that require extensive resources to train large models, the framework only requires training the smaller LM. The small LM is first trained with knowledge distillation and then fine-tuned with reinforcement learning using rationale-oriented and task-oriented rewards. The resulting system outperforms the baselines on multi-hop extractive question answering tasks.

Methodology

The framework comprises two main components:

- Rationale Distillation: Involves generating a rationale for each input instance using a small LM. This step utilizes knowledge distillation from a larger LM to bootstrap the smaller model's reasoning capabilities, focusing on generating high-quality rationales.

- Rationale Refinement: Builds upon the distilled model, applying reinforcement learning to optimize it based on a set of predefined rationale-specific aspects. These aspects include relevance, factuality, and coherence among others. The reinforcement learning component is designed to refine the quality of rationales further, thereby enhancing the overall reasoning process.
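To make the reward signal used in the refinement step more concrete, the following is a minimal sketch of how a rationale-oriented reward and a task-oriented reward might be combined into one scalar. The function name `combined_reward`, the plain averaging of aspect scores, the `task_weight` parameter, and the exact-match task reward are all illustrative assumptions; the summary above does not specify how the paper aggregates its rewards.

```python
from typing import Dict


def combined_reward(
    aspect_scores: Dict[str, float],
    predicted_answer: str,
    gold_answer: str,
    task_weight: float = 1.0,
) -> float:
    """Combine per-aspect rationale scores with a task-level reward.

    Assumption: aspect scores (e.g. relevance, factuality, coherence) lie in
    [0, 1] and are simply averaged. The real aggregation may differ.
    """
    if not aspect_scores:
        raise ValueError("at least one aspect score is required")

    # Rationale-oriented reward: mean of the aspect scores.
    rationale_reward = sum(aspect_scores.values()) / len(aspect_scores)

    # Task-oriented reward: 1.0 on a case-insensitive exact match, else 0.0.
    is_match = predicted_answer.strip().lower() == gold_answer.strip().lower()
    task_reward = 1.0 if is_match else 0.0

    return rationale_reward + task_weight * task_reward


# Hypothetical scores for a single generated rationale.
scores = {"relevance": 1.0, "factuality": 0.5, "coherence": 0.0}
reward = combined_reward(scores, predicted_answer="Paris", gold_answer="paris ")
```

In a reinforcement learning loop, a scalar like this would be computed per sampled rationale and passed to the policy update for the small LM. The weighting between the two terms is a design choice that would need tuning.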

The experimental setup employs FLAN-T5 models of different sizes to serve the roles of small and large LMs, utilizing HotpotQA and 2WikiMultiHopQA datasets for evaluation. The method's success is attributed to its novel split between rationale generation and answer prediction, allowing for focused optimization of each part.
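The split between rationale generation and answer prediction can be sketched as a small pipeline. This is a hedged illustration only: `TextGenerator`, `lm_guided_cot`, the prompt templates, and the toy stand-in models are hypothetical names and choices made for this example, not the prompts or interfaces used in the paper. Any callable that maps a prompt string to generated text could play either role.

```python
from typing import Callable, Dict

# Hypothetical alias: any function that maps a prompt to generated text.
TextGenerator = Callable[[str], str]


def lm_guided_cot(
    question: str,
    context: str,
    small_lm: TextGenerator,
    large_lm: TextGenerator,
) -> Dict[str, str]:
    """Two-step inference: the small LM writes a rationale, the large LM answers."""
    # Step 1: the small (trainable) LM generates a rationale.
    rationale_prompt = (
        f"Context: {context}\nQuestion: {question}\nRationale:"
    )
    rationale = small_lm(rationale_prompt).strip()

    # Step 2: the large (frozen, black-box) LM predicts the answer,
    # conditioned on the rationale.
    answer_prompt = (
        f"Context: {context}\nQuestion: {question}\n"
        f"Rationale: {rationale}\nAnswer:"
    )
    answer = large_lm(answer_prompt).strip()

    return {"rationale": rationale, "answer": answer}


# Toy stand-ins so the sketch runs without any model weights.
def toy_small_lm(prompt: str) -> str:
    return "The film was directed by Jane Doe, who was born in 1970."


def toy_large_lm(prompt: str) -> str:
    return " 1970" if "born in 1970" in prompt else " unknown"


result = lm_guided_cot(
    question="When was the director of the film born?",
    context="(retrieved passages would go here)",
    small_lm=toy_small_lm,
    large_lm=toy_large_lm,
)
```

Because the large LM is only ever called through a text-in, text-out interface, it can stay frozen while all training effort goes into the small rationale generator.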

Results

The experimental results indicate several key findings:

- The LM-Guided CoT method outperforms the baselines across multiple metrics, including answer prediction accuracy. This demonstrates the efficacy of the proposed two-step reasoning process, especially when combined with self-consistency decoding.

- The application of reinforcement learning contributes to a noticeable improvement in the quality of generated rationales and, subsequently, question answering performance.

- The experiments show that selecting the highest-quality rationales does not always lead to better task outcomes, suggesting a complex relationship between rationale quality and effective reasoning in LMs.
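Self-consistency decoding, mentioned in the first finding, is commonly implemented as a majority vote over several sampled outputs. The sketch below shows that voting step in isolation, assuming the answers have already been sampled. The names `normalize_answer` and `self_consistent_answer` and the lowercase, whitespace-collapsing normalization are assumptions for illustration, not details taken from the paper.

```python
from collections import Counter
from typing import List


def normalize_answer(answer: str) -> str:
    """Lowercase and collapse whitespace so trivially different strings match."""
    return " ".join(answer.lower().split())


def self_consistent_answer(sampled_answers: List[str]) -> str:
    """Return the most frequent answer among the samples (majority vote).

    Ties are resolved in favor of the answer that appeared first.
    """
    if not sampled_answers:
        raise ValueError("at least one sampled answer is required")
    counts = Counter(normalize_answer(a) for a in sampled_answers)
    return counts.most_common(1)[0][0]


# Hypothetical answers obtained from several sampled rationales.
samples = ["Paris", "paris ", "Lyon", "Paris", "Marseille"]
final_answer = self_consistent_answer(samples)
```

Voting over answers rather than picking the single best-scored rationale fits the last finding above, since the highest-quality rationale is not guaranteed to yield the correct answer.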

Implications and Future Work

This research opens up new avenues for enhancing reasoning in LMs, particularly by using smaller models to guide larger counterparts. The LM-Guided CoT framework offers a resource-efficient way to improve large LMs' reasoning capabilities without retraining them. The findings underscore the role of rationale quality in complex reasoning tasks, while also showing that its link to task performance is not straightforward. Future research could explore how well the approach generalizes to other kinds of reasoning tasks, integrate more sophisticated reinforcement learning techniques, and test other model combinations across diverse domains.

Limitations and Ethical Considerations

The paper acknowledges limitations, including its focus on FLAN-T5 models and multi-hop QA tasks, which may not fully represent the framework's applicability across different settings and tasks. Future work is encouraged to test the framework's robustness and versatility across various reasoning demands and model architectures. Ethically, while the generated rationales were monitored for factual and respectful content within this paper, deploying such models in real-world scenarios necessitates rigorous scrutiny to prevent the dissemination of inaccurate or harmful outputs.

Acknowledgments

The paper concludes by thanking the reviewers for their constructive feedback.