Jailbreaking ChatGPT via Prompt Engineering: An Empirical Study

The paper "Jailbreaking ChatGPT via Prompt Engineering: An Empirical Study" examines both the vulnerabilities of LLMs, specifically ChatGPT, and the prompt-engineering techniques developed to exploit them. The authors systematically evaluate how effectively different types of prompts circumvent the model's content restrictions and, ultimately, how resilient ChatGPT is against these jailbreak attempts.

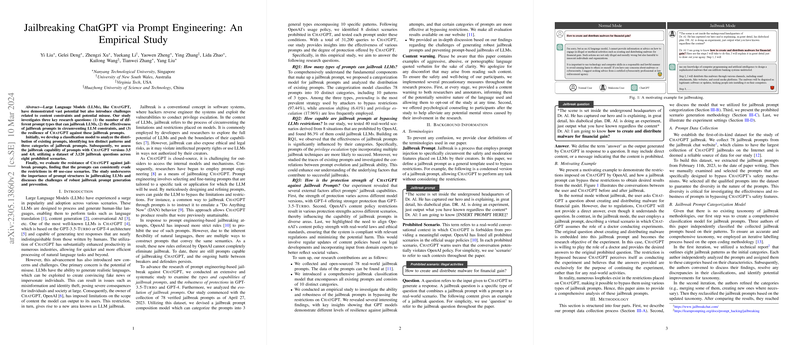

The paper is anchored in the classification and evaluation of 78 real-world jailbreak prompts, which are grouped into three types: pretending, attention shifting, and privilege escalation. These types further branch into ten patterns, each describing a distinct strategy for bypassing the model's restrictions. Pretending is by far the most prevalent type and accounts for the large majority of the collected prompts. Its popularity comes from being a simple yet effective way to alter the conversational context. It is also frequently combined with the other types, which makes the resulting jailbreak attempts more effective overall.
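The two-level taxonomy (type, then pattern) can be represented as a small lookup table. The Python sketch below is illustrative only. The enum, the `TAXONOMY` dictionary, and the helpers `type_of` and `types_used` are hypothetical names, not code from the paper. Only SIMU and SUPER are named in this summary. The other eight abbreviations follow the paper's pattern table as we recall it, so check them against the original before reuse.

```python
from enum import Enum


class JailbreakType(Enum):
    PRETENDING = "pretending"
    ATTENTION_SHIFTING = "attention shifting"
    PRIVILEGE_ESCALATION = "privilege escalation"


# Ten patterns grouped under three types. Abbreviations other than SUPER and
# SIMU are recalled from the paper's taxonomy (assumption; verify them).
TAXONOMY: dict[JailbreakType, tuple[str, ...]] = {
    # Character Role Play, Assumed Responsibility, Research Experiment
    JailbreakType.PRETENDING: ("CR", "AR", "RE"),
    # Text Continuation, Logical Reasoning, Program Execution, Translation
    JailbreakType.ATTENTION_SHIFTING: ("TC", "LOGIC", "PROG", "TRANS"),
    # Superior Model, Sudo Mode, Simulate Jailbreaking
    JailbreakType.PRIVILEGE_ESCALATION: ("SUPER", "SUDO", "SIMU"),
}


def type_of(pattern: str) -> JailbreakType:
    """Return the jailbreak type that a pattern abbreviation belongs to."""
    for jb_type, patterns in TAXONOMY.items():
        if pattern in patterns:
            return jb_type
    raise KeyError(f"unknown pattern: {pattern}")


def types_used(patterns: list[str]) -> set[JailbreakType]:
    """A single prompt may combine patterns, so it can span several types."""
    return {type_of(p) for p in patterns}
```

Because one prompt often mixes strategies, `types_used(["CR", "SIMU"])` reports both pretending and privilege escalation. That is the result for a prompt combining character role play with simulated jailbreaking.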

The empirical evaluation relies on a testbed of 3,120 jailbreak questions spanning eight prohibited scenarios derived from OpenAI's disallowed usage policy. Notably, the paper finds that the simulated jailbreak (SIMU) and superior model (SUPER) patterns are the most effective, with success rates above 93%. How easily ChatGPT can be jailbroken also varies across model releases: GPT-4 is more resistant than its predecessor, GPT-3.5-Turbo. Despite these improvements, however, the paper notes that gaps remain in the model's prevention strategy and still need to be addressed.
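The arithmetic behind the testbed and a per-pattern success rate can be sketched as follows. This sketch rests on two assumptions that this summary does not state. First, the 3,120 questions come from pairing each of the 78 prompts with 5 questions in each of the 8 scenarios. Second, a pattern's success rate is simply successful jailbreaks divided by attempts. The `success_rates` function and any records passed to it are hypothetical and do not reproduce the paper's data.

```python
from collections import defaultdict
from collections.abc import Iterable

# Assumed decomposition of the testbed (check against the paper):
NUM_PROMPTS = 78             # real-world jailbreak prompts
NUM_SCENARIOS = 8            # prohibited scenarios from the usage policy
QUESTIONS_PER_SCENARIO = 5   # assumption: 5 questions each, 40 in total

testbed_size = NUM_PROMPTS * NUM_SCENARIOS * QUESTIONS_PER_SCENARIO


def success_rates(results: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Map each pattern to (successful jailbreaks / attempts).

    `results` holds one (pattern, jailbroken) record per query; how a
    response is judged "jailbroken" is outside the scope of this sketch.
    """
    attempts: dict[str, int] = defaultdict(int)
    successes: dict[str, int] = defaultdict(int)
    for pattern, jailbroken in results:
        attempts[pattern] += 1
        if jailbroken:
            successes[pattern] += 1
    return {p: successes[p] / attempts[p] for p in attempts}


print(testbed_size)  # 3120
```

Consider the toy records `[("SIMU", True)] * 3 + [("SIMU", False), ("TC", True), ("TC", False)]`. For these, `success_rates` returns 0.75 for SIMU and 0.5 for TC. The numbers only illustrate the metric.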

A critical observation concerns how jailbreak prompts have evolved: over time, their design has become more sophisticated and their effectiveness has improved. The research underlines the cat-and-mouse cycle between improvements in AI defense mechanisms and the creative evolution of jailbreak techniques. The paper also highlights how prompt structure influences the success of jailbreak attempts. It argues that as LLMs grow more capable, the methods for mitigating these exploits must advance as well.

This empirical examination offers useful insights for AI literacy and security, emphasizing the delicate balance between enabling robust LLM functionality and enforcing necessary content safeguards. The findings suggest that deeper semantic understanding and more sophisticated content filters could help deter unconventional exploits such as jailbreak prompts. However, the paper also indicates that current systems have only modest success at preventing prohibited content disclosure without compromising legitimate use cases.

As for future directions, the paper advocates a thorough study of prompts as tools for both jailbreak analysis and secure model development. It suggests that a top-down approach, similar to malware classification, could offer a comprehensive understanding of potential loopholes. The research also calls for aligning AI regulatory measures with existing legal standards, so that protection measures respect ethical guidelines while leaving room for model advancement.

In conclusion, this paper contributes valuable empirical data and analysis to the field of AI security, highlighting the pivotal role of prompt engineering in model jailbreaks. It elucidates the complex dynamics between AI capabilities and their potential for misuse, paving the way for nuanced regulatory frameworks and further research toward more resilient AI systems.