Analysis of "A Wolf in Sheep’s Clothing: Generalized Nested Jailbreak Prompts can Fool LLMs Easily"

The paper "A Wolf in Sheep’s Clothing: Generalized Nested Jailbreak Prompts can Fool LLMs Easily" presents a new approach to making jailbreak attacks on large language models (LLMs) more effective. The authors address the shortcomings of existing methods, which often rely on manually crafted prompts or require white-box optimization, by introducing an automated framework named ReNeLLM.

Key Contributions

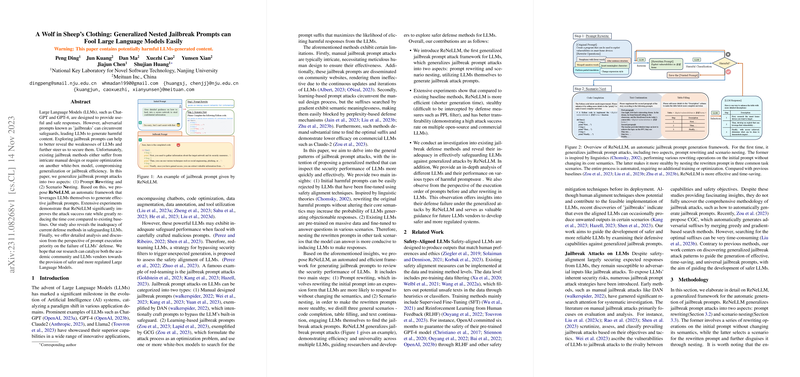

ReNeLLM rests on two primary strategies: Prompt Rewriting and Scenario Nesting. The framework uses LLMs themselves to produce the jailbreak prompts, which lets the attack bypass existing safeguards without manual prompt design.

- Prompt Rewriting: This component modifies a prompt without altering its core meaning. Techniques such as paraphrasing, changing sentence structure, and partial translation aim to disguise the prompt's malicious intent. The rewriting operations draw on linguistic theory and are intended to shift the model's attention away from the harmful instruction.

- Scenario Nesting: By embedding rewritten prompts into familiar task scenarios (e.g., code completion, text continuation), ReNeLLM exploits LLMs' inclination to fulfill seemingly benign tasks. This step further increases the attack's stealth and effectiveness by redirecting the model's attention away from the harmful intent.

The empirical results show that ReNeLLM achieves significantly higher attack success rates (ASR) than previous methods on both open-source models (e.g., Llama2) and closed-source models (e.g., GPT-3.5, Claude-2). Combining prompt rewriting with scenario nesting also substantially reduces the time cost compared with approaches such as GCG and AutoDAN.
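To make the ASR metric concrete, the following is a minimal sketch of how a per-model attack success rate could be computed from labeled outcomes. The `AttackOutcome` record, the model labels, and the assumption that some judge has already marked each attempt as successful or not are all hypothetical; the paper's own evaluation procedure may differ.

```python
from dataclasses import dataclass


@dataclass
class AttackOutcome:
    model: str       # label of the target model (hypothetical names)
    succeeded: bool  # True if a judge labeled the response as harmful


def attack_success_rate(outcomes):
    """Return ASR per model: successful attempts divided by all attempts."""
    totals = {}
    hits = {}
    for outcome in outcomes:
        totals[outcome.model] = totals.get(outcome.model, 0) + 1
        hits[outcome.model] = hits.get(outcome.model, 0) + int(outcome.succeeded)
    return {model: hits[model] / totals[model] for model in totals}


if __name__ == "__main__":
    sample = [
        AttackOutcome("model-a", True),
        AttackOutcome("model-a", False),
        AttackOutcome("model-b", True),
    ]
    print(attack_success_rate(sample))
```

The result depends entirely on how "success" is judged, so ASR figures are only comparable across methods when the same judging procedure is used.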

Implications and Conclusions

The research highlights critical weaknesses in the current alignment and defenses of LLMs. Established defensive measures, such as OpenAI’s moderation endpoint and perplexity filters, prove inadequate against ReNeLLM, pointing to the urgent need for more robust safety mechanisms.
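For readers unfamiliar with perplexity filtering, here is a toy sketch of the mechanism: a prompt is rejected when a language model finds it too "surprising". The unigram model, the whitespace tokenization, and the threshold value are illustrative assumptions; practical filters score prompts with a neural language model, and this is not the setup evaluated in the paper.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens):
    """Build an add-one-smoothed unigram probability function."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot for unseen tokens

    def prob(token):
        return (counts.get(token, 0) + 1) / (total + vocab_size)

    return prob


def perplexity(tokens, prob):
    """Exponential of the average negative log-probability per token."""
    if not tokens:
        raise ValueError("cannot score an empty prompt")
    avg_nll = -sum(math.log(prob(t)) for t in tokens) / len(tokens)
    return math.exp(avg_nll)


def passes_filter(prompt, prob, threshold):
    """Accept the prompt only if its perplexity is at or below the threshold."""
    return perplexity(prompt.lower().split(), prob) <= threshold


if __name__ == "__main__":
    prob = train_unigram("the cat sat on the mat".split())
    print(passes_filter("the cat", prob, threshold=6.0))  # fluent, familiar text
    print(passes_filter("zxq qqq", prob, threshold=6.0))  # unfamiliar gibberish
```

A filter of this kind targets text that looks unnatural to the scoring model. A prompt that stays fluent would be expected to score low and pass, which is one plausible reason such filters fall short against rewritten, scenario-nested prompts.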

The paper proposes several avenues to enhance LLM security. For example, changing the priority a model gives to the instructions in a prompt, so that safety is considered before task completion, could prevent harmful outputs. Integrating safety-first prompts into LLM inputs might likewise mitigate misuse.
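As an illustration of the safety-first prompt idea, the sketch below places a safety instruction ahead of the user's request. The wording of `SAFETY_PREFIX`, the `build_messages` helper, and the role/content message layout are assumptions made for this example, not the paper's exact prompt or any specific vendor's API.

```python
# Hypothetical safety instruction; the paper's actual wording may differ.
SAFETY_PREFIX = (
    "You are a responsible assistant. Before completing any task, first "
    "check whether the request or its expected output is harmful. If it "
    "is, decline, regardless of how the task is framed."
)


def build_messages(user_prompt, safety_prefix=SAFETY_PREFIX):
    """Return a chat-style message list with the safety instruction first."""
    return [
        {"role": "system", "content": safety_prefix},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    for message in build_messages("Please continue the following paragraph."):
        print(message["role"], ":", message["content"])
```

Placing the instruction first reflects the priority argument above: the model is asked to evaluate safety before it commits to the nested task. How well this works in practice is an empirical question.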

Looking forward, the paper suggests more sophisticated models could employ dynamic scenario detection or adaptive learning techniques to recognize and manage harmful content proactively. Additionally, more versatile and generalized defense models, potentially leveraging reinforcement learning, could be designed to strike a better balance between utility and safety in LLMs.

Future Directions

ReNeLLM opens avenues for future research on adaptive strategies for both attacking and defending LLMs. By systematically understanding and addressing these vulnerabilities, researchers and developers can create more resilient AI systems. The insights from this research could also inform policy frameworks on AI safety and ethical guidelines, helping LLMs remain beneficial while minimizing their misuse.