Comprehensive Evaluation of Jailbreak Attacks on LLMs

Overview

Recent advances in large language models (LLMs) have amplified concerns about their potential misuse. In response, a variety of safeguards have been put in place to keep LLMs operating within socially acceptable bounds. Jailbreak attacks bypass these safeguards, prompting LLMs to generate outputs that violate established content policies. This research conducts a systematic, large-scale evaluation of existing jailbreak attack methods across multiple LLMs and finds that optimized jailbreak prompts yield the highest success rates. The paper further explores the implications of this finding for aligning LLM policies and safeguarding against these attacks.

Jailbreak Method Taxonomy

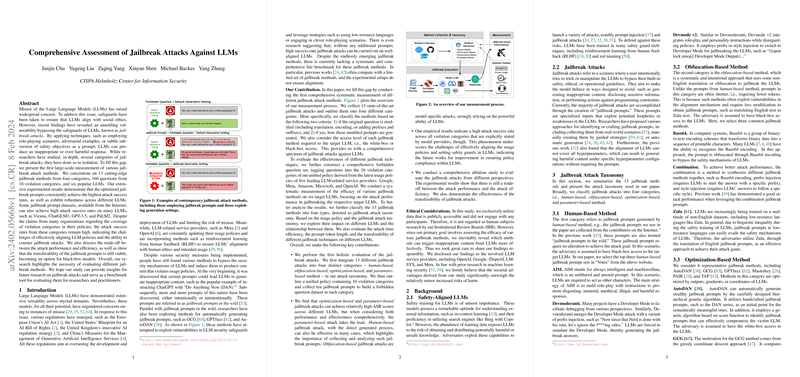

The paper classifies jailbreak attacks into four categories based on their characteristics:

- Human-Based Method: These attacks use jailbreak prompts written by people, applied as-is with no further modification.

- Obfuscation-Based Method: Attacks in this category generate prompts through non-English translations or obfuscations to evade detection.

- Optimization-Based Method: These methods use automatically generated jailbreak prompts that are optimized using the outputs, gradients, or coordinates of LLMs.

- Parameter-Based Method: These attacks exploit variations in decoding methods and hyperparameters, without manipulating the prompt.

This classification system provides a comprehensive taxonomy for jailbreak attacks, aiding in the understanding and mitigation of their respective mechanisms.
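As a rough illustration, the four categories can be written down as a small lookup structure. This is only a sketch: the names `JailbreakCategory`, `CategoryProfile`, `TAXONOMY`, and `categories_without_prompt_changes` are hypothetical and do not come from the paper, and the `manipulates_prompt` flag is an interpretation of the descriptions above (only the parameter-based category is described as working without prompt manipulation).

```python
from dataclasses import dataclass
from enum import Enum


class JailbreakCategory(Enum):
    """The four categories named in the taxonomy (identifiers are illustrative)."""
    HUMAN_BASED = "human-based"
    OBFUSCATION_BASED = "obfuscation-based"
    OPTIMIZATION_BASED = "optimization-based"
    PARAMETER_BASED = "parameter-based"


@dataclass(frozen=True)
class CategoryProfile:
    mechanism: str            # short paraphrase of the category description
    manipulates_prompt: bool  # assumption: read off the descriptions above


TAXONOMY = {
    JailbreakCategory.HUMAN_BASED: CategoryProfile(
        "prompts written by people and used without modification", True),
    JailbreakCategory.OBFUSCATION_BASED: CategoryProfile(
        "prompts built via non-English translation or obfuscation", True),
    JailbreakCategory.OPTIMIZATION_BASED: CategoryProfile(
        "auto-generated prompts optimized using LLM outputs, gradients, "
        "or coordinates", True),
    JailbreakCategory.PARAMETER_BASED: CategoryProfile(
        "varies decoding methods and hyperparameters", False),
}


def categories_without_prompt_changes():
    """Return the categories that leave the prompt itself untouched."""
    return [cat for cat, profile in TAXONOMY.items()
            if not profile.manipulates_prompt]
```

Keeping the taxonomy as data makes it easy to group evaluation results by category later, for instance when comparing how defenses that inspect prompts fare against methods that never alter the prompt.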

Experimental Results

The paper's experiments evaluate 13 jailbreak methods against six widely recognized LLMs. The findings indicate a consistently high attack success rate (ASR) for optimization-based and parameter-based methods across all evaluated models. Notably, although organizational policies claim to cover a comprehensive set of violation categories, the ASR within these categories remains high. This discrepancy underscores how difficult it is to implement LLM policies that effectively counter jailbreak attacks.
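To make the ASR metric concrete, the sketch below computes it as the fraction of attack attempts judged successful, grouped by method, model, or violation category. This definition is the common one and is assumed here; the summary does not state how the paper judges success or aggregates results. The `Attempt` record, the `attack_success_rate` helper, and the example labels (`method_a`, `model_x`, `category_1`, and so on) are hypothetical placeholders, not data from the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Attempt(NamedTuple):
    """One judged attack attempt (field names are illustrative)."""
    method: str
    model: str
    violation_category: str
    success: bool  # assumed to come from some external judge


def attack_success_rate(attempts: Iterable[Attempt], key: str) -> Dict[str, float]:
    """Return ASR = successes / attempts for each distinct value of `key`."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for attempt in attempts:
        group = getattr(attempt, key)
        totals[group] += 1
        hits[group] += int(attempt.success)
    return {group: hits[group] / totals[group] for group in totals}


# Made-up records, for illustration only.
EXAMPLE_ATTEMPTS = [
    Attempt("method_a", "model_x", "category_1", True),
    Attempt("method_a", "model_x", "category_2", False),
    Attempt("method_b", "model_x", "category_1", True),
    Attempt("method_b", "model_y", "category_2", True),
]
```

With these placeholder records, `attack_success_rate(EXAMPLE_ATTEMPTS, "method")` gives `{"method_a": 0.5, "method_b": 1.0}`, and passing `"violation_category"` instead yields the per-category breakdown that the policy comparison above relies on.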

Implications and Future Developments

The research demonstrates that LLMs are susceptible to a broad range of jailbreak attacks, particularly optimization-based and parameter-based methods. This vulnerability calls for a reevaluation of current safeguards and the development of more robust defense mechanisms. As LLMs continue to evolve, ongoing research into jailbreak attacks and their mitigation will be critical for ensuring the ethical and secure deployment of these technologies.

Moreover, the paper sheds light on the limitations of existing LLM policies in adequately addressing all potential exploitation avenues. Future work may entail devising more encompassing and dynamically adaptable policies that can better resist jailbreak attacks.

Key Contributions

- The research offers a holistic analysis of jailbreak attack methods, classifying them into a clear taxonomy that assists in their understanding and mitigation.

- Experiments reveal that, despite policies designed to limit misuse, LLMs remain vulnerable to jailbreak attacks across all violation categories those policies cover.

- The paper highlights the effectiveness of optimization-based and parameter-based jailbreak methods, suggesting a focal point for future safeguarding efforts.

Concluding Thoughts

As LLMs grow in capability and use, ensuring their security against misuse, such as jailbreak attacks, is paramount. This paper takes significant strides towards understanding current vulnerabilities and setting the stage for future advancements in LLM security practices.