Insightful Overview of "CODA-Prompt: COntinual Decomposed Attention-based Prompting for Rehearsal-Free Continual Learning"

The paper "CODA-Prompt: COntinual Decomposed Attention-based Prompting for Rehearsal-Free Continual Learning" addresses the persistent problem of catastrophic forgetting in computer vision models. Catastrophic forgetting is a central challenge in continual learning: when a model learns new tasks sequentially from a continuously evolving data distribution, training on the new data tends to overwrite what it learned about earlier tasks. Traditional solutions often rely on data rehearsal, i.e. storing and replaying past training samples, which incurs memory overhead and raises privacy concerns. The authors instead propose a rehearsal-free, prompt-based approach built on a frozen pre-trained Vision Transformer (ViT), in which prompts are decomposed into learnable components and the whole prompt-formation process is trained end to end.

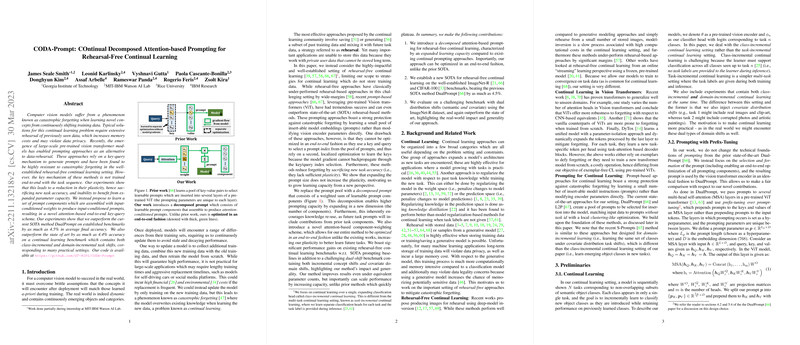

The paper identifies two limitations of existing prompt-based methods such as DualPrompt and L2P: they select prompts from a pool through a key-query matching step that is not optimized end to end with the task objective, and their capacity to accommodate new tasks is constrained. CODA-P replaces this with decomposed, attention-based prompting. It learns a set of prompt components, each paired with a key and an attention vector; for every input, the components are weighted by how well the input's query matches each key and are then summed into a single prompt. Because each prompt is assembled from a pool that includes the components learned for earlier tasks, prompt formation is scalable, flexible, and able to reuse previous knowledge. Replacing hard prompt selection with this soft weighting makes the key-query mechanism differentiable, so it can be optimized end to end together with the classification loss, which improves the model's plasticity and overall task performance.
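The weighting-and-assembly step can be sketched as follows. This is a minimal illustration, assuming the formulation as we understand it from the paper: each component P_m has a key K_m and an attention vector A_m, the query q(x) is rescaled feature-wise by A_m and compared with K_m by cosine similarity to give a weight alpha_m, and the prompt is the alpha-weighted sum of all components. The function names, the plain-list representation and the epsilon in the denominator are illustrative assumptions, not the authors' implementation, and how the resulting prompt is injected into the ViT is left out.

```python
import math


def cosine(u, v, eps=1e-12):
    """Cosine similarity between two equal-length vectors (eps avoids division by zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + eps)


def assemble_prompt(query, keys, attns, components):
    """Assemble one prompt as an attention-weighted sum of prompt components.

    query:      length-D query feature q(x), e.g. the frozen ViT's [CLS] embedding
    keys:       M key vectors K_m, each of length D
    attns:      M attention vectors A_m, each of length D
    components: M prompt components P_m, each a list of prompt_len rows of length D
    Returns the M weights alpha_m and the assembled prompt (prompt_len x D).
    """
    weights = []
    for key, attn in zip(keys, attns):
        # Feature-wise attention: rescale the query dimensions by A_m,
        # then score the result against the key K_m with cosine similarity.
        attended = [q * a for q, a in zip(query, attn)]
        weights.append(cosine(attended, key))

    # Soft weighted sum over all components: there is no top-k selection, so
    # every operation here would be differentiable in an autograd framework.
    prompt_len, dim = len(components[0]), len(components[0][0])
    prompt = [[0.0] * dim for _ in range(prompt_len)]
    for alpha, comp in zip(weights, components):
        for i in range(prompt_len):
            for j in range(dim):
                prompt[i][j] += alpha * comp[i][j]
    return weights, prompt


# Toy example: D = 2, M = 2 components, prompt length 1.
weights, prompt = assemble_prompt(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    attns=[[1.0, 1.0], [1.0, 1.0]],
    components=[[[2.0, 3.0]], [[5.0, 7.0]]],
)
print([round(w, 6) for w in weights], [[round(x, 6) for x in row] for row in prompt])
# prints [1.0, 0.0] [[2.0, 3.0]]
```

Because each alpha_m comes from a cosine similarity rather than a hard top-k lookup, gradients from the classification loss can reach the keys, the attention vectors and the components alike, which is the end-to-end property described in the paragraph above.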

Empirical evaluations show that CODA-P outperforms state-of-the-art methods. On the ImageNet-R dataset, it surpasses DualPrompt by up to 4.5% in average final accuracy. It also yields notable gains on a dual-shift benchmark that combines class-incremental and domain-incremental shifts, suggesting robustness to the mixed distribution shifts found in practical settings. Results on CIFAR-100 and DomainNet further support its effectiveness, and CODA-P compares favorably with both rehearsal-based and rehearsal-free counterparts.

Methodologically, the key innovation is the decomposition of prompts into components that are weighted by an attention mechanism. An orthogonality constraint encourages the components to capture distinct information, reducing interference between what is learned for different tasks. This design lets the model expand its capacity as task complexity grows by adding components, rather than relying solely on longer prompts, and thereby avoids the saturation observed in existing approaches when prompt length is increased.
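The orthogonality constraint and the capacity expansion can be illustrated with a second minimal sketch. It assumes a penalty of the form ||B B^T - I|| on a matrix B whose rows are flattened components (we use the squared Frobenius norm here), and it shows one simple option, Gram-Schmidt orthogonalization, for initializing the components added for a new task so that they start orthogonal to the frozen components from earlier tasks. The helpers `ortho_penalty` and `init_new_components` are hypothetical names, not taken from the authors' code.

```python
import math


def ortho_penalty(rows):
    """Squared Frobenius norm of (B B^T - I), where B's rows are given as lists.

    Each row is one flattened prompt component (the same penalty can be applied
    to keys or attention vectors). It is zero exactly when the rows are orthonormal.
    """
    total = 0.0
    for i, r_i in enumerate(rows):
        for j, r_j in enumerate(rows):
            gram = sum(a * b for a, b in zip(r_i, r_j))
            target = 1.0 if i == j else 0.0
            total += (gram - target) ** 2
    return total


def _orthonormalize_into(vectors, basis, tol=1e-10):
    """Modified Gram-Schmidt: orthonormalize vectors against basis, extending it in place."""
    added = []
    for vec in vectors:
        v = [float(x) for x in vec]
        for b in basis:
            proj = sum(x * y for x, y in zip(v, b))  # b is unit-norm
            v = [x - proj * y for x, y in zip(v, b)]
        norm = math.sqrt(sum(x * x for x in v))
        if norm < tol:
            continue  # vec already lies in the span of basis; skip it
        v = [x / norm for x in v]
        basis.append(v)
        added.append(v)
    return added


def init_new_components(frozen, candidates):
    """Initialize components for a new task orthogonal to the frozen ones.

    frozen:     components learned on earlier tasks (kept fixed)
    candidates: arbitrary starting vectors for the new components
    May return fewer rows than candidates if some are linearly dependent.
    """
    basis = []
    _orthonormalize_into(frozen, basis)  # orthonormal basis for span(frozen)
    return _orthonormalize_into(candidates, basis)


frozen = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
new = init_new_components(frozen, [[1.0, 1.0, 1.0]])
print(new, ortho_penalty(frozen + new))  # prints [[0.0, 0.0, 1.0]] 0.0
```

In a training loop built on this sketch, the penalty would be added to the classification loss with a weighting coefficient (a hyperparameter we assume here), and, following the per-task expansion described above, only the newly added components would receive gradient updates while the earlier ones stay frozen.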

More broadly, this work suggests that modular architectures and component-based learning are effective strategies against catastrophic forgetting and worth pursuing in future continual learning research. In practice, CODA-P's approach is well suited to dynamic environments where data privacy is paramount and where models must scale to new tasks without storing past data while limiting forgetting.

In conclusion, the CODA-Prompt framework marks a significant step forward in rehearsal-free continual learning, advancing how machine learning models can maintain and expand their knowledge over time. Future research directions may include extending the attention mechanism it employs, integrating it with other transformer architectures, and benchmarking CODA-P on more varied and complex real-world datasets.