CodeKGC: A Code LLM for Generative Knowledge Graph Construction

The paper "CodeKGC: Code LLM for Generative Knowledge Graph Construction" introduces an innovative method leveraging code LLMs (Code-LLMs) for the task of generative knowledge graph construction. Unlike traditional approaches that flatten natural language into serialized text, CodeKGC exploits the structured nature of code to improve the encoding and extraction of entities and relationships within knowledge graphs (KGs).

Overview of Methodology

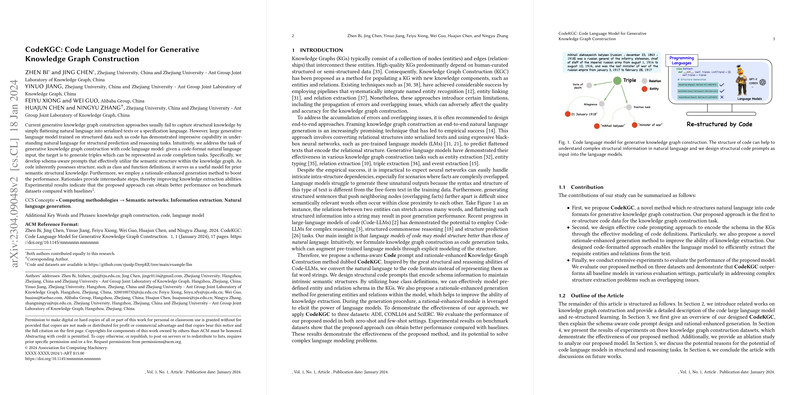

The proposed approach formulates knowledge graph construction as a code generation task. By transforming natural language inputs into a code format, the structure inherent in code is used to model semantic relationships effectively. This method involves:

- Schema-aware Prompt Construction: The paper presents a re-structuring of language using Python's class definitions to capture intrinsic semantic structures of KGs. Here, base classes are defined to represent entities and relations, thereby maintaining explicit schema information.

- Rationale-enhanced Generation: Inspired by the effectiveness of intermediate reasoning steps, the paper introduces an optional rationale-enhanced generation method. This technique enhances the ability of Code-LLMs to deduce complex relationships by providing step-by-step reasoning chains within the code prompts.

Experimental Results

The effectiveness of CodeKGC is demonstrated through comprehensive evaluations on datasets such as ADE, CoNLL04, and SciERC. The results indicate significant improvements in entity and relation extraction tasks. CodeKGC consistently outperforms baseline models, particularly in complex scenarios involving overlapping and intricate structural patterns.

Key findings include:

- Superior performance in relation strict micro F1 scores in both zero-shot and few-shot settings, especially on datasets with multiple overlapping entities and relations.

- Effective handling of hard examples, suggesting stronger capabilities of Code-LLMs in understanding structured semantics compared to traditional text-based prompting techniques.

Contributions and Implications

The paper's contributions are multi-fold:

- It introduces CodeKGC as the first approach to re-structure language data into code formats specifically for the knowledge graph construction task.

- It demonstrates the advantages of schema-aware prompts and rationale-enhanced methods in improving KG extraction performance.

- By evidencing better handling of complex dependency patterns, the paper suggests that Code-LLMs are adept at addressing nuanced structural prediction challenges.

In practical terms, the research opens avenues for integrating Code-LLMs into a variety of structured data tasks beyond knowledge graphs, such as semantic parsing, data-to-text generation, and more. Theoretically, it highlights the potential of leveraging code languages for enhancing the structural comprehension capabilities of LLMs.

Future Directions

The paper calls for further exploration in several areas, including:

- The integration of advanced Code-LLMs to potentially parallel the performance offered by text LLMs enhanced with instruction-tuned data.

- Extending the application of CodeKGC to additional knowledge-intensive tasks, ensuring its broader adaptability.

- Refining code prompt strategies through automated techniques to further capture and utilize nuanced structural representations.

Overall, the research establishes a compelling case for employing code-based paradigms in structured knowledge tasks, reflecting a promising intersection of programming languages with natural language processing advancements.