High-Throughput Mixture of Experts (MoE) Inference on Memory-constrained GPUs

The paper "High-Throughput MoE Inference on Memory-constrained GPUs" addresses the challenge of deploying large-scale Mixture of Experts (MoE) models when GPU memory is limited. In LLMs, the MoE paradigm improves computational efficiency by activating only a subset of model parameters for each token during inference. Despite this benefit, MoE models are hard to deploy because all experts must remain available, so their memory requirements can vastly exceed those of dense models with comparable per-token compute.
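The gap between compute cost and memory cost can be made concrete with a little arithmetic. The sketch below is illustrative only: the function names and the configuration values are assumptions chosen for the example, not figures from the paper, and the model is simplified to per-layer expert parameters plus per-layer shared parameters.

```python
def moe_param_counts(n_layers, n_experts, top_k, expert_params, shared_params):
    """Return (total, active_per_token) parameter counts for a simplified MoE.

    expert_params: parameters in one expert feed-forward block (per layer)
    shared_params: per-layer parameters every token uses (attention, router, norms)
    """
    total = n_layers * (n_experts * expert_params + shared_params)
    # Each token is routed to only top_k experts per layer.
    active = n_layers * (top_k * expert_params + shared_params)
    return total, active


def weight_bytes(n_params, bytes_per_param=2):
    """Memory needed to hold the weights (default: 2 bytes per parameter, e.g. fp16)."""
    return n_params * bytes_per_param


# Hypothetical configuration, chosen only for illustration.
total, active = moe_param_counts(
    n_layers=32,
    n_experts=8,
    top_k=2,
    expert_params=176_000_000,
    shared_params=42_000_000,
)
print(f"total params:  {total / 1e9:.1f} B")
print(f"active params: {active / 1e9:.1f} B")
print(f"fp16 weights:  {weight_bytes(total) / 1e9:.1f} GB")
```

Under these assumed values, each token touches roughly a quarter of the parameters, yet the full set of weights must still be stored somewhere reachable, which is why a 16 GB GPU such as the T4 cannot hold the model on its own.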

Overview and Contributions

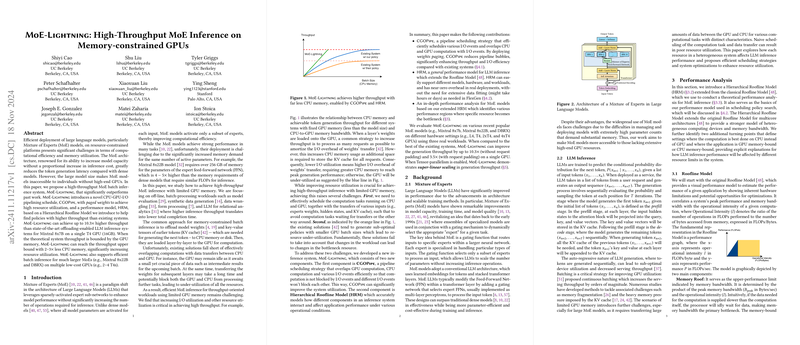

The authors introduce a system built to achieve high-throughput inference for MoE models even when GPU memory is the limiting resource. The work presents several key innovations and insights:

- Design of a Pipeline Scheduling Strategy: This strategy maximizes resource utilization by interleaving CPU, GPU, and I/O operations. By overlapping computation with data transfer, the system maintains high throughput without relying on high-end GPUs.

- Hierarchical Roofline Model (HRM): The researchers extend the classical Roofline Model into the HRM, which is used to analyze and predict the system's performance across various hardware configurations. The model supports the system's need to adapt its execution policy to the capacity and constraints of the available hardware.

- Weights Paging Mechanism: The system implements a weights paging mechanism that optimizes the transfer of model weights to the GPU. This mechanism reduces I/O bottlenecks and allows for efficient computation despite limited GPU memory.

- Enhanced Resource Utilization for MoE Models: The proposed system demonstrates significantly improved throughput for MoE inference tasks compared to existing methods. In particular, it achieves higher throughput than contemporary systems for certain MoE models, such as Mixtral 8x7B on a T4 GPU.

- Inference and Scalability: The system supports efficient batch inference and is capable of scaling across multiple low-cost GPUs, thereby further optimizing resource use and inference time.
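The pipeline scheduling and roofline ideas in the list above can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, parameters, and numbers are hypothetical, the roofline is a simplified reading that takes the minimum over the compute peak, the GPU memory bandwidth bound, and the CPU-to-GPU link bound, and the pipeline model assumes idealized, perfect overlap between stages.

```python
def hierarchical_roofline(intensity_gpu, intensity_link, peak_flops, gpu_mem_bw, link_bw):
    """Attainable FLOP/s for a GPU kernel whose data partly resides in CPU memory.

    intensity_gpu:  FLOPs performed per byte read from GPU memory
    intensity_link: FLOPs performed per byte moved over the CPU-to-GPU link
    peak_flops:     peak compute rate of the GPU (FLOP/s)
    gpu_mem_bw:     GPU memory bandwidth (bytes/s)
    link_bw:        CPU-to-GPU transfer bandwidth (bytes/s)
    """
    return min(
        peak_flops,                 # compute roof
        gpu_mem_bw * intensity_gpu, # GPU memory roof
        link_bw * intensity_link,   # CPU-to-GPU transfer roof
    )


def step_time(cpu_s, gpu_s, io_s, overlapped):
    """Time for one pipeline step given per-resource durations in seconds.

    With perfect overlap, the slowest resource sets the pace; without overlap,
    the three stages run back to back.
    """
    if overlapped:
        return max(cpu_s, gpu_s, io_s)
    return cpu_s + gpu_s + io_s


# Hypothetical, unitless numbers chosen only to show which roof binds.
attainable = hierarchical_roofline(
    intensity_gpu=20.0, intensity_link=50.0,
    peak_flops=100.0, gpu_mem_bw=10.0, link_bw=1.0,
)
print("attainable performance:", attainable)
print("sequential step time:", step_time(1.0, 2.0, 3.0, overlapped=False))
print("overlapped step time:", step_time(1.0, 2.0, 3.0, overlapped=True))
```

In this toy setting the transfer roof is the binding constraint, which mirrors the situation the summary describes: when weights live outside GPU memory, raising the work done per transferred byte (for example through larger batches) and hiding transfers behind computation are the main levers for throughput.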

Experimental Insights and Results

The system was evaluated on several popular MoE models, including Mixtral 8x7B, Mixtral 8x22B, and DBRX, across multiple GPU configurations. The results show that the system not only outperforms existing solutions such as FlexGen in throughput, but also achieves better resource utilization while using less CPU memory. When scaled across multiple GPUs with tensor parallelism, the system demonstrates super-linear throughput scaling.
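"Super-linear" here means that throughput grows faster than the number of GPUs. A small helper makes the definition explicit; the function names and the numbers in the usage are hypothetical and are not measurements from the paper.

```python
def scaling_efficiency(base_throughput, base_gpus, scaled_throughput, scaled_gpus):
    """Ratio of observed speedup to the increase in GPU count.

    1.0 means linear scaling, above 1.0 is super-linear, below 1.0 is sub-linear.
    """
    speedup = scaled_throughput / base_throughput
    gpu_ratio = scaled_gpus / base_gpus
    return speedup / gpu_ratio


def is_super_linear(base_throughput, base_gpus, scaled_throughput, scaled_gpus):
    """True when throughput grows faster than the GPU count."""
    return scaling_efficiency(
        base_throughput, base_gpus, scaled_throughput, scaled_gpus
    ) > 1.0


# Hypothetical throughputs (tokens/s), for illustration only.
print(scaling_efficiency(100.0, 2, 250.0, 4))
print(is_super_linear(100.0, 2, 250.0, 4))
```

One plausible reason such scaling can occur in a memory-constrained setting is that adding GPUs increases aggregate GPU memory as well as compute, so less data has to be transferred per unit of work.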

Implications and Future Directions

The paper's contributions are a significant step toward making efficient MoE inference accessible on devices with limited GPU capacity. The Hierarchical Roofline Model and the new scheduling methodology provide a framework that can be extended further, with potential applications in more general hardware environments.

Future research could focus on incorporating other hardware accelerators, optimizing for disk-based offloading when CPU memory is also constrained, and adapting the performance model to newer algorithmic innovations such as sparse attention mechanisms. Scaling the system across distributed computing resources and extending it to other neural network architectures are further directions for development.

Overall, this research supports the practical deployment of resource-efficient large-scale LLMs, with advances in both performance modeling and system implementation.