This paper introduces BLIP-2, a vision-language pre-training (VLP) framework that achieves efficiency by building on readily available, pre-trained unimodal models. The core challenge it addresses is the prohibitive computational cost of traditional VLP methods, which train large vision and language models end to end.

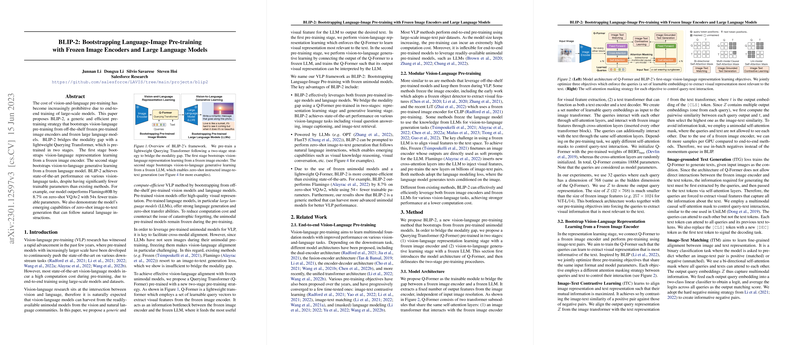

BLIP-2 proposes using frozen pre-trained image encoders and frozen LLMs, significantly reducing the number of trainable parameters and pre-training time. The key innovation is the Querying Transformer (Q-Former), a lightweight module designed to bridge the modality gap between the frozen image encoder and the frozen LLM.

The Q-Former employs a set of learnable query embeddings to interact with the frozen image encoder via cross-attention, extracting visual features relevant to the language modality. It acts as an information bottleneck, feeding concise and relevant visual information to the LLM.
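The bottleneck behaviour can be illustrated with a minimal sketch of queries cross-attending to image features. This is a deliberate simplification, not the Q-Former itself: it uses a single attention head with no learned key/value projections, assumes queries and image features share one width, and uses toy sizes. The names `cross_attend`, `queries`, and `image_feats` are hypothetical.

```python
import math
import random


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, image_feats):
    """Single-head cross-attention without learned projections (illustrative only).

    queries:     num_queries x dim  (learnable parameters in the real model)
    image_feats: num_patches x dim  (output of the frozen image encoder)
    returns:     num_queries x dim
    """
    dim = len(queries[0])
    out = []
    for q in queries:
        # Scaled dot-product score between this query and every image patch.
        scores = [sum(a * b for a, b in zip(q, patch)) / math.sqrt(dim)
                  for patch in image_feats]
        weights = softmax(scores)
        # Weighted average of patch features: one output vector per query.
        mixed = [sum(w * patch[d] for w, patch in zip(weights, image_feats))
                 for d in range(dim)]
        out.append(mixed)
    return out


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
queries = random_matrix(4, 8, rng)        # toy: 4 queries of width 8
few_patches = random_matrix(10, 8, rng)   # a "small" image: 10 patch features
many_patches = random_matrix(50, 8, rng)  # a "large" image: 50 patch features

out_few = cross_attend(queries, few_patches)
out_many = cross_attend(queries, many_patches)
```

Both `out_few` and `out_many` have one row per query, so the amount of visual information handed downstream is fixed by the number of queries rather than by the number of image patches.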

BLIP-2's pre-training strategy for the Q-Former consists of two stages:

- Vision-Language Representation Learning: The Q-Former is connected to the frozen image encoder and trained on image-text pairs, so that the queries learn to extract the visual features most informative for the corresponding text. Three objectives are optimized jointly:

  - Image-Text Contrastive Learning (ITC): Aligns image and text representations by maximizing their mutual information, using a contrastive loss that scores matched image-text pairs above mismatched ones.

  - Image-grounded Text Generation (ITG): Trains the Q-Former to output visual representations from which the associated text can be generated. This forces the queries to capture text-relevant visual information.

  - Image-Text Matching (ITM): A binary classification task that predicts whether an image-text pair matches, which teaches fine-grained alignment.

- Vision-to-Language Generative Learning: The Q-Former, still attached to the frozen image encoder, is connected to a frozen LLM. A linear layer projects the Q-Former's output query embeddings to the LLM's input dimension. The projected embeddings act as soft visual prompts prepended to the text input, conditioning the LLM's generation. This stage trains the Q-Former and the projection layer so that the frozen LLM can interpret their visual representations, using a standard language modeling objective (a causal language modeling loss for decoder-only LLMs such as OPT, a prefix language modeling loss for encoder-decoder LLMs such as FlanT5).
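The ITC objective from the first stage can be sketched as follows. The sketch assumes that the image-text similarity is the highest cosine similarity between any query output and the text embedding, and that negatives come from the other pairs in the batch. The temperature value of 0.07 is an assumed common default, not a figure from this summary, and all function names are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def image_text_similarity(query_outputs, text_emb):
    """Highest cosine similarity between any query output and the text embedding."""
    t = normalize(text_emb)
    return max(dot(normalize(q), t) for q in query_outputs)


def cross_entropy(logits, target):
    """Negative log-softmax of the target entry, computed stably."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[target]


def itc_loss(batch_queries, batch_texts, temperature=0.07):
    """Symmetric contrastive loss with in-batch negatives.

    batch_queries[i] holds the query outputs for image i;
    batch_texts[i] is the embedding of the text paired with image i.
    The temperature is an assumed value for illustration.
    """
    n = len(batch_texts)
    sim = [[image_text_similarity(batch_queries[i], batch_texts[j]) / temperature
            for j in range(n)] for i in range(n)]
    # Image-to-text: each image should pick out its own text among all texts.
    i2t = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    # Text-to-image: each text should pick out its own image among all images.
    t2i = sum(cross_entropy([sim[i][j] for i in range(n)], j) for j in range(n)) / n
    return (i2t + t2i) / 2


# Toy batch: two images with two query outputs each, and two text embeddings.
batch_queries = [
    [[1.0, 0.0], [0.5, 0.5]],
    [[0.0, 1.0], [0.5, 0.5]],
]
matched_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]

loss_matched = itc_loss(batch_queries, matched_texts)
loss_swapped = itc_loss(batch_queries, swapped_texts)
```

With correctly paired texts the loss is lower than with the texts swapped, which is the behaviour a contrastive objective is meant to reward.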

Key Contributions and Findings:

- Efficiency: BLIP-2 drastically reduces the number of trainable parameters compared to end-to-end methods (e.g., 54x fewer than Flamingo80B while outperforming it on zero-shot VQAv2). Pre-training is significantly faster.

- Performance: Achieves state-of-the-art results on various vision-language tasks, including zero-shot VQA, image captioning (especially on out-of-domain datasets like NoCaps), and image-text retrieval.

- Emerging Capabilities: Enables zero-shot instructed image-to-text generation by prompting the frozen LLM with both the visual prompt (from Q-Former) and a text instruction. This allows for tasks like visual conversation, visual knowledge reasoning, and personalized captioning.

- Modularity and Scalability: Demonstrates that performance improves when using stronger frozen image encoders (e.g., ViT-g vs. ViT-L) or larger/better LLMs (e.g., FlanT5 vs. OPT, larger variants within families), validating its ability to leverage future advances in unimodal models.

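- Importance of Representation Learning: Ablation studies show that the first pre-training stage is crucial. Without it, zero-shot VQA performance is substantially lower for both LLM families, and the OPT-based model suffers from catastrophic forgetting, with performance degrading as second-stage training proceeds.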
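The soft visual prompt used in the second pre-training stage and in instructed generation can be sketched as a projection followed by concatenation. This is a minimal illustration under stated assumptions: the widths are toy values, the weights are fixed by hand rather than learned, and `linear` and `build_llm_inputs` are hypothetical names, not part of any library.

```python
def linear(x, weight, bias):
    """Compute y = W x + b, where weight has shape out_dim x in_dim."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def build_llm_inputs(query_outputs, weight, bias, text_embeddings):
    """Project each query output to the LLM width and prepend it to the text.

    query_outputs:   num_queries x qformer_dim
    text_embeddings: num_tokens  x llm_dim (from the frozen LLM's embedding layer)
    returns:         (num_queries + num_tokens) x llm_dim
    """
    visual_prompt = [linear(q, weight, bias) for q in query_outputs]
    return visual_prompt + text_embeddings


# Toy sizes: Q-Former width 3, LLM width 4, 2 queries, 3 instruction tokens.
weight = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
]
bias = [0.0, 0.0, 0.0, 0.0]
query_outputs = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
instruction_embeddings = [[0.1, 0.2, 0.3, 0.4] for _ in range(3)]

llm_inputs = build_llm_inputs(query_outputs, weight, bias, instruction_embeddings)
```

The frozen LLM then consumes `llm_inputs` like any other embedded sequence. Only the projection and the module that produced `query_outputs` would receive gradients in this arrangement.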

Implementation Details:

- The Q-Former is initialized from BERT-base weights, with its cross-attention layers randomly initialized. It has 188M parameters in total and uses 32 learnable queries.

- Experiments utilize ViT-L/14 and ViT-g/14 as frozen image encoders and OPT and FlanT5 families as frozen LLMs.

- Pre-training uses large-scale image-text datasets (COCO, Visual Genome, CC3M, CC12M, SBU, and a subset of LAION400M), with the CapFilt method used to generate and filter synthetic captions for web images.
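A short calculation gives a feel for how much the queries compress the visual input. It assumes figures that are not stated in this summary: 224x224 input images, a ViT-L/14 encoder of width 1024 with one extra class token, and query outputs of width 768. Treat these values as assumptions for illustration.

```python
# Assumed configuration (not stated in the summary above).
image_size = 224      # input resolution in pixels
patch_size = 14       # ViT-L/14 patch size
encoder_width = 1024  # ViT-L hidden width
num_queries = 32      # number of learnable queries
query_width = 768     # width of each query output

# One token per patch, plus one class token.
num_tokens = (image_size // patch_size) ** 2 + 1

encoder_values = num_tokens * encoder_width    # size of the frozen image features
bottleneck_values = num_queries * query_width  # size of the query outputs
ratio = encoder_values / bottleneck_values

print(f"image features: {num_tokens} x {encoder_width} = {encoder_values}")
print(f"query outputs:  {num_queries} x {query_width} = {bottleneck_values}")
print(f"compression:    {ratio:.1f}x")
```

Under these assumptions the query outputs are roughly a tenth the size of the image features, which is the sense in which the Q-Former acts as an information bottleneck.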

Limitations:

- The model does not show improved VQA performance when given in-context few-shot examples. The authors attribute this to the pre-training data, in which each sample contains only a single image-text pair.

- It inherits potential risks from the frozen LLMs, such as generating inaccurate, biased, or harmful content.

In conclusion, BLIP-2 presents an efficient and effective method for vision-language pre-training by bootstrapping from frozen unimodal models via a lightweight Q-Former trained in two stages, achieving strong performance and enabling novel zero-shot instructed generation capabilities.