Multimodal Few-Shot Learning with Frozen LLMs

The paper presents "Frozen," a method that extends large pre-trained language models (LMs) to multimodal few-shot learning. Auto-regressive transformer LMs can learn new tasks from only a few examples (few-shot learning), but traditionally only in purely textual settings. The research extends this capability to a multimodal setup involving both vision and language, without updating the LM's weights.

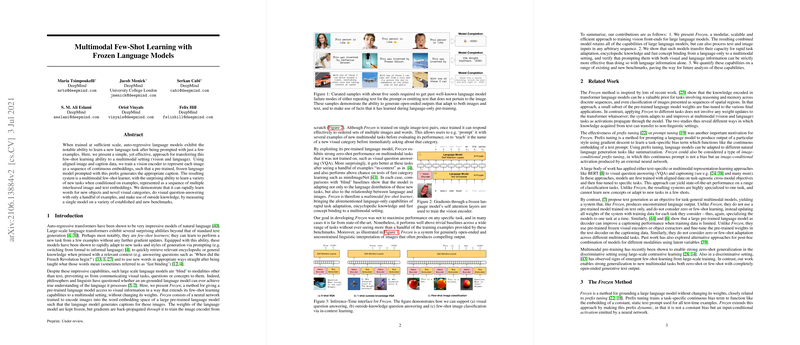

The approach uses aligned image-caption data to train a vision encoder that maps each image to a sequence of continuous embeddings. When these embeddings are given to the frozen LM as a prefix, the LM generates an appropriate caption. The same mechanism also lets the model pick up various new tasks quickly from prompts that interleave a few image and text examples. Because the pre-trained LM's parameters are never altered, it keeps its existing capabilities while gaining the ability to process images.
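The prefix mechanism can be illustrated with a small NumPy sketch. It assumes the vision encoder's pooled output is linearly projected and reshaped into a fixed number of prefix embeddings with the LM's embedding width, and that these are prepended to the embeddings of the text tokens. The function names `visual_prefix` and `build_input`, the toy sizes and the random stand-in features are hypothetical, not the paper's code. In this sketch only `proj` would be trained. The embedding table stands in for the frozen LM and is only read, never updated.

```python
import numpy as np

def visual_prefix(image_features: np.ndarray, proj: np.ndarray, n_tokens: int) -> np.ndarray:
    """Map a pooled image feature vector to n_tokens embeddings of the LM's width.

    image_features: shape (feat_dim,), standing in for a vision backbone's pooled output.
    proj: trainable matrix of shape (feat_dim, n_tokens * d_model) (hypothetical layout).
    Returns an array of shape (n_tokens, d_model).
    """
    flat = image_features @ proj           # (n_tokens * d_model,)
    return flat.reshape(n_tokens, -1)      # (n_tokens, d_model)

def build_input(prefix: np.ndarray, token_ids: list, frozen_embeddings: np.ndarray) -> np.ndarray:
    """Prepend the visual prefix to the frozen LM's embeddings of the text tokens."""
    text = frozen_embeddings[token_ids]            # table lookup only; the table is never modified
    return np.concatenate([prefix, text], axis=0)  # (n_tokens + len(token_ids), d_model)

# Toy, hypothetical sizes: 16-dim image features, 8-dim LM embeddings, 100-token vocab, 2 prefix tokens.
rng = np.random.default_rng(0)
feat_dim, d_model, vocab, n_tokens = 16, 8, 100, 2
frozen_embeddings = rng.normal(size=(vocab, d_model))         # stands in for the frozen LM's embedding table
proj = rng.normal(size=(feat_dim, n_tokens * d_model)) * 0.1  # the only parameters training would update here
image_features = rng.normal(size=feat_dim)                    # stands in for the vision backbone output

prefix = visual_prefix(image_features, proj, n_tokens)
seq = build_input(prefix, [5, 17, 42], frozen_embeddings)
print(seq.shape)  # (5, 8)
```

During caption training, the LM's loss on the caption tokens would be backpropagated through the frozen LM into `proj` and the vision encoder. The LM's own weights, including its embedding table, stay fixed.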

Key Contributions and Findings

- Integration of Vision and Language:
  - The method encodes images into the word embedding space of a pre-trained LM. Only the vision encoder is trained, which is enough for the LM to caption images while its own weights stay unchanged.

- Few-Shot Learning in a Multimodal Context:
  - The model exhibits few-shot learning across multiple tasks. It can rapidly learn words for new objects and novel visual categories, and it can answer visual questions (VQA) from only a handful of examples. The experiments demonstrate zero-shot and few-shot adaptation on benchmarks such as VQAv2 and miniImageNet.

- Effectiveness Across Modalities:
  - Prompting the model with both visual and linguistic information yields better results than either alone. This cross-modal transfer indicates that the model can rapidly relate new visual information to the knowledge already held by its language model.

- Transfer of Encyclopedic Knowledge:
  - By drawing on the encyclopedic knowledge acquired during LM pre-training, the model can answer questions that require outside knowledge, as demonstrated on datasets such as OKVQA.

- Limitations and Comparisons:
  - Although it does not achieve state-of-the-art results on every task, the model performs substantially above trivial baselines across tasks. This indicates robust generalization without extensive task-specific training.
  - The paper compares models trained with different LM fine-tuning strategies, underscoring the advantage of keeping the LM frozen when multimodal training data is limited.
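The multimodal few-shot setting described above amounts to prompt construction. Support images are interleaved with short captions that bind each image to a made-up word, and a query image follows that the model must name. The Python sketch below illustrates this loosely in the spirit of the Open-Ended miniImageNet setup. The `Image` placeholder, `build_episode`, the nonsense-word list and the caption template are all hypothetical choices, not the paper's exact protocol. In a real system, each image would be encoded into prefix embeddings and interleaved with the text embeddings before being passed to the frozen LM.

```python
import random
from dataclasses import dataclass

# Hypothetical pool of made-up words the LM cannot already associate with any category.
NONSENSE_WORDS = ["dax", "blicket", "wug", "zorp", "fep"]

@dataclass
class Image:
    """Placeholder for an image; in practice this would be pixels fed to the vision encoder."""
    category: str
    ident: int

def build_episode(images_by_category, n_ways, n_shots, rng):
    """Build an interleaved image/text few-shot prompt with freshly assigned nonsense labels.

    Returns (prompt, answer): prompt is a list of Image and str segments ending with a
    query image and an unfinished caption; answer is the word the model should produce.
    """
    categories = rng.sample(sorted(images_by_category), n_ways)
    words = rng.sample(NONSENSE_WORDS, n_ways)
    label = dict(zip(categories, words))  # a new word for each category in this episode

    # n_shots labelled support examples per category, shuffled together.
    support = []
    for cat in categories:
        for img in rng.sample(images_by_category[cat], n_shots):
            support.append((img, f"This is a {label[cat]}."))
    rng.shuffle(support)

    # The query image comes from one of the episode's categories but is not a support image.
    query_cat = rng.choice(categories)
    used = {img.ident for img, _ in support}
    query_img = rng.choice([im for im in images_by_category[query_cat] if im.ident not in used])

    prompt = []
    for img, caption in support:
        prompt.extend([img, caption])
    prompt.extend([query_img, "This is a"])  # the LM is expected to complete this caption
    return prompt, label[query_cat]

# Usage: a toy pool of 3 categories with 5 uniquely numbered images each.
rng = random.Random(0)
pool = {c: [Image(c, 10 * k + i) for i in range(5)] for k, c in enumerate(["cat", "dog", "car"])}
prompt, answer = build_episode(pool, n_ways=2, n_shots=1, rng=rng)
for seg in prompt:
    print(seg if isinstance(seg, str) else f"<image: {seg.category}>")
print("expected completion:", answer)
```

Assigning fresh nonsense words in every episode means the LM cannot rely on category names it saw during pre-training. The correct completion can only come from binding the word to the image within the prompt.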

Implications and Future Directions

The implications of this research span both practical applications and theoretical explorations in AI. Practically, it offers a modular framework that lets LMs tackle multimodal tasks without comprehensive retraining, which could benefit applications in rapidly changing environments or with limited data. Theoretically, it demonstrates that LMs' rapid adaptation and few-shot learning capabilities can be extended to multimodal settings.

Future research could explore more elaborate architectures or more efficient ways of mixing modalities. The approach also invites training on larger and more diverse datasets, which could yield stronger multimodal performance. The proposed benchmarks, such as Open-Ended miniImageNet and Fast-VQA, can serve as testing grounds for subsequent advances in multimodal systems.

In conclusion, "Frozen" marks a significant step towards flexible and efficient multimodal AI systems, laying the groundwork for further innovations in the seamless integration of vision and language understanding.