Overview of "Extreme Multi-Task Scaling for LLM Instruction Meta Tuning"

The paper presents a comprehensive study of scaling instruction meta-tuning for large language models (LLMs) across a diverse set of benchmarks. It examines how training a model on many tasks at once, each described by a natural-language instruction, affects its ability to generalize to new, unseen tasks. To measure this, the authors build an experimental framework that evaluates instruction-tuned LLMs on multiple benchmark datasets.

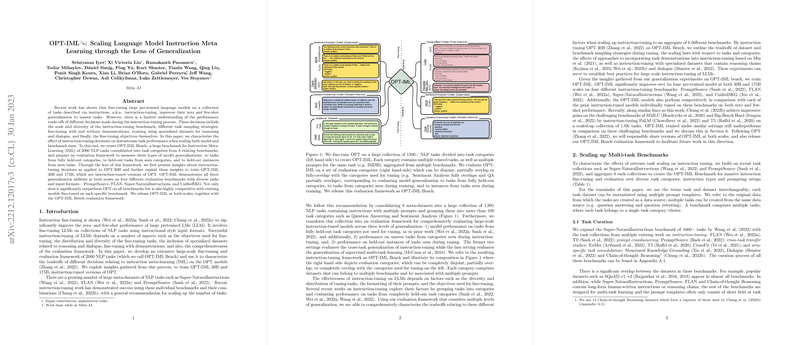

The methodology consolidates a diverse set of NLP tasks from existing benchmarks, including Super-NaturalInstructions, PromptSource, and ExMix. This multi-task setup is what allows the authors to study how instruction tuning affects model performance, particularly on tasks the model has not encountered during tuning.
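As a minimal sketch of such a setup, assuming each task carries a category label and the training mixture samples tasks in proportion to their capped size, one could hold out whole categories for evaluation so that the evaluation tasks are genuinely unseen. The `Task` class, the category labels, the cap value, and the task sizes are all hypothetical; examples-proportional mixing with a per-task cap is a common choice in multi-task tuning, not necessarily the scheme the authors use.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str          # hypothetical task identifier
    benchmark: str     # source benchmark, e.g. "PromptSource"
    category: str      # hypothetical category label used for held-out splits
    num_examples: int  # number of training examples available for the task


def split_by_category(tasks, held_out_categories):
    """Hold out entire categories so evaluation tasks are never seen during tuning."""
    train = [t for t in tasks if t.category not in held_out_categories]
    evaluation = [t for t in tasks if t.category in held_out_categories]
    return train, evaluation


def mixing_weights(tasks, cap):
    """Examples-proportional mixing with a per-task cap (an assumed scheme).

    Capping keeps very large tasks from dominating the mixture.
    """
    sizes = {t.name: min(t.num_examples, cap) for t in tasks}
    total = sum(sizes.values())
    return {name: size / total for name, size in sizes.items()}


def sample_batch(tasks, cap, batch_size, seed=0):
    """Draw the source task for each example slot in a batch."""
    rng = random.Random(seed)
    weights = mixing_weights(tasks, cap)
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=batch_size)


# Illustrative usage with made-up tasks and sizes.
tasks = [
    Task("task_a", "PromptSource", "sentiment", 50_000),
    Task("task_b", "Super-NaturalInstructions", "qa", 5_000),
    Task("task_c", "ExMix", "nli", 2_000),
]
train, evaluation = split_by_category(tasks, {"nli"})
print(mixing_weights(train, cap=10_000))  # task_a ~0.667, task_b ~0.333
```

Holding out by category rather than by individual task is a deliberate choice here: it prevents near-duplicate tasks of the same type from leaking into training and inflating the "unseen task" scores.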

Key Experimental Findings

The paper's experimental results span several model scales, notably 1.3B-, 30B-, and 175B-parameter models. The authors report improvements in task performance from applying instruction meta-tuning, indicating that instruction tuning is beneficial across model scales. Specifically, they observe consistent gains in both zero-shot and few-shot settings, with the 175B-parameter model showing the largest improvement across a variety of NLP tasks.
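Zero-shot and few-shot evaluation differ only in how many solved demonstrations precede the query. A minimal sketch of prompt construction, assuming a hypothetical `Input:`/`Output:` template (the paper's actual prompt format may differ):

```python
from typing import Sequence, Tuple


def build_prompt(instruction: str,
                 demos: Sequence[Tuple[str, str]],
                 query: str) -> str:
    """Build a zero-shot prompt (empty demos) or a k-shot prompt (k demo pairs).

    The "Input:/Output:" template is a hypothetical format for illustration.
    """
    parts = [instruction.strip()]
    # Each demonstration is a solved (input, output) pair shown before the query.
    for x, y in demos:
        parts.append(f"Input: {x}\nOutput: {y}")
    # The query ends with an open "Output:" for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


zero_shot = build_prompt("Classify the sentiment.", [], "Great movie!")
one_shot = build_prompt("Classify the sentiment.",
                        [("Boring plot.", "negative")], "Great movie!")
print(zero_shot)
print(one_shot)
```

Keeping the instruction and template fixed while varying only the number of demonstrations makes zero-shot and few-shot scores directly comparable.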

Key findings include:

- Strong Performance: Instruction-tuned models significantly outperform their non-tuned counterparts across a range of standard NLP tasks, as shown in the task benchmark results.

- Scaling Effects: Larger models tend to benefit more from instruction tuning, likely because their greater capacity lets them generalize more readily.

- Impact on Reasoning Tasks: Incorporating reasoning datasets as part of the tuning process led to measurable improvements, suggesting that instruction tuning can also enhance the model's logical reasoning capabilities.

Implications and Future Directions

Instruction meta-tuning has substantial practical implications. Models that generalize better can adapt to a variety of real-world scenarios with minimal retraining, which is crucial for deploying AI solutions in industries where task specifications change frequently.

On the theoretical side, the work deepens understanding of how multi-task learning can be scaled to large datasets and diverse task types. It also sets a precedent for further work on optimizing the instruction-tuning process, for example through new optimization techniques or data augmentation strategies.

The authors speculate that further research could explore:

- Cross-Linguistic Application: Adapting instruction tuning for multilingual models might uncover paths for developing more universally applicable LLMs.

- Fine-Grained Task Clustering: Refining how tasks are clustered by type, so that instruction tuning can be tailored to specific subtasks, could further improve model performance.

- Efficiency Improvements: Investigating ways to reduce computational overhead during model scaling while maintaining high performance.

In summary, this paper makes a significant contribution to understanding how to tune large-scale LLMs with instruction-based methods, showing improvements in both generalization and task-specific performance metrics. It opens avenues for future research on scalable, multi-task NLP systems that adapt to a broad range of tasks and applications.