Instruction Pre-Training: LLMs as Supervised Multitask Learners

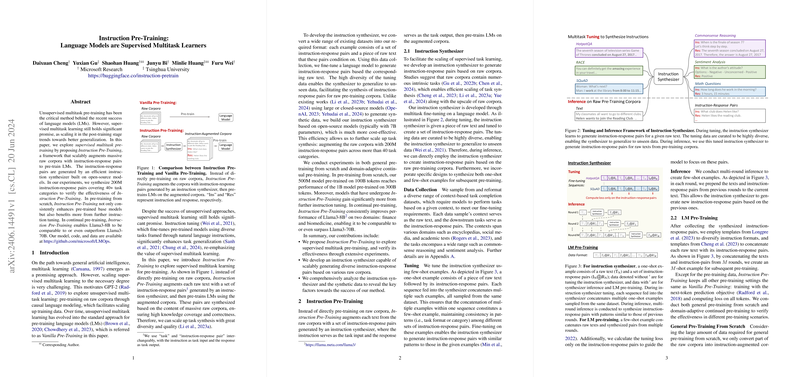

In the paper titled "Instruction Pre-Training: Language Models are Supervised Multitask Learners," Cheng et al. propose Instruction Pre-Training, a framework that augments raw corpora with instruction-response pairs so that language models (LMs) are pre-trained with supervised multitask signals. This contrasts with conventional unsupervised pre-training on raw text alone, and the authors report improved generalization as a result.

The authors integrate supervised multitask learning directly into the pre-training phase by augmenting the training data with synthetic instruction-response pairs. These pairs are generated by an efficient instruction synthesizer built on open-source models. Through extensive experiments, they show that the method improves models pre-trained from scratch and also benefits continual pre-training on domain-specific corpora.

Instruction Pre-Training Framework

The Instruction Pre-Training framework comprises two major components:

- Instruction Synthesizer: A model fine-tuned to convert raw corpora into instruction-response pairs. It is trained on a broad range of existing datasets that have been converted into examples pairing a piece of text with instruction-response pairs; once trained, it generates such pairs for new raw text, and these pairs are used to augment that text. Training on many datasets is intended to make the generated pairs cover a wide range of task types with high quality and diversity.

- Augmented Data for LM Pre-Training: Instead of pre-training directly on raw text, the LM is pre-trained on the augmented corpus. The raw texts are interspersed with synthesized instruction-response pairs, so the model learns from a wide variety of tasks during pre-training.
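The augmentation step described in the second component can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the `InstructionPair` container, the `build_augmented_example` function, and the "Instruction:" / "Response:" template are all assumptions introduced here, and the actual templates used in the paper may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class InstructionPair:
    """One synthesized task: an instruction and its response (hypothetical container)."""
    instruction: str
    response: str


def build_augmented_example(raw_text: str, pairs: List[InstructionPair]) -> str:
    """Append instruction-response pairs to the raw text they were derived from.

    The "Instruction:" / "Response:" template is an assumption for illustration.
    """
    parts = [raw_text.strip()]
    for pair in pairs:
        parts.append(
            f"Instruction: {pair.instruction.strip()}\n"
            f"Response: {pair.response.strip()}"
        )
    # Blank lines separate the raw text and each pair in the final training string.
    return "\n\n".join(parts)


# Toy usage: one raw passage followed by one synthesized pair.
example = build_augmented_example(
    "Aspirin inhibits the enzyme cyclooxygenase.",
    [InstructionPair("Which enzyme does aspirin inhibit?", "Cyclooxygenase.")],
)
print(example)
```

The resulting string would be tokenized and fed to the usual next-token prediction objective, so the training loop itself does not need to change; only the data does.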

Methodology and Implementation

The methodology is validated through various experimental setups:

- General Pre-Training from Scratch: The authors pre-train models with 500M and 1.3B parameters on a subset of the RefinedWeb dataset and compare them with baseline models trained with vanilla pre-training on raw text.

- Domain-Adaptive Continual Pre-Training: The framework is further evaluated by continually pre-training Llama3-8B on corpora from the biomedicine and finance domains, demonstrating marked improvements in domain-specific tasks.

The instruction synthesizer is trained on a highly diverse collection of datasets, which allows it to generate instruction-response pairs for raw texts it has not seen before. The authors tune it so that it generalizes across different types of data. They report that the generated pairs are accurate and relevant to their source context, which is what makes them useful as multitask supervision during pre-training.
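How a synthesizer might be applied to unseen raw text can be sketched as follows. Everything here is a hedged assumption rather than the paper's interface: `generate` stands in for any text-generation callable (for example a wrapper around a fine-tuned open-source model), and the line-based "Q:" / "A:" output format is a hypothetical convention chosen to keep parsing simple.

```python
from typing import Callable, List, Tuple


def parse_pairs(generated: str) -> List[Tuple[str, str]]:
    """Parse single-line 'Q: ...' / 'A: ...' output into (instruction, response) tuples.

    Lines that do not fit the assumed format are ignored, as are answers
    that have no preceding question.
    """
    pairs = []
    question = None
    for line in generated.splitlines():
        line = line.strip()
        if line.startswith("Q:"):
            question = line[2:].strip()
        elif line.startswith("A:") and question:
            answer = line[2:].strip()
            if answer:
                pairs.append((question, answer))
            question = None
    return pairs


def synthesize(raw_text: str, generate: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Ask the (hypothetical) synthesizer for pairs grounded in raw_text."""
    prompt = raw_text.strip() + "\n\n"
    return parse_pairs(generate(prompt))


# Stub generator standing in for a real model call (assumption for the demo).
def stub_generate(prompt: str) -> str:
    return "Q: What is the passage about?\nA: A toy example.\nunparseable line"


print(synthesize("This is a toy example passage.", stub_generate))
```

Keeping the model call behind a plain callable makes the parsing logic testable without loading a model, and lets the same code run against different synthesizer backends.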

Results and Performance

The reported results show performance gains in three settings:

- General Pre-Training: Models trained with Instruction Pre-Training outperform those trained with vanilla pre-training on standard benchmarks such as ARC-e, BoolQ, SIQA, and MMLU. Notably, the 500M-parameter model trained on 100B tokens performs on par with or better than 1B-parameter models from other prominent projects that were trained on significantly more data.

- Instruction Tuning: Models pre-trained with Instruction Pre-Training benefit more from subsequent instruction tuning than their vanilla counterparts, as shown by improved performance on the MMLU benchmark. The authors attribute this to the synthesized pre-training tasks resembling those seen during tuning, which makes learning faster and more effective.

- Domain-Specific Continuation: Continual pre-training of Llama3-8B with Instruction Pre-Training on specialized domains such as biomedicine and finance yields performance comparable to, or exceeding, that of Llama3-70B, a model roughly an order of magnitude larger. This indicates more effective acquisition of domain-specific knowledge.

Implications and Future Directions

The findings point to several directions for future research and practical application:

- Supervised Pre-Training: Instruction Pre-Training demonstrates that integrating supervised signals into the pre-training phase can substantially enhance the effectiveness and data efficiency of LLMs.

- Task Diversity and Generalization: By ensuring high-quality, diverse instruction-response pairs, the model generalizes better to a wide range of tasks, both seen and unseen during training.

- Scalability and Efficiency: Utilizing open-source models for instruction synthesis makes this approach more accessible and cost-effective, potentially democratizing access to advanced pre-training methods.

The theoretical implications revolve around the understanding of multitask learning and the inductive biases introduced through instruction-response pairs. Practically, this framework can inspire new protocols in pre-training LMs, especially in scenarios requiring extensive multitasking abilities and domain-specific expertise.

Conclusion

Cheng et al.'s "Instruction Pre-Training" is a substantial step toward using supervised multitask learning in LLM pre-training. By augmenting raw corpora with synthetically generated instruction-response pairs, the framework improves both the efficiency and the effectiveness of pre-training. Future research can build on this by exploring larger-scale applications and by refining the instruction synthesis process to further improve the quality and impact of the generated pairs.