Exploring the Limits of Masked Visual Representation Learning at Scale

The paper "Exploring the Limits of Masked Visual Representation Learning at Scale" introduces a vision-centric foundation model and investigates how far masked image modeling (MIM) can be scaled for visual representation learning. The model is a vanilla Vision Transformer (ViT) pre-trained on a single pretext task: reconstructing masked-out image-text aligned vision features from the visible image patches. This simple recipe lets the model scale to one billion parameters and perform strongly across a wide range of downstream tasks without relying heavily on labeled data.

Methodology

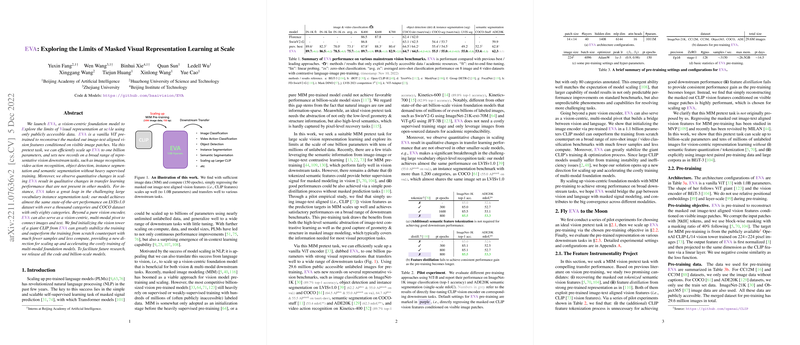

Pre-training uses MIM with image-text aligned features, specifically features produced by a CLIP vision encoder, as the prediction targets. This choice rests on an empirical study showing that directly regressing the masked-out CLIP features works better than alternatives such as feature tokenization or feature distillation. The architecture remains a vanilla ViT with no special modifications, which keeps the design simple and keeps the focus on how the approach scales.
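The pretext task can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. Random arrays stand in for the frozen CLIP target features and for the ViT's predictions. Masking is uniform random with a hypothetical 40% ratio. The loss is assumed to be a negative cosine similarity computed only over masked patches. The helper names `random_patch_mask` and `masked_feature_loss` are hypothetical.

```python
import numpy as np

def random_patch_mask(num_patches: int, mask_ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Boolean mask over patches; True marks a patch hidden from the ViT.

    Uniform random masking is a simplifying assumption for illustration.
    """
    num_masked = int(round(num_patches * mask_ratio))
    mask = np.zeros(num_patches, dtype=bool)
    mask[rng.choice(num_patches, size=num_masked, replace=False)] = True
    return mask

def masked_feature_loss(pred: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """Negative cosine similarity between predicted and target features,
    averaged over masked patches only (assumed loss form).

    pred, target: arrays of shape (num_patches, dim).
    """
    if not mask.any():
        raise ValueError("mask must select at least one patch")
    p = pred[mask] / np.linalg.norm(pred[mask], axis=-1, keepdims=True)
    t = target[mask] / np.linalg.norm(target[mask], axis=-1, keepdims=True)
    return float(-(p * t).sum(axis=-1).mean())

# Toy usage with hypothetical sizes: a 16x16 patch grid and 768-dim features.
rng = np.random.default_rng(0)
num_patches, dim = 256, 768
target = rng.standard_normal((num_patches, dim))  # stand-in for frozen CLIP features
pred = rng.standard_normal((num_patches, dim))    # stand-in for the ViT's predictions
mask = random_patch_mask(num_patches, mask_ratio=0.4, rng=rng)
loss = masked_feature_loss(pred, target, mask)
```

A perfect prediction gives a loss of -1. Because both sides are normalized, rescaling the predictions leaves the loss unchanged. This makes a cosine objective convenient when the target features and the student's outputs live on different scales.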

The authors train the model only on publicly available data: 29.6 million unlabeled images. This shows that high performance is achievable without proprietary datasets or supervised labels, which makes the results easier to reproduce and the approach more accessible.

Performance and Results

In downstream evaluations, the model, referred to as EVA, outperforms previous results on image recognition, object detection, semantic segmentation, and video action recognition. Notably, on large-vocabulary instance segmentation, EVA performs nearly as well on LVIS, which has over 1,000 categories, as on COCO, which has 80. This shows that its performance holds up as the number of categories grows. In other words, at this scale the gap between COCO and the harder, long-tailed LVIS benchmark largely closes.

The model's robustness is further highlighted on ImageNet variants. There, its accuracy drops only slightly relative to ImageNet-1K, indicating strong generalization. Its strength also carries over to video action recognition, where it sets new records on the Kinetics datasets. This shows that the learned representations transfer beyond static images.
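One simple way to quantify a "minimal performance drop" is the gap between ImageNet-1K top-1 accuracy and the mean top-1 accuracy over the variant test sets. The sketch below assumes that definition. The paper's exact set of variants and averaging scheme may differ, and the function name is hypothetical.

```python
from statistics import mean

def average_robustness_drop(in1k_top1: float, variant_top1: dict[str, float]) -> float:
    """Top-1 accuracy on ImageNet-1K minus the mean top-1 over ImageNet variants.

    Hypothetical helper; the simple unweighted average is an assumption.
    A smaller value means accuracy degrades less under distribution shift.
    """
    if not variant_top1:
        raise ValueError("need at least one variant accuracy")
    return in1k_top1 - mean(variant_top1.values())
```

Under this definition, a model whose accuracy on the variants stays close to its ImageNet-1K accuracy yields a value near zero.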

Vision as a Multi-Modal Pivot

Beyond serving as a visual encoder, EVA can act as a vision-centric pivot for multi-modal learning, notably for CLIP models. Initializing the vision tower of a large CLIP model with pre-trained EVA weights significantly stabilizes and accelerates training. It also requires fewer resources and achieves better zero-shot classification results.
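The CLIP side can be sketched as follows. The objective shown is the standard symmetric contrastive (InfoNCE) loss used by CLIP. Zero-shot classification picks the class whose text embedding is most similar to the image embedding. This is an illustrative NumPy sketch, not EVA-CLIP's training code. The embeddings are random stand-ins and the temperature of 0.07 is an assumed value. The EVA initialization itself, which loads pre-trained EVA weights into the image encoder instead of starting from random weights, is not shown.

```python
import numpy as np

def l2_normalize(x: np.ndarray) -> np.ndarray:
    # Project embeddings onto the unit sphere so dot products are cosine similarities.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    # Numerically stable log-softmax along the given axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def clip_contrastive_loss(image_emb: np.ndarray, text_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: row i of image_emb and row i of text_emb are a positive pair."""
    logits = l2_normalize(image_emb) @ l2_normalize(text_emb).T / temperature
    idx = np.arange(logits.shape[0])
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # each image vs. all texts
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # each text vs. all images
    return float((loss_i2t + loss_t2i) / 2)

def zero_shot_predict(image_emb: np.ndarray, class_text_emb: np.ndarray) -> np.ndarray:
    """Index of the most similar class text embedding for each image."""
    sims = l2_normalize(image_emb) @ l2_normalize(class_text_emb).T
    return sims.argmax(axis=1)

# Toy usage: random "class prompt" embeddings, and image embeddings lying close to them.
rng = np.random.default_rng(0)
text = rng.standard_normal((4, 512))
images = text + 0.01 * rng.standard_normal((4, 512))
preds = zero_shot_predict(images, text)
aligned_loss = clip_contrastive_loss(images, text)
shuffled_loss = clip_contrastive_loss(images, text[::-1])
```

Correctly paired images and texts give a much lower loss than mismatched pairs. In the same way, an image classifies correctly when its embedding lands nearest its own class prompt.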

Implications and Future Directions

The results suggest that MIM pre-training can be scaled effectively to billion-parameter models and reach state-of-the-art performance without extensive supervision. The implications of this work extend beyond visual recognition to multi-modal models, particularly contrastive language-image pre-training. By bridging vision and language, EVA points to future directions in which masked modeling and contrastive learning are interleaved to push capabilities further.

Overall, the paper makes a compelling case for large-scale MIM and its potential in both vision-specific and multi-modal applications, advancing the discussion of how to train large models at scale efficiently.