Essay: InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks

The rapid growth of large language models (LLMs) has driven significant advances in multi-modal artificial general intelligence (AGI) systems, yet vision and vision-language foundation models have not kept pace. The InternVL paper addresses this gap by presenting a large-scale vision-language foundation model that is aligned with LLMs and trained on web-scale image-text data.

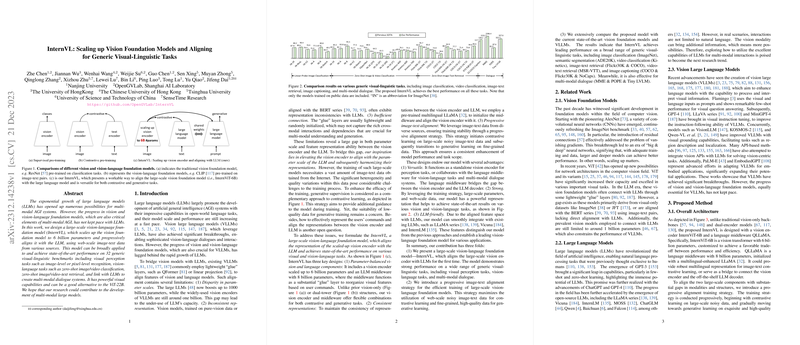

InternVL consists of a vision encoder, InternViT-6B, scaled up to six billion parameters, aligned with QLLaMA, a language middleware initialized from a multilingual LLaMA. This combination supports a wide range of visual-linguistic tasks, including image and video classification, image-text and video-text retrieval, and image captioning, and it achieves state-of-the-art performance on 32 established benchmarks.
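
To make "six billion parameters" concrete, the sketch below estimates the parameter count of a plain Vision Transformer encoder from its width, depth, and MLP width. The configuration used (width 3200, depth 48, MLP width 12800) is our recollection of the InternViT-6B settings and is an assumption to verify against the paper; the formula counts only standard attention, MLP, and LayerNorm weights, so it ignores patch and position embeddings and any design details specific to InternViT.

```python
def transformer_block_params(width: int, mlp_width: int) -> int:
    """Rough parameter count for one standard pre-norm ViT block (weights + biases)."""
    attention = 4 * width * width + 4 * width        # Q, K, V and output projections
    mlp = 2 * width * mlp_width + mlp_width + width  # up- and down-projection layers
    norms = 2 * (2 * width)                          # two LayerNorms, each with scale and shift
    return attention + mlp + norms


def vit_encoder_params(width: int, depth: int, mlp_width: int) -> int:
    """Stack `depth` identical blocks; ignores patch/position embeddings and any heads."""
    return depth * transformer_block_params(width, mlp_width)


# Assumed InternViT-6B-like configuration (verify against the paper).
total = vit_encoder_params(width=3200, depth=48, mlp_width=12800)
print(f"{total / 1e9:.2f}B parameters")  # prints "5.90B parameters"
```

The number of attention heads does not appear in the formula because, in standard multi-head attention, heads partition the same Q, K, and V projections rather than adding new weights; head count affects speed and stability, not size.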

The paper highlights several key aspects:

- Vision-Language Alignment: InternVL aligns the vision encoder with LLMs through a progressive training strategy: a contrastive learning phase on a massive web-scale dataset, followed by a generative learning phase on filtered, higher-quality data. This bridges the large gap in parameter scale and feature representation between vision encoders and LLMs.

- Scalable Architecture: InternViT-6B reaches six billion parameters, and its configuration was chosen to balance accuracy against efficiency at that scale. In linear evaluation on image classification, it outperforms the preceding state-of-the-art vision encoders it is compared against.

- Multilingual Capabilities: Because QLLaMA is initialized from a pre-trained multilingual LLaMA, InternVL offers robust multilingual support, which is useful for applications spanning many languages, as shown on several multilingual benchmarks.

- Efficacy in Zero-Shot Learning: InternVL performs well in zero-shot settings, notably classification and retrieval. This generalization stems from training on large, diverse web-scale data, which exposes the model to a broad range of concepts that transfer to unseen contexts.

- Compatibility with LLMs: Because its feature space is well aligned with language models, InternVL integrates easily with existing LLMs such as LLaMA, Vicuna, and InternLM. Separately, its strong results on visual perception tasks, including semantic segmentation and other pixel-level tasks, show that the vision encoder's features remain useful outside vision-language settings.
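
The contrastive phase of the alignment strategy can be illustrated with a CLIP-style symmetric InfoNCE loss, the standard form of image-text contrastive learning. This is a minimal pure-Python sketch, assuming (not quoting) that InternVL's first stage follows this general form; the temperature of 0.07 and the toy 2-D embeddings are illustrative stand-ins for real encoder outputs.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits, target):
    """Negative log-softmax probability of `target`, computed stably."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target]


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE: image i should match text i and vice versa within the batch."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, divided by the temperature.
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image-to-text: each row's correct column is on the diagonal.
    loss_i2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text-to-image: each column's correct row is on the diagonal.
    loss_t2i = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
matched = [[1.0, 0.0], [0.0, 1.0]]     # text i describes image i
mismatched = [[0.0, 1.0], [1.0, 0.0]]  # captions swapped
print(clip_contrastive_loss(images, matched) < clip_contrastive_loss(images, mismatched))  # True
```

Every other item in the batch serves as a negative, which is one reason contrastive training benefits from large batches and large, noisy datasets before the generative phase refines the alignment.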

InternVL achieves top-tier results in image classification, with consistent performance across benchmarks, and it is particularly strong in video classification and retrieval. Compared with EVA-02-CLIP-E+, for instance, InternVL achieves higher accuracy and more consistent results across the ImageNet-derived variant datasets, which suggests its features are robust to distribution shift.
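
Zero-shot classification with an aligned vision-language model typically works by embedding a text prompt for each class name and picking the class whose embedding is most similar to the image embedding. The sketch below assumes that CLIP-style procedure; `zero_shot_classify` is a hypothetical helper, the prompt template mentioned in the docstring is a common convention rather than InternVL's exact setup, and the toy vectors stand in for real encoder outputs.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(image_emb, class_text_embs):
    """Return (best_label, scores).

    `class_text_embs` maps each label to the text embedding of a prompt
    such as "a photo of a {label}" (hypothetical template).
    """
    scores = {label: cosine(image_emb, emb) for label, emb in class_text_embs.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy embeddings standing in for encoder outputs.
class_embs = {"cat": [1.0, 0.0, 0.1], "dog": [0.0, 1.0, 0.1]}
label, scores = zero_shot_classify([0.9, 0.2, 0.0], class_embs)
print(label)  # cat
```

No classifier is trained: adding a new category only requires embedding a new prompt, which is why evaluation on variant datasets with the same label set tests how well the shared embedding space holds up under distribution shift.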

These findings matter for the advancement of vision large language models (VLLMs). By showing that a scaled-up vision model can be aligned with LLMs and deliver robust performance across diverse tasks, InternVL sets a precedent for further work on AGI systems. Next steps include extending such alignment methods to additional modalities and making integration more efficient, both of which will be important for progress toward fully multi-modal AGI frameworks.

In conclusion, InternVL offers significant insights into scaling vision foundation models and aligning them with LLMs, narrowing the gap between vision models and LLM capabilities. It is a critical stepping stone for using web-scale data to build comprehensive visual-linguistic models, with implications for both theoretical research and practical AI applications.