Analyzing "Ask Me Anything: A Simple Strategy for Prompting LLMs"

The paper "Ask Me Anything: A Simple Strategy for Prompting LLMs" presents a methodology, referred to as Ask Me Anything Prompting (AMA), to enhance the efficacy of LLMs through strategic prompt aggregation without additional training. This research addresses the inherent brittleness of LLM prompting, where slight changes can lead to significant performance variations.

Prompt Sensitivity and Aggregation Approach

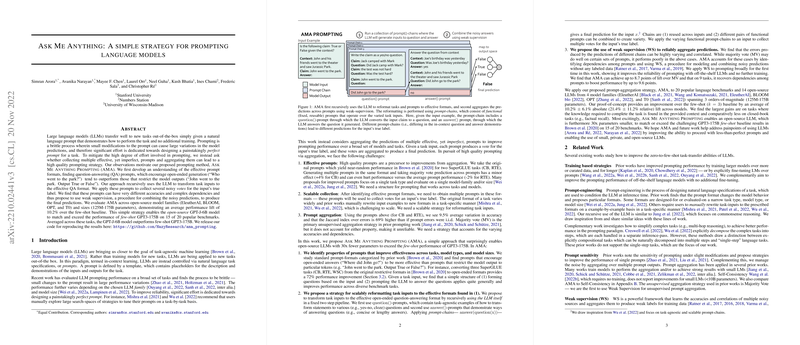

The paper identifies both the challenges and opportunities associated with LLM prompting. Typically, designing effective prompts is labor-intensive and task-specific. AMA mitigates this by aggregating multiple imperfect prompts into a cohesive strategy. The core idea is to leverage open-ended question-answering prompts over restricted-output formats, which has shown to improve performance due to their alignment with the LLM's pretraining objectives.

AMA Methodology

Prompt Creation and Aggregation: AMA utilizes a recursive process involving $\question$ and $\answer$ prompts. These functional prompts transform inputs into QA formats and gather varied votes for a final decision. The aggregation relies on weak supervision (WS) to combine predictions, addressing inconsistent accuracies and dependencies across prompts, unlike the simple majority vote.

Empirical Findings: Testing across 20 benchmarks, AMA demonstrated an average performance lift of 10.2% over baseline few-shot methods. Notably, AMA enabled a smaller, open-source model (GPT-J-6B) to match or exceed the few-shot abilities of GPT-3-175B on most tasks. The success was particularly pronounced on tasks requiring the synthesis of contextual information rather than purely relying on memorized knowledge.

Results Across Models and Tasks

The experiments encompassed diverse LLMs from EleutherAI, BLOOM, OPT, and T0, revealing consistent improvements across NLU, NLI, classification, and QA tasks. AMA's performance was notably superior on tasks where explicit context utilization was crucial, whereas gains were modest on those dependent on memorized facts.

Exploring Prompt Formats

A critical insight from the research is the effectiveness of QA-style prompts. Through analysis of The Pile corpus, the authors observed that QA structures appeared significantly more frequently in pretraining data than restricted formats. This suggests an alignment with pretraining that could explain the enhanced performance of open-ended prompts.

Future Implications

The AMA strategy highlights the potential of aggregation over meticulously designed individual prompts. By modeling prompt dependencies with WS, AMA adapts to varied task-specific and model-specific requirements without further fine-tuning. This flexibility presents an avenue for optimizing resource constraints, particularly for smaller models or when privacy is a concern.

Theoretical and Practical Impact

The paper contributes both practically and theoretically by balancing prompt-derived signal and latent dependencies. It provides a framework that can scale LLM functionality across broader applications with minimal manual intervention. This approach underscores a shift toward scalable prompt strategies that leverage inherent LLM capabilities, advocating for further exploration into aggregation methodologies and pretraining alignment.

AMA's insights lay foundational groundwork for more robust and adaptive interactions with LLMs, refining the bridging between static pretraining and dynamic, task-oriented deployment.